Overview of "VersusDebias: Universal Zero-Shot Debiasing for Text-to-Image Models via SLM-Based Prompt Engineering and Generative Adversary"

The paper introduces VersusDebias, a universal framework for zero-shot debiasing of text-to-image (T2I) models. The authors address a significant concern raised by the rapid development of T2I models: demographic and ethical biases in generated images. Existing debiasing methods often lack adaptability and zero-shot capability, which limits their practical application. VersusDebias aims to overcome these limitations with a dual-module design consisting of an Array Generation (AG) module and an Image Generation (IG) module.

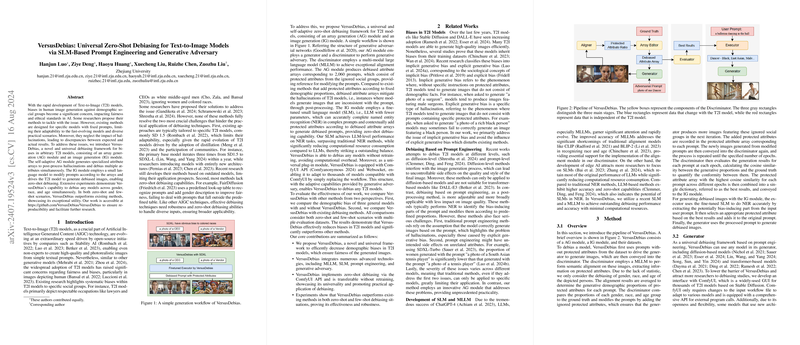

Framework Composition

The VersusDebias framework integrates several technologies: multimodal large language models (MLLMs), small language models (SLMs), prompt engineering, and generative adversarial techniques. In the AG module, the discriminator uses an MLLM for semantic image alignment, which is key to detecting biases in the generated outputs. The array editor then adjusts prompts by adding underrepresented attributes. The IG module, powered by a fine-tuned SLM, performs named entity recognition (NER) to rewrite prompts with debiased attributes, enabling zero-shot debiasing.
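The feedback loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the authors' implementation: the function names, the uniform target distribution, the fixed `step` size, and the keyword set standing in for the SLM's NER step are all assumptions. The discriminator's output is represented as a plain list of attribute labels, as if an MLLM had already labeled each generated image.

```python
import random
from collections import Counter

# Hypothetical stand-in for the SLM's NER step: a fixed set of person nouns.
PERSON_TERMS = {"person", "doctor", "nurse", "teacher", "engineer", "ceo"}


def observed_shares(labels, values):
    """Share of generated images that the (stand-in) MLLM discriminator
    labeled with each attribute value."""
    counts = Counter(labels)
    total = sum(counts[v] for v in values)
    if total == 0:
        return {v: 1.0 / len(values) for v in values}
    return {v: counts[v] / total for v in values}


def edit_array(weights, shares, step=0.5):
    """Hypothetical array-editor update: move sampling weight toward values
    the T2I model under-produces, assuming a uniform target distribution."""
    target = 1.0 / len(weights)
    raw = {v: max(w + step * (target - shares[v]), 1e-6) for v, w in weights.items()}
    norm = sum(raw.values())
    return {v: w / norm for v, w in raw.items()}


def sample_attribute(weights, rng):
    """Draw one attribute value according to the current weights."""
    values = list(weights)
    return rng.choices(values, weights=[weights[v] for v in values], k=1)[0]


def rewrite_prompt(prompt, attribute, person_terms=PERSON_TERMS):
    """Insert the attribute before the first person noun; return the prompt
    unchanged if no person entity is found."""
    tokens = prompt.split()
    for i, tok in enumerate(tokens):
        if tok.lower().strip(".,!?") in person_terms:
            return " ".join(tokens[:i] + [attribute] + tokens[i:])
    return prompt


if __name__ == "__main__":
    values = ["male", "female"]
    weights = {v: 0.5 for v in values}
    # Toy discriminator labels for 10 images generated from "a photo of a doctor".
    labels = ["male"] * 8 + ["female"] * 2
    weights = edit_array(weights, observed_shares(labels, values))
    print(weights)  # female is now weighted higher than male
    rng = random.Random(0)
    print(rewrite_prompt("a photo of a doctor", sample_attribute(weights, rng)))
```

In a real pipeline the keyword lookup would be replaced by the fine-tuned SLM, which can also handle details this toy version ignores, such as choosing "an" before "elderly".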

Evaluation and Results

Extensive experiments demonstrate VersusDebias's effectiveness in debiasing T2I models across gender, race, and age simultaneously. The framework consistently performs well in both zero-shot and few-shot scenarios, surpassing baseline methods like FairDiffusion and PreciseDebias. Notably, VersusDebias maintains image quality while reducing biases, highlighting its utility as a universal debiasing framework suitable for various T2I models without retraining.
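One way to make "debiasing across gender, race, and age simultaneously" concrete is to measure skew separately for each attribute. As an illustrative assumption (the paper's own evaluation metric is not given here and may differ), the sketch below uses the total variation distance between the observed label distribution and a uniform target, where 0 means perfectly balanced. The record format and attribute lists are hypothetical.

```python
from collections import Counter


def tv_from_uniform(labels, values):
    """Total variation distance between observed label shares and a uniform
    distribution over `values` (0 = balanced; the maximum, 1 - 1/len(values),
    occurs when every image has the same label)."""
    counts = Counter(labels)
    total = sum(counts[v] for v in values)
    if total == 0:
        return 0.0
    target = 1.0 / len(values)
    return 0.5 * sum(abs(counts[v] / total - target) for v in values)


def bias_report(records, attribute_values):
    """Per-attribute skew for images described by discriminator labels.

    `records` is a list of dicts such as {"gender": "female", "age": "young"};
    this format is a hypothetical choice for the sketch.
    """
    return {
        attr: tv_from_uniform([r[attr] for r in records if attr in r], values)
        for attr, values in attribute_values.items()
    }


if __name__ == "__main__":
    records = [
        {"gender": "male", "age": "young"},
        {"gender": "male", "age": "elderly"},
        {"gender": "male", "age": "young"},
        {"gender": "female", "age": "elderly"},
    ]
    space = {"gender": ["male", "female"], "age": ["young", "elderly"]}
    print(bias_report(records, space))  # {'gender': 0.25, 'age': 0.0}
```

Reporting one score per attribute, rather than a single pooled number, shows whether reducing one bias (for example, gender) comes at the cost of another (for example, age).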

Implications and Future Directions

VersusDebias represents a substantial advancement in the field of unbiased AI-generated content. Its ability to adapt dynamically to evolving T2I models makes it a promising tool for a fairer generative AI ecosystem. The paper underscores the need for continued improvement in handling explicit biases and extreme hallucinations, which remain challenging. Future research could refine the alignment accuracy of MLLMs and extend the framework to robustly mitigate both implicit and explicit biases.

In sum, VersusDebias not only contributes to the theoretical understanding of bias mitigation in generative models but also offers a practical solution that can be readily applied across various contexts in AI image synthesis. Its modular design and adaptability underline its potential as a cornerstone for future developments in debiasing methodologies within the AI community.