Overview of Non-Stationary Direct Preference Optimization under Preference Drift

Introduction

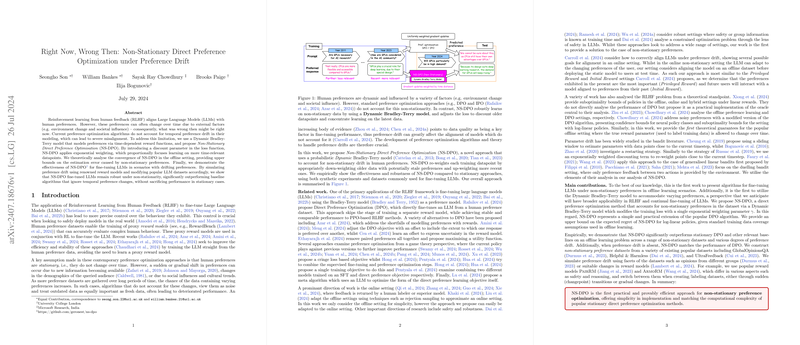

The paper "Right Now, Wrong Then: Non-Stationary Direct Preference Optimization under Preference Drift" addresses a critical limitation in current Reinforcement Learning from Human Feedback (RLHF) algorithms: the assumption that human preferences remain stationary over time. This assumption does not hold in real-world scenarios where preferences evolve due to environmental changes, societal influences, or other external factors. To bridge this gap, the authors propose the Non-Stationary Direct Preference Optimization (NS-DPO) method, which incorporates a Dynamic Bradley-Terry (DBT) model to accommodate time-dependent preferences.

Methodology

Dynamic Bradley-Terry Model

The core innovation of NS-DPO is the application of the Dynamic Bradley-Terry model to preference optimization. In the stationary Bradley-Terry model, the probability of preferring one response over another is time-invariant. The DBT model instead allows this probability to vary over time through a time-dependent reward function:

$p(a \succ a' \mid x, t) = \sigma\left(r(x, a, t) - r(x, a', t)\right),$

where $\sigma$ is the logistic sigmoid and the reward $r(x, a, t)$ changes with the time step $t$. NS-DPO introduces an exponentially weighted loss function with a discount parameter $\gamma$ that prioritizes more recent data, addressing temporal preference drift.
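To make the time dependence concrete, the sketch below evaluates the DBT probability $\sigma(r(x, a, t) - r(x, a', t))$ for one fixed pair of responses under two different reward parameters. It assumes, purely for illustration, a linear reward $r(x, a, t) = \langle \theta_t, \phi(x, a) \rangle$ with hypothetical two-dimensional features; `linear_reward` and `dbt_preference_prob` are hypothetical helper names, not code from the paper.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function sigma(z) = 1 / (1 + exp(-z)), evaluated without overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def linear_reward(theta_t, features):
    """Hypothetical time-varying reward r(x, a, t) = <theta_t, phi(x, a)>."""
    return sum(w * f for w, f in zip(theta_t, features))


def dbt_preference_prob(theta_t, phi_a, phi_b):
    """P(a preferred over a' | x, t) = sigma(r(x, a, t) - r(x, a', t))."""
    return sigmoid(linear_reward(theta_t, phi_a) - linear_reward(theta_t, phi_b))


# Hypothetical 2-d features phi(x, a) and phi(x, a') for one prompt x.
phi_a = [1.0, 0.0]
phi_b = [0.0, 1.0]

# The reward parameter drifts: early labelers value feature 0, later ones feature 1.
theta_early = [2.0, 0.0]
theta_late = [0.0, 2.0]

p_early = dbt_preference_prob(theta_early, phi_a, phi_b)  # sigma(2), about 0.881
p_late = dbt_preference_prob(theta_late, phi_a, phi_b)    # sigma(-2), about 0.119
```

The same pair is preferred with probability about 0.88 under the early parameter and about 0.12 under the late one: the response that is "right now" was "wrong then", a situation a single time-invariant reward cannot represent.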

Non-Stationary Direct Preference Optimization (NS-DPO)

NS-DPO modifies the Direct Preference Optimization (DPO) framework by incorporating time-varying rewards. The implicit reward function is defined as:

$r(x, a, T) = \tau \log \frac{\pi_{\theta_T}(a|x)}{\pi_{\text{ref}}(a|x)} + \tau \log Z_{\theta_T}(x),$

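where $\pi_{\theta_T}$ is the policy at time $T$, $\pi_{\text{ref}}$ is the reference policy, $\tau > 0$ controls the strength of the KL regularization toward $\pi_{\text{ref}}$, and $Z_{\theta_T}(x)$ is a normalization constant that depends only on the prompt $x$. The NS-DPO loss function is then formulated as: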

$\mathcal{L}_{\text{NS-DPO}}(\theta_T) = \sum_{(x_i, a_i, a'_i, t_i) \sim \mathcal{D}} - \gamma^{T-t_i-1} \log \sigma \left( \tau \log \frac{\pi_{\theta_T}(a_i|x_i)}{\pi_{\text{ref}}(a_i|x_i)} - \tau \log \frac{\pi_{\theta_T}(a_i'|x_i)}{\pi_{\text{ref}}(a_i'|x_i)} \right).$
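The normalization constant never has to be computed. Because $Z_{\theta_T}(x)$ depends only on the prompt, it cancels in the reward difference on which the Bradley-Terry likelihood $\sigma\big(r(x, a, T) - r(x, a', T)\big)$ depends:

$$
\begin{aligned}
r(x, a, T) - r(x, a', T)
&= \tau \log \frac{\pi_{\theta_T}(a|x)}{\pi_{\text{ref}}(a|x)} + \tau \log Z_{\theta_T}(x) - \tau \log \frac{\pi_{\theta_T}(a'|x)}{\pi_{\text{ref}}(a'|x)} - \tau \log Z_{\theta_T}(x) \\
&= \tau \log \frac{\pi_{\theta_T}(a|x)}{\pi_{\text{ref}}(a|x)} - \tau \log \frac{\pi_{\theta_T}(a'|x)}{\pi_{\text{ref}}(a'|x)}.
\end{aligned}
$$

Taking $-\log \sigma(\cdot)$ of this difference for each comparison $(x_i, a_i, a'_i, t_i)$ and multiplying by $\gamma^{T-t_i-1}$ gives exactly the summand of $\mathcal{L}_{\text{NS-DPO}}$.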

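This loss function down-weights older data: a comparison collected at time $t_i$ is weighted by $\gamma^{T-t_i-1}$, which decays geometrically with the age of the comparison, so the model learns more from recent data, where preferences are most relevant. Setting $\gamma = 1$ weights all comparisons equally and recovers the stationary DPO loss.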
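The weighted loss can be sketched in plain Python. This sketch assumes the per-response log-probabilities $\log \pi_{\theta_T}(a|x)$ and $\log \pi_{\text{ref}}(a|x)$ have already been computed (summed over tokens) by some model; `PreferencePair` and `ns_dpo_loss` are hypothetical names, and this is not the authors' implementation. In an actual training loop these values would be differentiable tensors, and a library routine such as PyTorch's `torch.nn.functional.logsigmoid` would replace the hand-written `log_sigmoid`.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One timestamped comparison; log-probs are assumed summed over response tokens."""
    policy_logp_chosen: float    # log pi_theta_T(a_i | x_i)
    policy_logp_rejected: float  # log pi_theta_T(a'_i | x_i)
    ref_logp_chosen: float       # log pi_ref(a_i | x_i)
    ref_logp_rejected: float     # log pi_ref(a'_i | x_i)
    t: int                       # time step t_i at which the label was collected


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigma(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def ns_dpo_loss(pairs, tau: float, gamma: float, T: int) -> float:
    """Sum over pairs of -gamma^(T - t_i - 1) * log sigma(implicit reward margin)."""
    total = 0.0
    for p in pairs:
        # tau * log-ratio for the chosen response minus the same for the rejected one.
        margin = tau * (p.policy_logp_chosen - p.ref_logp_chosen) - tau * (
            p.policy_logp_rejected - p.ref_logp_rejected
        )
        # Weight is 1 for the newest step t = T - 1 and shrinks by gamma per step of age.
        weight = gamma ** (T - p.t - 1)
        total += -weight * log_sigmoid(margin)
    return total


# Two comparisons with identical log-probs, collected at t = 0 (old) and t = 9 (recent).
pairs = [
    PreferencePair(-10.0, -12.0, -11.0, -11.0, t=0),
    PreferencePair(-10.0, -12.0, -11.0, -11.0, t=9),
]
loss_ns = ns_dpo_loss(pairs, tau=0.1, gamma=0.9, T=10)
loss_dpo = ns_dpo_loss(pairs, tau=0.1, gamma=1.0, T=10)  # gamma = 1: stationary DPO
```

With $\gamma = 0.9$, the comparison from nine steps earlier contributes only $0.9^9 \approx 0.39$ of the weight of the newest one, whereas $\gamma = 1$ weights both equally. The sketch follows the summed form of the loss given above; averaging over the dataset instead would only rescale it.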

Theoretical Analysis

The paper provides theoretical guarantees on the performance of NS-DPO. The authors prove an upper bound on the expected regret of NS-DPO for log-linear policies, showing that the complexity of the regret bound is . This bound is comparable to state-of-the-art methods in stationary settings but extends to accommodate non-stationary environments.
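The regret guarantee is stated for log-linear policies, that is, policies of the form $\pi_\theta(a|x) \propto \exp(\langle \theta, \phi(x, a) \rangle)$ for some feature map $\phi$. The sketch below computes such a policy over a small finite action set. The feature vectors are hypothetical, and the finite action set is a simplifying assumption (for an LLM, an "action" is an entire response).

```python
import math


def log_linear_policy(theta, features_per_action):
    """pi_theta(a | x) proportional to exp(<theta, phi(x, a)>) over a finite action set.

    features_per_action[k] is the (hypothetical) feature vector phi(x, a_k).
    """
    logits = [sum(w * f for w, f in zip(theta, phi)) for phi in features_per_action]
    m = max(logits)  # subtract the largest logit before exponentiating, for stability
    exps = [math.exp(logit - m) for logit in logits]
    z = sum(exps)  # normalizer over all actions
    return [e / z for e in exps]


theta = [1.0, -0.5]
phis = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # logits 1.0, -0.5 and 0.25
probs = log_linear_policy(theta, phis)
```

For any two actions the normalizer cancels, so $\log \frac{\pi_\theta(a|x)}{\pi_\theta(a'|x)} = \langle \theta, \phi(x, a) - \phi(x, a') \rangle$ (here $1.0 - (-0.5) = 1.5$ for the first two actions). The policy part of the NS-DPO margin is therefore linear in $\theta$ for this class.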

Empirical Validation

The efficacy of NS-DPO is demonstrated through comprehensive experiments:

- Synthetic Experiments:

- NS-DPO significantly outperforms stationary DPO and other baselines in settings with controlled preference drifts.

- The performance of NS-DPO is robust across a range of values of the discount parameter $\gamma$, highlighting its adaptability.

- LLM Experiments:

- NS-DPO was tested on datasets in which preference drift was simulated using reward models such as PairRM and ArmoRM.

- NS-DPO consistently outperformed stationary methods, whether preferences changed abruptly or gradually.
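The experiments above are described only at a high level. As a purely hypothetical illustration of the difference between abrupt and gradual drift, the sketch below interpolates a linear reward parameter from an old value to a new one over $T$ time steps and reports the resulting Bradley-Terry probability that a fixed response $a$ is preferred over $a'$. The schedules, features, and function names are assumptions for illustration, not the paper's experimental protocol.

```python
import math


def drift_weight(t: int, T: int, mode: str, change_point: int) -> float:
    """Hypothetical schedule: fraction of the way from old to new preference at time t."""
    if mode == "abrupt":
        return 0.0 if t < change_point else 1.0  # switch at a single change point
    if mode == "gradual":
        return t / (T - 1)  # linear ramp from 0 at t = 0 to 1 at t = T - 1 (ignores change_point)
    raise ValueError(f"unknown mode: {mode}")


def drifting_theta(t, T, mode, theta_old, theta_new, change_point):
    """Interpolate the reward parameter theta_t between theta_old and theta_new."""
    w = drift_weight(t, T, mode, change_point)
    return [(1 - w) * a + w * b for a, b in zip(theta_old, theta_new)]


def prob_a_preferred(theta, phi_a, phi_b):
    """Bradley-Terry probability sigma(<theta, phi_a - phi_b>) for a linear reward."""
    margin = sum(w * (fa - fb) for w, fa, fb in zip(theta, phi_a, phi_b))
    return 1.0 / (1.0 + math.exp(-margin))


T = 10
theta_old, theta_new = [2.0, 0.0], [0.0, 2.0]
phi_a, phi_b = [1.0, 0.0], [0.0, 1.0]

abrupt = [
    prob_a_preferred(drifting_theta(t, T, "abrupt", theta_old, theta_new, 5), phi_a, phi_b)
    for t in range(T)
]
gradual = [
    prob_a_preferred(drifting_theta(t, T, "gradual", theta_old, theta_new, 5), phi_a, phi_b)
    for t in range(T)
]
```

Under the abrupt schedule the preferred response flips at the change point $t = 5$; under the gradual one the probability slides from about 0.88 to about 0.12. Timestamped labels sampled from either schedule are the kind of data the $\gamma$-weighted loss is meant to handle.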

Implications and Future Work

The introduction of NS-DPO has several important implications:

- Practical Impact: Practitioners can now fine-tune LLMs in environments where preferences are expected to drift over time. This is particularly useful for applications in social media, content recommendation, and personalized AI systems.

- Theoretical Contribution: The theoretical framework for handling preference drift in RLHF opens a new direction for research, and it could be extended to other non-stationary settings, such as online learning and continuous deployment.

- Future Developments: The approach can be adapted to more complex models and could incorporate advanced techniques like meta-learning to optimize the discount parameter dynamically.

Conclusion

The paper offers a principled approach to the problem of non-stationary preferences in RLHF. By leveraging the Dynamic Bradley-Terry model and introducing the NS-DPO framework, the authors provide a method that aligns models with current human preferences while remaining adaptive as those preferences change. This work makes preference-based fine-tuning better suited to environments where what people want shifts over time.