This paper introduces the emerging field of Technical AI Governance (TAIG), defined as technical analysis and tools designed to support the effective governance of AI (Reuel et al., 20 Jul 2024). The authors argue that TAIG is crucial because policymakers and other decision-makers often lack the technical information and tools needed to identify where AI governance interventions are required, assess the efficacy of different options, and implement policies effectively. TAIG can contribute by:

- Identifying areas needing governance intervention (e.g., predicting risks from AI advances).

- Informing governance decisions by providing accurate assessments (e.g., evaluating the effectiveness of different regulations based on technical feasibility).

- Enhancing governance options by providing tools for enforcement, incentivization, or compliance (e.g., developing robust auditing methods).

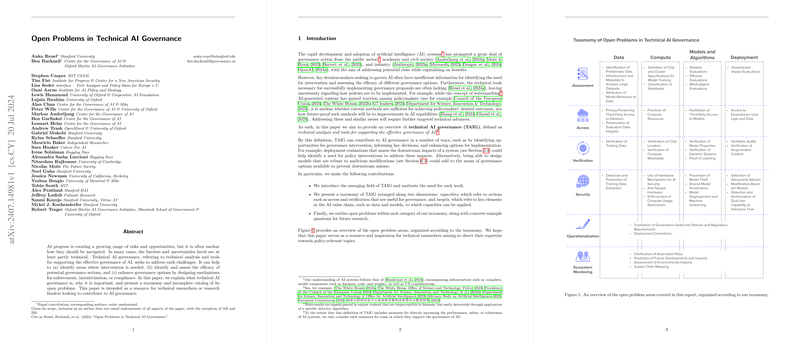

The paper presents a taxonomy of open problems in TAIG organized along two dimensions:

- Capacities: Actions useful for governance (Assessment, Access, Verification, Security, Operationalization, Ecosystem Monitoring).

- Targets: Key elements in the AI value chain (Data, Compute, Models and Algorithms, Deployment).
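
The two dimensions can be read as a grid of (capacity, target) cells. The sketch below is a hypothetical Python encoding, not something taken from the paper, that enumerates those cells. Because this summary lists the Operationalization and Ecosystem Monitoring problems without a per-target breakdown, the sketch assumes a single target-agnostic cell for each of those two capacities.

```python
from enum import Enum
from typing import List, Optional, Tuple


class Capacity(Enum):
    ASSESSMENT = "Assessment"
    ACCESS = "Access"
    VERIFICATION = "Verification"
    SECURITY = "Security"
    OPERATIONALIZATION = "Operationalization"
    ECOSYSTEM_MONITORING = "Ecosystem Monitoring"


class Target(Enum):
    DATA = "Data"
    COMPUTE = "Compute"
    MODELS_AND_ALGORITHMS = "Models and Algorithms"
    DEPLOYMENT = "Deployment"


# Assumed encoding: capacities whose open problems this summary splits by target.
SPLIT_BY_TARGET = {Capacity.ASSESSMENT, Capacity.ACCESS, Capacity.VERIFICATION, Capacity.SECURITY}

Cell = Tuple[Capacity, Optional[Target]]


def taxonomy_cells() -> List[Cell]:
    """Return every (capacity, target) cell; target is None when not split by target."""
    cells: List[Cell] = []
    for capacity in Capacity:  # Enum iteration follows definition order
        if capacity in SPLIT_BY_TARGET:
            cells.extend((capacity, target) for target in Target)
        else:
            cells.append((capacity, None))
    return cells


if __name__ == "__main__":
    for capacity, target in taxonomy_cells():
        print(f"{capacity.value:<22} {target.value if target else '(not split by target)'}")
```

Filtering the cells by `Target.COMPUTE`, for instance, gathers the compute-related open problems across all capacities.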

The bulk of the paper details open problems within this taxonomy:

1. Assessment: Evaluating AI systems, capabilities, and risks.

- Data: Identifying problematic data (copyrighted, private, harmful, or biased) in massive datasets, both with and without direct access to them; building infrastructure for large-scale dataset analysis; attributing model behavior to specific training data points (including the effects of synthetic data).

- Compute: Defining chip and cluster specifications that distinguish AI training from other uses; classifying compute workloads (training, inference, non-AI) reliably, in a privacy-preserving way, and robustly against gaming.

- Models & Algorithms: Developing reliable and efficient evaluations (measuring thoroughness, accounting for data contamination, using mechanistic analysis); scaling red-teaming; evaluating agentic and multi-agent systems.

- Deployment: Accurately evaluating downstream societal impacts across diverse contexts; ensuring evaluation validity (both construct and ecological validity); designing dynamic simulation environments that reflect real-world conditions.
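
Data contamination, where evaluation items leak into training data, is one of the evaluation-reliability issues listed above. A common, if crude, heuristic flags an evaluation item when it shares a long word n-gram with the training corpus. The sketch below assumes direct access to the training text, lowercase whitespace tokenization, and a default of n = 13. These are illustrative choices, not the paper's method, and exact matching misses paraphrased or translated leakage.

```python
from typing import Iterable, List, Set, Tuple

NGram = Tuple[str, ...]


def word_ngrams(text: str, n: int) -> Set[NGram]:
    """Lowercased, whitespace-tokenized word n-grams (empty if text has fewer than n words)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(eval_items: List[str], training_docs: Iterable[str], n: int = 13) -> float:
    """Fraction of evaluation items sharing at least one word n-gram with the training docs."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if not eval_items:
        return 0.0
    train_ngrams: Set[NGram] = set()
    for doc in training_docs:
        train_ngrams |= word_ngrams(doc, n)
    flagged = sum(1 for item in eval_items if word_ngrams(item, n) & train_ngrams)
    return flagged / len(eval_items)


if __name__ == "__main__":
    evals = ["the capital of france is paris", "two plus two equals four"]
    train = ["we all know the capital of france is paris"]
    print(contamination_rate(evals, train, n=3))  # 0.5: only the first item overlaps
```

When only black-box access to the model is available, this kind of direct corpus comparison is not possible, which is one reason the paper treats contamination as an open problem.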

2. Access: Enabling third parties (auditors, researchers, governments) to interact with AI systems and related resources while managing the associated risks.

- Data: Providing privacy-preserving third-party access to training datasets; preserving evaluation data integrity against contamination.

- Compute: Addressing compute inequities for non-industry researchers through public infrastructure; ensuring interoperability, sustainability, and fair allocation of public compute resources.

- Models & Algorithms: Facilitating different levels of third-party model access (beyond black-box) for research/auditing while mitigating IP/security risks; ensuring model version stability for reproducibility.

- Deployment: Enabling access to downstream user logs/data for impact assessment while preserving user privacy; clarifying access responsibilities along the AI value chain.
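
Privacy-preserving access to deployment logs is often framed in terms of differential privacy. As a minimal sketch, and not a mechanism the paper prescribes, the Laplace mechanism below releases a noisy count of log records that match a predicate, such as the number of sessions a harm classifier flagged. It assumes each user contributes at most one record, so the count has sensitivity 1. If users contribute several records, the noise scale must grow accordingly. The log format is hypothetical.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. Exponential(rate=1/scale) draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Release a count of matching records under epsilon-differential privacy.

    Assumes one record per user, so adding or removing a user changes the
    true count by at most 1 (sensitivity 1) and noise of scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical log: one record per user, every tenth user flagged (true count 100).
    logs = [{"user_id": i, "flagged": i % 10 == 0} for i in range(1000)]
    print(private_count(logs, lambda r: r["flagged"], epsilon=0.5))  # a noisy value near 100
```

Each additional release on the same data spends more of the privacy budget, so the number of queries must be tracked. A production mechanism would also need a cryptographically secure random source and careful handling of floating-point artifacts, which this sketch ignores.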

3. Verification: Confirming claims about AI systems' development, properties, and compliance.

- Data: Verifying that a model was (or was not) trained on specific data; verifying fair data use (e.g., license compliance).

- Compute: Robustly verifying the physical location of AI hardware; verifying properties of compute workloads (e.g., size, type) using methods such as trusted execution environments (TEEs) or trusted clusters, while ensuring privacy and scalability.

- Models & Algorithms: Verifying model properties (capabilities, architecture) with and without full access (e.g., using formal methods or zero-knowledge proofs); verifying dynamic systems through tracking updates; developing scalable and robust proof-of-learning mechanisms.

- Deployment: Enabling verifiable audits (proving audit process/outcome, linking audits to deployed versions); verifying AI-generated content through robust watermarking or detection methods.
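
One family of approaches to location verification relies on the fact that no signal travels faster than light. If a trusted landmark server challenges a chip and receives the response after a round-trip time RTT, the chip can be at most c · RTT / 2 away. The sketch below computes that bound. It assumes the response is cryptographically bound to the chip (for example, signed with a key held in the hardware, which is not shown); otherwise a nearby proxy could answer on the chip's behalf. Delays can only make a chip appear farther away, never closer, so a single measurement supports claims of the form "the chip is within X km of this landmark". Pinning a chip to a region requires several landmarks.

```python
# Speed of light in vacuum: 299,792.458 km/s, i.e. about 299.79 km per millisecond.
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000.0


def max_distance_km(rtt_ms: float) -> float:
    """Upper bound on the distance to a responder, given a round-trip time in ms.

    The signal covers the distance twice, and processing delay only adds time,
    so the true distance cannot exceed c * rtt / 2.
    """
    if rtt_ms < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_KM_PER_MS * rtt_ms / 2.0


def proves_within(rtt_ms: float, radius_km: float) -> bool:
    """True if the measurement proves the responder lies within radius_km of the landmark.

    False is inconclusive: it does not show the responder is outside the radius.
    """
    return max_distance_km(rtt_ms) <= radius_km


if __name__ == "__main__":
    print(f"{max_distance_km(10.0):.1f} km")  # 1499.0 km for a 10 ms round trip
```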

4. Security: Protecting AI system components from unauthorized access, use, or tampering.

- Data: Detecting and preventing the extraction of training data from models.

- Compute: Using hardware mechanisms (like TEEs) for AI security at scale; developing robust anti-tamper hardware (tamper-evidence/responsiveness); enforcing compute usage restrictions (e.g., for export controls).

- Models & Algorithms: Preventing model theft (through physical security and cybersecurity, and by defending against inference attacks); enabling shared model governance (e.g., via model splitting, secure multi-party computation (SMPC), homomorphic encryption (HE), or TEEs); developing robust methods for model disgorgement and for machine unlearning or editing to remove unwanted knowledge or behaviors.

- Deployment: Detecting adversarial attacks at inference time; developing modification-resistant models (preventing malicious fine-tuning); detecting and authorizing requests for dual-use capabilities.
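
Secure multi-party computation, one of the shared-governance techniques listed above, rests on secret sharing. The sketch below shows only the first step and makes simple assumptions: fixed-point encoding of weights with a hypothetical scale of 2^16, and additive n-out-of-n sharing modulo the Mersenne prime 2^61 - 1. Each party's shares alone are uniformly random, so no strict subset of the parties learns the weights. Running inference on the shared weights requires a full SMPC protocol (for example, to handle multiplications), which is not shown.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # Mersenne prime field modulus; assumed large enough for the encoded weights


def share(value: int, n_parties: int) -> List[int]:
    """Split an integer in [0, PRIME) into n additive shares modulo PRIME."""
    if n_parties < 2:
        raise ValueError("need at least two parties")
    if not 0 <= value < PRIME:
        raise ValueError("value must lie in [0, PRIME)")
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)  # last share makes the sum equal value
    return shares


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % PRIME


def share_weights(weights: List[float], n_parties: int, scale: int = 2**16) -> List[List[int]]:
    """Fixed-point encode each weight (negatives wrap modulo PRIME) and share it.

    Returns one list of shares per party.
    """
    per_weight = [share(round(w * scale) % PRIME, n_parties) for w in weights]
    return [list(party) for party in zip(*per_weight)]


def reconstruct_weights(party_shares: List[List[int]], scale: int = 2**16) -> List[float]:
    """Combine all parties' shares and decode back to floats."""
    out = []
    for column in zip(*party_shares):
        v = reconstruct(list(column))
        if v > PRIME // 2:  # values in the upper half encode negative numbers
            v -= PRIME
        out.append(v / scale)
    return out
```

For example, `reconstruct_weights(share_weights([0.5, -1.25, 3.0], 3))` recovers the original list, while any two of the three parties' share lists on their own are indistinguishable from random numbers.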

5. Operationalization: Translating governance goals into concrete technical strategies, procedures, and standards.

- Identifying reliable technical indicators of risk for regulation (beyond just compute thresholds).

- Developing specific, verifiable technical standards for AI safety, fairness, security, etc., across the AI life cycle.

- Defining technical options for deployment corrections when flaws are found post-deployment (ranging from restrictions to shutdown).
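
Compute thresholds, the baseline indicator that the first open problem above seeks to move beyond, typically rest on an estimate of training compute. A widely used approximation for dense transformers is C ≈ 6ND floating-point operations for N parameters and D training tokens (roughly 2ND for the forward pass and 4ND for the backward pass). The sketch below applies it. The model size, token count, and threshold in the example are illustrative assumptions, although 10^25 FLOP is, for instance, the level at which the EU AI Act presumes systemic risk for general-purpose AI models. The approximation ignores attention and other overheads. It also says nothing about the training data, the deployment context, or the model's actual capabilities.

```python
def training_flop_estimate(n_params: float, n_tokens: float) -> float:
    """Approximate dense-transformer training compute as 6 * N * D FLOP."""
    if n_params < 0 or n_tokens < 0:
        raise ValueError("parameter and token counts must be non-negative")
    return 6.0 * n_params * n_tokens


def exceeds_threshold(n_params: float, n_tokens: float, threshold_flop: float) -> bool:
    """True if the estimated training compute is strictly above the threshold."""
    return training_flop_estimate(n_params, n_tokens) > threshold_flop


if __name__ == "__main__":
    # Hypothetical model: 70e9 parameters trained on 15e12 tokens.
    flop = training_flop_estimate(70e9, 15e12)
    print(f"{flop:.2e} FLOP; above 1e25? {exceeds_threshold(70e9, 15e12, 1e25)}")
    # prints: 6.30e+24 FLOP; above 1e25? False
```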

6. Ecosystem Monitoring: Understanding the evolving AI landscape and its impacts.

- Clarifying AI-related risks through better threat models and incident reporting.

- Predicting future developments and impacts by measuring and extrapolating trends.

- Assessing the environmental impacts (energy, water, resources) across the AI life cycle.

- Mapping AI supply chains to understand actors, dependencies, and potential intervention points.
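
Measuring and extrapolating trends often comes down to fitting an exponential. One regresses log2 of a quantity (such as the training compute of notable models) on time, reads the doubling time off the slope, and extends the line. The sketch below does this with ordinary least squares. The series in the example is synthetic, constructed to double exactly every six months, and is not a real measurement. Real extrapolations also need uncertainty estimates and checks for trend breaks, which this sketch omits.

```python
import math
from typing import List, Tuple


def fit_log2_trend(years: List[float], values: List[float]) -> Tuple[float, float, float]:
    """OLS fit of log2(value) against year; returns (slope, mean_year, mean_log2)."""
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two paired observations")
    if any(v <= 0 for v in values):
        raise ValueError("values must be positive to take logarithms")
    logs = [math.log2(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    if sxx == 0:
        raise ValueError("years must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    return sxy / sxx, mean_x, mean_y  # slope is in doublings per year


def doubling_time_years(years: List[float], values: List[float]) -> float:
    slope, _, _ = fit_log2_trend(years, values)
    if slope <= 0:
        raise ValueError("series is not growing; doubling time is undefined")
    return 1.0 / slope


def extrapolate(years: List[float], values: List[float], target_year: float) -> float:
    """Project the fitted exponential to target_year (centered on the mean for stability)."""
    slope, mean_x, mean_y = fit_log2_trend(years, values)
    return 2.0 ** (mean_y + slope * (target_year - mean_x))


if __name__ == "__main__":
    # Synthetic series (not real data): starts at 1e20 in 2020 and doubles every 0.5 years.
    years = [2020.0, 2020.5, 2021.0, 2021.5, 2022.0]
    values = [1e20 * 2 ** ((y - 2020.0) / 0.5) for y in years]
    print(doubling_time_years(years, values))  # approximately 0.5 years
    print(f"{extrapolate(years, values, 2023.0):.3g}")  # 6.4e+21
```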

The authors caution against techno-solutionism, acknowledging that TAIG tools can be dual-use and that social, political, and ethical considerations are paramount. The paper aims to be a resource for technical researchers and funders to identify impactful areas where their expertise can contribute to more effective AI governance. An appendix provides a concise policy brief summarizing key takeaways and recommendations.