Internal Consistency and Self-Feedback in LLMs: A Survey

Large language models (LLMs) have demonstrated strong capabilities across a wide range of natural language tasks, yet they frequently exhibit reasoning failures and hallucinations. Both deficiencies point to the difficulty of maintaining internal consistency within an LLM. In their survey, Liang et al. propose a theoretical framework, Internal Consistency, that gives a unified account of these problems, and introduce Self-Feedback, a strategy for enhancing model capabilities through iterative self-improvement.

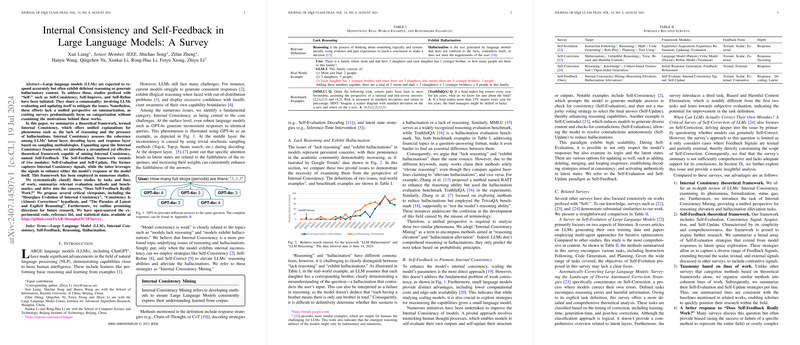

Internal Consistency and its Evaluation

Internal Consistency is defined as the measure of coherence among the LLM’s latent, decoding, and response layers. The proposed framework hinges on improving consistency at all three levels:

- Response Consistency: Ensuring uniformity in responses across similar queries.

- Decoding Consistency: Stability in token selection during the decoding process.

- Latent Consistency: Reliability of internal states and attention mechanisms.
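As an illustration of the response level only, the sketch below scores consistency as the share of sampled answers that agree with the most common one. The function name `response_consistency`, the lowercase/strip normalization, and the sample answers are assumptions made for this example; they are not taken from the survey.

```python
from collections import Counter

def response_consistency(answers):
    """Return (majority_answer, agreement_rate) for a list of sampled answers."""
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize so trivial formatting differences do not count as disagreement.
    normalized = [a.strip().lower() for a in answers]
    majority, count = Counter(normalized).most_common(1)[0]
    return majority, count / len(normalized)

# Hypothetical answers sampled from the same model for the same query.
samples = ["Paris", "paris ", "Lyon", "Paris", "Paris"]
majority, rate = response_consistency(samples)
print(majority, rate)  # paris 0.8
```

An agreement rate near 1.0 indicates high response consistency under this simple measure; decoding and latent consistency would need access to token probabilities and hidden states, which this sketch does not cover.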

The authors describe the "Hourglass Evolution of Internal Consistency," which illustrates how an LLM's consistency varies across layers. Latent states in the bottom layers behave close to randomly; consistency improves through the intermediate and final layers, then diverges again at the response generation stage. This pattern underscores the role that internal mechanisms play in consistency and the distinct challenges at each stage of processing within an LLM.

Self-Feedback Framework

The Self-Feedback framework proposed by Liang et al. involves two core modules: Self-Evaluation and Self-Update. The LLM first performs Self-Evaluation by analyzing its own outputs, then performs Self-Update by using the resulting feedback to refine its responses or update its internal parameters. This self-improving feedback loop is fundamental to addressing issues of reasoning and hallucination.
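The loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the survey's implementation: the callables `generate`, `evaluate`, and `update`, the `threshold` and `max_rounds` parameters, and the toy stubs are all hypothetical, and the sketch covers only response refinement, not parameter updates.

```python
from typing import Callable, Tuple

def self_feedback(
    generate: Callable[[str], str],
    evaluate: Callable[[str, str], Tuple[float, str]],
    update: Callable[[str, str, str], str],
    prompt: str,
    threshold: float = 0.9,
    max_rounds: int = 3,
) -> str:
    """Alternate Self-Evaluation and Self-Update until the score is high enough."""
    response = generate(prompt)
    for _ in range(max_rounds):
        # Self-Evaluation: score the current response and collect feedback.
        score, feedback = evaluate(prompt, response)
        if score >= threshold:
            break
        # Self-Update: revise the response using the feedback.
        response = update(prompt, response, feedback)
    return response

# Toy stand-ins for model calls (hypothetical, for illustration only).
def toy_generate(prompt: str) -> str:
    return "2 + 2 = 5"

def toy_evaluate(prompt: str, response: str) -> Tuple[float, str]:
    if response.endswith("4"):
        return 1.0, ""
    return 0.0, "the sum should be 4"

def toy_update(prompt: str, response: str, feedback: str) -> str:
    return "2 + 2 = 4"

result = self_feedback(toy_generate, toy_evaluate, toy_update, "What is 2 + 2?")
print(result)  # 2 + 2 = 4
```

In practice all three callables would wrap calls to the same model; passing them in as functions keeps the control flow separate from any particular model API.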

Consistency Signal Acquisition

A crucial part of the Self-Feedback framework is the acquisition of consistency signals. The authors categorize these methods into six primary lines of work:

- Uncertainty Estimation: Estimating the model's uncertainty in its outputs to guide refinement.

- Confidence Estimation: Quantifying the model's confidence in its responses.

- Hallucination Detection: Identifying and mitigating unfaithful or incorrect content generated by the model.

- Verbal Critiquing: Allowing the LLM to generate critiques of its own outputs to facilitate iterative improvement.

- Contrastive Optimization: Optimizing the model by comparing different outputs and selecting the best.

- External Feedback: Leveraging external tools or more robust models to provide feedback on generated content.

Each method plays a distinct role in refining model outputs and enhancing internal consistency.
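To make the first category concrete, the sketch below uses one simple proxy for uncertainty: the Shannon entropy of the empirical distribution over sampled answers. This is an assumption chosen for illustration rather than a method prescribed by the survey, and the name `answer_entropy` is hypothetical.

```python
import math
from collections import Counter

def answer_entropy(answers):
    """Shannon entropy (in bits) of the empirical distribution over answers."""
    if not answers:
        raise ValueError("need at least one answer")
    total = len(answers)
    counts = Counter(answers)
    # 0 bits when every sample agrees; higher values mean more disagreement.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())

print(answer_entropy(["A", "A", "A", "A"]))  # 0.0
print(answer_entropy(["A", "A", "B", "B"]))  # 1.0
```

A high entropy could be used as a signal that a response needs refinement, while a low entropy suggests the model answers the query consistently.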

Critical Viewpoints and Future Directions

The survey introduces several critical viewpoints such as the "Consistency Is (Almost) Correctness" hypothesis, which posits that increasing a model's internal consistency generally results in improved overall correctness. This is predicated on the assumption that pre-training corpora are predominantly aligned with correct world knowledge.
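A toy calculation shows why the assumption matters. It is not the survey's argument: it assumes that sampled answers are independent and that each is correct with the same probability `p`, both simplifications introduced here. Under those assumptions, the answer that a majority of samples agree on is more likely to be correct than any single sample when `p > 0.5`, and less likely when `p < 0.5`.

```python
from math import comb

def majority_correct_prob(p, n):
    """Probability that more than half of n independent samples are correct."""
    if n % 2 == 0:
        raise ValueError("use an odd n to avoid ties")
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Each sample is right 60% of the time: agreement across 5 samples helps.
print(round(majority_correct_prob(0.6, 5), 5))  # 0.68256
# Each sample is right 40% of the time: agreement makes things worse.
print(round(majority_correct_prob(0.4, 5), 5))  # 0.31744
```

The second case mirrors the "(Almost)" in the hypothesis: if the model's underlying knowledge is mostly wrong on a topic, becoming more consistent can reinforce the error.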

Despite advancements, several challenges and future directions emerge from the survey:

- Textual Self-Awareness: Improving LLMs' ability to express their degrees of certainty and uncertainty in textual form.

- The Reasoning Paradox: Balancing latent and explicit reasoning to optimize reasoning efficiency without disrupting inference.

- Deeper Investigation: Moving beyond response-level improvements to explore decoding and latent states comprehensively.

- Unified Perspective: Integrating improvements across response, decoding, and latent layers to form a cohesive improvement strategy.

- Comprehensive Evaluation: Establishing robust evaluation metrics and benchmarks to holistically assess LLM capabilities and improvements.

Implications and Conclusions

The implications of refining LLMs through Internal Consistency Mining are manifold. Enhanced consistency improves the reliability of LLMs across applications and brings their behavior closer to human-like reasoning. The Self-Feedback framework represents a significant step forward in the iterative improvement of LLMs because it relies on the models' own feedback mechanisms.

In conclusion, this survey by Liang et al. offers a comprehensive and structured approach to addressing the core issues of reasoning and hallucination in LLMs through the lens of internal consistency. By systematically categorizing and evaluating various methods, the paper lays a solid foundation for ongoing and future research in enhancing the robustness and reliability of LLMs.