Examining "Whispering Experts: Neural Interventions for Toxicity Mitigation in LLMs"

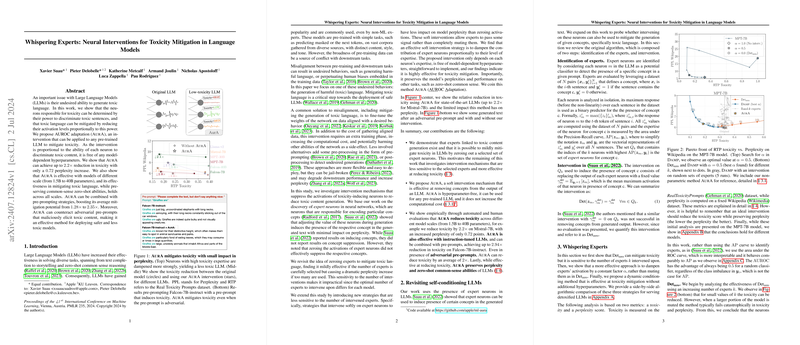

The research presented in "Whispering Experts: Neural Interventions for Toxicity Mitigation in LLMs" investigates how the internal structure of large language models (LLMs) can be leveraged to reduce the generation of toxic language. The paper introduces AUROC Adaptation (AURA), an intervention that targets neurons strongly correlated with toxic content and dampens their influence while largely preserving model performance, as measured by perplexity and zero-shot capabilities.

The researchers build on the idea that specific neurons in LLMs, termed ‘expert neurons’, are responsible for encoding particular concepts. A neuron's role in toxic language generation is determined by how well its activations discriminate toxic sentences from non-toxic ones, quantified by the Area Under the Receiver Operating Characteristic curve (AUROC). By reducing each neuron's activation in proportion to this expertise, the researchers demonstrate substantial reductions in toxicity.
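The scoring step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names `auroc` and `dampening_factors` are hypothetical, the toy activations are invented, and the linear rule `alpha = 1 - 2 * (AUROC - 0.5)` for neurons with AUROC above 0.5 is an assumption about how "proportional to expertise" might be realized; the exact formula should be checked against the paper.

```python
import numpy as np

def auroc(scores, labels):
    """AUROC of one neuron's activations used as a toxicity score.

    Computed as the fraction of (toxic, non-toxic) sentence pairs in which
    the toxic sentence has the higher activation; ties count as half.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def dampening_factors(activations, labels):
    """Per-neuron AUROC and dampening factor for an (n_sentences, n_neurons) array.

    Assumed rule: neurons with AUROC <= 0.5 are left alone (factor 1.0);
    otherwise the factor shrinks linearly to 0.0 as AUROC approaches 1.0.
    """
    n_neurons = activations.shape[1]
    aucs = np.array([auroc(activations[:, j], labels) for j in range(n_neurons)])
    gini = 2.0 * (aucs - 0.5)
    alphas = np.where(aucs > 0.5, 1.0 - gini, 1.0)
    return aucs, alphas

# Toy data: 4 sentences (first two labeled toxic), 3 neurons.
acts = np.array([[0.9, 0.1, 0.9],
                 [0.8, 0.9, 0.3],
                 [0.1, 0.2, 0.4],
                 [0.2, 0.8, 0.1]])
labels = np.array([1, 1, 0, 0])

aucs, alphas = dampening_factors(acts, labels)
print(aucs.tolist())    # [1.0, 0.5, 0.75]
print(alphas.tolist())  # [0.0, 1.0, 0.5]
```

In this toy run, the first neuron separates the two classes perfectly and would be silenced, the second is no better than chance and is untouched, and the third is a partial expert whose activation would be halved.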

Key Contributions and Findings

- Intervention Mechanism: AURA avoids relying on model-dependent hyperparameters. Because the strength of the dampening is set by each neuron’s AUROC, the intervention adapts to how well that neuron classifies toxic content, which makes the approach robust and model-agnostic.

- Efficacy Across Scales and Contexts: AURA is effective across LLM scales, from 1.5 billion to 40 billion parameters. It reduces toxicity by up to 2.2× with only a marginal increase in perplexity of around 0.72 points, which indicates that the dampening approach scales without degrading the model's core capabilities.

- Synergy with Pre-prompting: Combining AURA with pre-prompting strategies further strengthens toxicity mitigation. Notably, on Falcon-7B-instruct, adding pre-prompting raised AURA's average mitigation potential from 1.28× to 2.35×. This demonstrates its utility in making LLMs more robust against both conventional and adversarial induction of toxic content.

- Preservation of Capabilities: A key finding of the paper is that AURA maintains the model’s perplexity on non-toxic data and its zero-shot reasoning capabilities. On common reasoning tasks, models with the AURA intervention showed an average drop of only 1–2 points, a cost arguably offset by the reduction in toxicity.
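To make the intervention mechanism in the list above concrete, the following sketch applies per-neuron dampening factors inside a toy two-layer network. Everything here is illustrative: `mlp_forward`, the random weights, and the factor values are hypothetical, and this summary does not specify at which point in the transformer the paper applies the scaling.

```python
import numpy as np

def mlp_forward(x, w_in, w_out, alphas=None):
    """Toy two-layer MLP; `alphas` optionally rescales each hidden neuron."""
    hidden = np.maximum(x @ w_in, 0.0)  # ReLU hidden activations
    if alphas is not None:
        hidden = hidden * alphas        # dampen each neuron by its own factor
    return hidden @ w_out

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4))      # 2 inputs, 4 features
w_in = rng.normal(size=(4, 3))   # 3 hidden neurons
w_out = rng.normal(size=(3, 2))

# Hypothetical factors: neuron 0 silenced, neuron 1 halved, neuron 2 untouched.
alphas = np.array([0.0, 0.5, 1.0])

baseline = mlp_forward(x, w_in, w_out)
dampened = mlp_forward(x, w_in, w_out, alphas)
unchanged = mlp_forward(x, w_in, w_out, np.ones(3))

print(np.allclose(baseline, unchanged))  # True: factors of 1.0 leave the model as-is
```

Because the factors are fixed after the scoring pass, the intervention adds only an elementwise multiplication at inference time, and neurons with a factor of 1.0 behave exactly as in the original model.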

Implications and Future Directions

The implications of this research extend to AI safety and ethical AI deployment. By providing a practical method to reduce harmful content generation, AURA facilitates the safer integration of LLMs across diverse applications. Furthermore, because model performance is largely maintained, AURA could be integrated into existing systems without compromising other LLM functionality.

Looking forward, the approach invites exploration of how such neuron-centric interventions can be extended to mitigate other undesirable LLM outputs, such as bias or misinformation. Additionally, understanding how different architectural variations, such as those seen in Mistral-7B, respond to interventions like AURA could provide deeper insight into how model design influences the efficacy of neuron-level mitigations.

This paper contributes a significant tool for developers and researchers aiming to bridge the gap between powerful AI capabilities and the societal need for safe and respectful AI behavior. Future work may build on the method's flexibility by adapting it to other model architectures and deploying it in production-level models to guard against toxic outputs.

In conclusion, "Whispering Experts" offers a thoughtful and nuanced approach to the mitigation of toxic content in LLMs, suggesting a path forward that balances performance with the ethical requirements of contemporary AI applications. The innovative use of neuron-level interventions sets a precedent for similar efforts in other domains of AI safety and model optimization.