Graph-Based Captioning: Enhancing Visual Descriptions by Interconnecting Region Captions

This paper introduces Graph-Based Captioning (GBC), an annotation strategy for describing complex scenes in vision-language datasets. Instead of a single plain-text description, GBC uses a structured, graph-based format that retains the flexibility of natural language while encoding hierarchical and relational information among the entities in an image.

Overview and Methodology

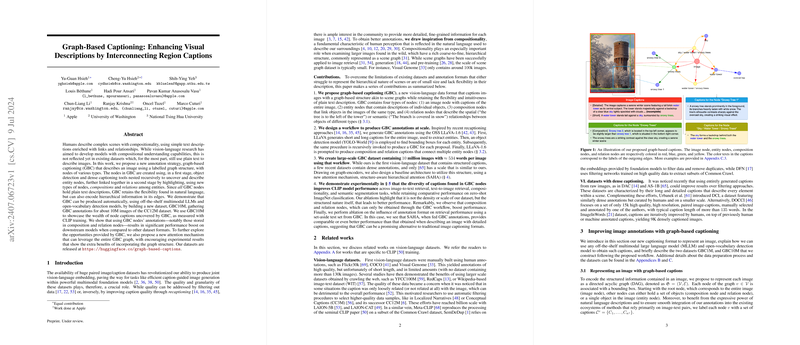

Graph-Based Captioning (GBC): GBC uses a labeled graph structure to describe images. Each node in the graph represents either the entire image, an individual object, a composition of similar objects, or a relationship between different objects. Traditional captions often fail to capture the intricate relationships and compositional structures present in complex scenes; the graph makes them explicit. The nodes are generated in a multi-stage process involving object detection and dense captioning, producing a comprehensive and interconnected description of the image.
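To make the node types concrete, the following is a minimal sketch of how such a labeled graph could be held in memory. The class names (`GBCNode`, `GBCGraph`), the field names, the edge labels, and the toy captions are all illustrative assumptions; they are not the storage format of the released annotations.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# The four node kinds named in the text; the string labels are illustrative.
NODE_KINDS = ("image", "entity", "composition", "relation")


@dataclass
class GBCNode:
    node_id: str
    kind: str
    caption: str
    # Outgoing labeled edges as (edge label, child node id) pairs.
    edges: List[Tuple[str, str]] = field(default_factory=list)


@dataclass
class GBCGraph:
    nodes: Dict[str, GBCNode] = field(default_factory=dict)

    def add_node(self, node_id: str, kind: str, caption: str) -> GBCNode:
        if kind not in NODE_KINDS:
            raise ValueError(f"unknown node kind: {kind!r}")
        node = GBCNode(node_id, kind, caption)
        self.nodes[node_id] = node
        return node

    def add_edge(self, parent_id: str, label: str, child_id: str) -> None:
        if child_id not in self.nodes:
            raise KeyError(child_id)
        self.nodes[parent_id].edges.append((label, child_id))

    def children(self, node_id: str) -> List[str]:
        return [child for _, child in self.nodes[node_id].edges]


# Toy example (hypothetical captions): two dogs next to a bench.
graph = GBCGraph()
graph.add_node("image", "image", "Two dogs sit beside a wooden bench in a park.")
graph.add_node("dogs", "composition", "Two dogs sit side by side.")
graph.add_node("dog_1", "entity", "A small white terrier.")
graph.add_node("dog_2", "entity", "A large brown retriever.")
graph.add_node("bench", "entity", "A weathered wooden bench.")
graph.add_node("dogs_bench", "relation", "The dogs sit to the right of the bench.")

graph.add_edge("image", "dogs", "dogs")
graph.add_edge("image", "bench", "bench")
graph.add_edge("image", "dogs beside bench", "dogs_bench")
graph.add_edge("dogs", "smaller dog", "dog_1")
graph.add_edge("dogs", "larger dog", "dog_2")
graph.add_edge("dogs_bench", "dogs", "dogs")
graph.add_edge("dogs_bench", "bench", "bench")
```

In this sketch every caption stays free-form text, while the edges carry the hierarchy (image to composition to entity) and the relations (a relation node pointing at the nodes it connects).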

Automated Dataset Creation: The paper demonstrates the feasibility of producing GBC annotations at scale using off-the-shelf multimodal LLMs (MLLMs) and open-vocabulary detection models. Specifically, the authors annotated approximately 10 million images from the CC12M dataset, creating the GBC10M dataset. The process involves generating both short and long captions for entire images, extracting entities via object detection, and recursively producing GBC annotations.
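The recursive part of this process can be sketched as follows. This is only an outline under stated assumptions: `caption_fn` and `detect_fn` are hypothetical stand-ins for the MLLM captioner and the open-vocabulary detector, the toy lookup table replaces real model calls, the depth limit is an assumed stopping rule, and each region gets a single caption here even though the source describes both short and long captions for the whole image.

```python
from typing import Callable, Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1)
Detections = List[Tuple[str, Box]]


def annotate(
    box: Box,
    caption_fn: Callable[[Box], str],
    detect_fn: Callable[[Box, str], Detections],
    max_depth: int = 2,
    depth: int = 0,
) -> Dict:
    """Caption a region, detect the entities its caption mentions, then recurse."""
    node = {"box": box, "caption": caption_fn(box), "children": []}
    if depth < max_depth:  # assumed stopping rule for this sketch
        for label, child_box in detect_fn(box, node["caption"]):
            child = annotate(child_box, caption_fn, detect_fn, max_depth, depth + 1)
            child["label"] = label  # edge label linking parent to child
            node["children"].append(child)
    return node


# Toy stand-ins for the models: a fixed lookup from region to
# (caption, detected entities). Real models would inspect pixels.
TOY_SCENE: Dict[Box, Tuple[str, Detections]] = {
    (0, 0, 100, 100): (
        "a dog lying on a sofa",
        [("dog", (10, 40, 50, 90)), ("sofa", (0, 30, 100, 100 - 1))],
    ),
    (10, 40, 50, 90): (
        "a brown dog wearing a red collar",
        [("collar", (25, 50, 35, 55))],
    ),
    (0, 30, 100, 99): ("a grey fabric sofa", []),
    (25, 50, 35, 55): ("a red collar with a metal tag", []),
}


def toy_caption(box: Box) -> str:
    return TOY_SCENE[box][0]


def toy_detect(box: Box, caption: str) -> Detections:
    return TOY_SCENE[box][1]


tree = annotate((0, 0, 100, 100), toy_caption, toy_detect)
```

Calling `annotate` on the full image yields a nested dictionary whose root holds the image caption and whose children hold region captions, one level per recursion step.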

New Attention Mechanism: The authors propose Structure-Aware Hierarchical Attention (SAHA) to exploit the graph structure during training. SAHA interleaves standard transformer blocks with custom cross-attention layers that incorporate hierarchical and relational information from the GBC graph, yielding more robust and context-aware text embeddings.
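The idea of a graph-aware cross-attention layer can be illustrated with a deliberately simplified sketch. It assumes that each node's caption tokens attend to the caption tokens of that node's children, and it uses a single head with no learned projections; the attention pattern, the function names, and the residual connection are assumptions made for illustration, not the authors' implementation.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: List[Vector], context: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product cross-attention plus a residual connection.

    Simplification: queries, keys, and values are the raw embeddings
    (identity projections), so there are no learned parameters.
    """
    if not context:  # leaf node: nothing to attend to, pass through
        return [q[:] for q in queries]
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in context]
        weights = softmax(scores)
        mixed = [sum(w * k[i] for w, k in zip(weights, context)) for i in range(dim)]
        out.append([qi + mi for qi, mi in zip(q, mixed)])
    return out


def structure_aware_layer(
    tokens: Dict[str, List[Vector]],
    children: Dict[str, List[str]],
) -> Dict[str, List[Vector]]:
    """Let every node's caption tokens attend to its children's caption tokens."""
    updated = {}
    for node_id, node_tokens in tokens.items():
        context = [t for child in children.get(node_id, []) for t in tokens[child]]
        updated[node_id] = cross_attend(node_tokens, context)
    return updated


# Toy input: one token per caption, two-dimensional embeddings.
tokens = {"image": [[1.0, 0.0]], "dog": [[0.0, 1.0]]}
children = {"image": ["dog"]}
updated = structure_aware_layer(tokens, children)
```

In a full model, layers of this kind would sit between ordinary self-attention blocks, so that information from child captions flows into the embedding of the parent caption.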

Experimental Results and Insights

Evaluation Setup: GBC was evaluated through CLIP model training on the GBC10M dataset, examining various downstream tasks such as zero-shot classification, image-to-text and text-to-image retrieval, compositional understanding, and semantic segmentation. Models trained with GBC annotations generally performed better across these benchmarks compared to those trained with traditional annotation formats.

Key Findings:

- Performance Boost: Using GBC annotations resulted in significant performance improvements, particularly in retrieval tasks. This highlights the importance of incorporating detailed and structured information in vision-language models.

- Graph Structure Benefits: The hierarchical and relational information encoded in GBC graphs provided additional benefits, as evidenced by the models' enhanced performance on tasks requiring detailed contextual understanding.

- New Attention Mechanism: The SAHA blocks effectively utilized the graph structure, improving retrieval performance and demonstrating the potential of this new attention mechanism in handling structured annotations.

Ablation Studies: Several ablation studies were conducted to understand the contributions of different components of the GBC framework. These included:

- Impact of Different Caption Types: Relation and composition captions were shown to significantly boost performance.

- Objective Function Analysis: The multi-positive contrastive loss proved crucial for leveraging multiple captions per image.

- Graph Structure Importance: The underlying graph structure was validated as a critical component for capturing and utilizing complex relationships in images.
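As an illustration of the objective named above, the following sketch shows one common form of a multi-positive contrastive loss, in which the log-likelihood is averaged over all captions that belong to the same image. The exact formulation used in the paper may differ; the function names, the temperature value, and the restriction to the image-to-text direction are assumptions of this sketch.

```python
import math
from typing import List, Sequence


def log_softmax(row: Sequence[float]) -> List[float]:
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def multi_positive_loss(
    sim: Sequence[Sequence[float]],
    owner: Sequence[int],
    temperature: float = 0.07,
) -> float:
    """Image-to-text contrastive loss with several positive captions per image.

    sim[i][j] is the similarity between image i and caption j;
    owner[j] is the index of the image that caption j describes.
    """
    total = 0.0
    for i, row in enumerate(sim):
        log_probs = log_softmax([s / temperature for s in row])
        positives = [j for j, o in enumerate(owner) if o == i]
        if not positives:
            raise ValueError(f"image {i} has no positive caption")
        # Average the negative log-likelihood over all positives of image i.
        total += -sum(log_probs[j] for j in positives) / len(positives)
    return total / len(sim)


# Two images, two captions each (for example an image caption and a region caption).
owner = [0, 0, 1, 1]
aligned = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
misaligned = [[0.0, 0.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
loss_aligned = multi_positive_loss(aligned, owner)
loss_misaligned = multi_positive_loss(misaligned, owner)
```

With a single positive per image this reduces to the standard CLIP-style image-to-text loss; a symmetric text-to-image term would normally be added and is omitted here for brevity.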

Implications and Future Developments

The introduction of GBC has several practical and theoretical implications for the development of vision-language models. By providing a more detailed and structured representation of visual scenes, GBC has the potential to enhance various applications, from image retrieval and generation to detailed scene understanding and dense prediction tasks. Moreover, the methodology for creating GBC annotations could be applied to other large-scale vision-language datasets, fostering the development of more sophisticated multimodal models.

Future Directions: Possible future research avenues include:

- Refinements to Annotation Processes: Enhancing the object detection and captioning models to further reduce errors and biases.

- Expansion to Other Domains: Adapting GBC to specialized fields such as scientific imagery or abstract art, which may require different annotation strategies.

- Broader Applications: Extending the application of GBC annotations to areas like text-to-image generation, where the detailed and structured descriptions could significantly improve generative models.

In conclusion, Graph-Based Captioning offers a richer and more interconnected way to describe complex scenes in vision-language datasets. Combined with the SAHA attention mechanism, which lets models use the graph structure during training, it opens new directions for the development and application of multimodal AI systems.