Empirical Comparison of Vocabulary Expansion and Initialization Approaches for LLMs

The paper "An Empirical Comparison of Vocabulary Expansion and Initialization Approaches for LLMs" explores how to adapt pre-trained language models (LMs) such as RoBERTa and LLaMA2 to multiple languages by expanding their vocabulary and initializing the new embeddings effectively. It addresses two primary challenges: extending the tokenizer’s vocabulary to better cover the target languages, and initializing the embeddings for the new vocabulary items.

Problem Context and Objectives

LLMs, although highly effective for English, often underperform for other languages due to limited vocabulary coverage in the tokenizer. The authors tackle this issue by introducing new vocabulary items and investigating various initialization strategies for these new embeddings. Current embedding initialization techniques, such as cross-lingual embeddings, although prevalent, lack a strong theoretical basis and comparative analysis against simpler methods. This paper aims to bridge this gap by providing a theoretical foundation for good initialization strategies and introducing Constrained Word2Vec (CW2V), a novel method that forgoes the need for cross-lingual embeddings.

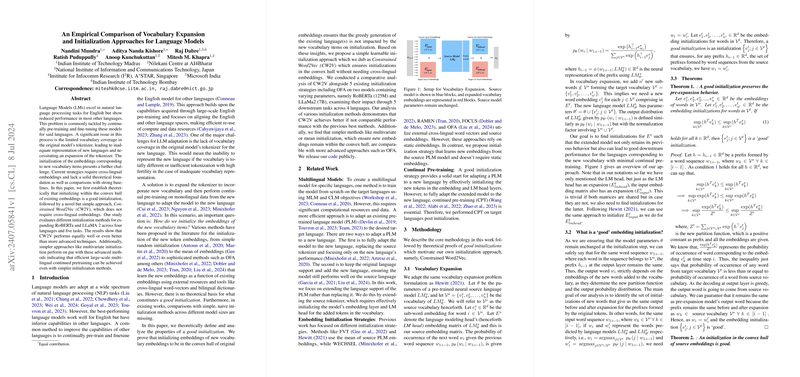

Methodological Approach

The authors establish theoretically that embeddings within the convex hull of the existing embeddings provide a good initialization. They introduce CW2V, which enforces this constraint without needing cross-lingual data. CW2V learns the target embeddings by transforming the source embeddings through a weight matrix, ensuring that the new embeddings lie within the convex hull of the source embeddings. The paper compares CW2V against five initialization strategies (OFA, Multivariate, Univariate, Mean, and Random) across two models (RoBERTa and LLaMA2), four languages (Hindi, Tamil, Russian, German), and five tasks (XNLI, NER, QA, MT, XLSUM).
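To make the convex hull constraint concrete, the following is a minimal sketch, not the authors' implementation. It assumes the weight matrix is parameterized by unconstrained logits that are passed through a row-wise softmax, so every row is non-negative and sums to one; that parameterization, the function name `convex_init`, and the toy dimensions are illustrative assumptions. Under those assumptions each target embedding is a convex combination of source embeddings and therefore lies inside their convex hull.

```python
import numpy as np


def convex_init(source_emb, logits):
    """Map unconstrained logits to embeddings inside the hull of source_emb.

    source_emb: (n_source, dim) existing embedding matrix.
    logits:     (n_target, n_source) free parameters (hypothetical; in a
                CW2V-style setup these would be learned, here they are random).
    """
    # Row-wise softmax: every row is non-negative and sums to 1.
    shifted = logits - logits.max(axis=1, keepdims=True)
    weights = np.exp(shifted)
    weights /= weights.sum(axis=1, keepdims=True)
    # Each target embedding is a convex combination of source embeddings.
    return weights @ source_emb, weights


rng = np.random.default_rng(0)
source_emb = rng.normal(size=(6, 4))   # toy source vocabulary: 6 tokens, dim 4
logits = rng.normal(size=(3, 6))       # 3 new tokens to initialize
target_emb, weights = convex_init(source_emb, logits)
```

Because the constraint is built into the parameterization, any values of `logits` produce a valid initialization; training would only change where inside the hull each new embedding lands.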

Experimental Setup

The tokenizer was expanded by creating a unified target tokenizer with SentencePiece and merging subwords from the target languages with those of the LLaMA2 tokenizer. The merged vocabulary significantly reduces fertility scores (the average number of tokens produced per word) for the target languages, so text in those languages is represented more compactly. CW2V and the baseline initializations were evaluated both before and after continual pre-training (CPT) on the downstream tasks, using task-specific metrics.
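As a rough illustration of what a fertility score measures, the sketch below computes the average number of tokens per whitespace-separated word. The greedy longest-match tokenizer, the toy vocabularies, and the example sentence are all assumptions made for illustration; they stand in for a real SentencePiece model and are not taken from the paper.

```python
def greedy_tokenize(word, vocab):
    """Toy tokenizer: greedy longest match, falling back to single characters."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining substring first; a single character
        # always matches, so the loop is guaranteed to advance.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab or j == i + 1:
                tokens.append(word[i:j])
                i = j
                break
    return tokens


def fertility(tokenize, text):
    """Average number of tokens produced per whitespace-separated word."""
    words = text.split()
    if not words:
        raise ValueError("text contains no words")
    return sum(len(tokenize(w)) for w in words) / len(words)


text = "Sprachmodelle lernen"
base_vocab = set()                                 # falls back to characters
expanded_vocab = {"Sprachmodelle", "lernen"}       # hypothetical added subwords

base_fertility = fertility(lambda w: greedy_tokenize(w, base_vocab), text)
expanded_fertility = fertility(lambda w: greedy_tokenize(w, expanded_vocab), text)
```

In this toy setup the expanded vocabulary covers each word with a single token, so its fertility is lower than that of the character-level fallback; a real comparison would run both tokenizers over a held-out corpus in each target language.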

Results

Initial findings indicate that CW2V preserves the pre-expansion performance for English tasks better than other methods for both RoBERTa and LLaMA2. Specifically, for RoBERTa, CW2V is on par with or slightly inferior to OFA in multilingual tasks, but significantly better in preserving English performance. For LLaMA2, CW2V outperforms OFA across most multilingual and generative tasks while showing comparable performance on English tasks.

With CPT, both RoBERTa and LLaMA2 models initialized via CW2V converge swiftly, matching and sometimes surpassing the performance of more sophisticated methods like OFA. Interestingly, simpler methods such as Multivariate initialization, whose embeddings lie within the convex hull with high probability, also demonstrate competitive performance, suggesting that a strong initialization strategy does not always require complex methodologies.
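The simpler baselines can be sketched in a few lines. The version below assumes that Mean initialization copies the average source embedding and that Multivariate initialization samples from a Gaussian fitted to the mean and covariance of the source embeddings; these definitions, the function names, and the toy sizes are assumptions for illustration and may differ from the paper's exact setup.

```python
import numpy as np


def mean_init(source_emb, n_new):
    """Initialize every new embedding to the mean of the source embeddings."""
    return np.tile(source_emb.mean(axis=0), (n_new, 1))


def multivariate_init(source_emb, n_new, rng):
    """Sample new embeddings from a Gaussian fitted to the source embeddings."""
    mu = source_emb.mean(axis=0)
    cov = np.cov(source_emb, rowvar=False)  # (dim, dim) sample covariance
    return rng.multivariate_normal(mu, cov, size=n_new)


rng = np.random.default_rng(0)
source_emb = rng.normal(size=(200, 8))  # toy source vocabulary: 200 tokens, dim 8
mean_emb = mean_init(source_emb, 5)
multi_emb = multivariate_init(source_emb, 5, rng)
```

The mean of the source embeddings is itself a convex combination with equal weights, so Mean initialization always lies in the hull, whereas Gaussian samples only do so with some probability.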

Implications

The theoretical result that good initializations lie within the convex hull of the existing embeddings suggests that simpler, computationally cheaper methods can be just as effective for multilingual adaptation. Practically, methods like CW2V can significantly improve how well a pre-trained LM adapts to new languages without extensive cross-lingual resources. However, the initial phases of CPT tend to hurt performance on English tasks, and prolonged training is needed to mitigate this effect, which points to a delicate balance between multilingual adaptation and preserving source-language capabilities.

Future Directions

This paper lays the groundwork for further research into more efficient and theoretically grounded initialization strategies. Future work can explore broader sets of languages and tasks, finer adjustments to CPT to better balance source and target language performance, and the potential integration of these findings with dynamic vocabulary adaptation methods.

By addressing the inherent challenges in vocabulary expansion and providing empirical evidence for the efficacy of simple yet theoretically sound methods, this paper contributes significantly to the ongoing development of robust, multilingual LLMs.