Understanding Alignment in Multimodal LLMs: A Comprehensive Study

The paper under examination explores the underexplored domain of alignment within Multimodal LLMs (MLLMs). While alignment techniques have proven beneficial for LLMs in reducing hallucinations and enhancing response alignment with human preferences, similar methods have not been comprehensively studied within MLLMs.

Key Contributions and Findings

1. Introduction to MLLM Alignment Challenges:

The authors initially highlight the unique challenges MLLMs face, such as generating responses inconsistent with image content, commonly known as hallucinations. MLLMs not only need to overcome the typical challenges observed in LLMs but also need to ensure visual data fidelity.

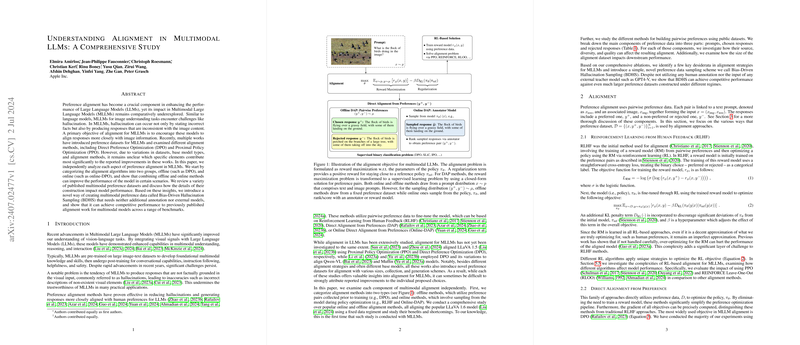

2. Categorization and Analysis of Alignment Methods:

The paper categorizes alignment algorithms into offline methods (e.g., Direct Preference Optimization - DPO) and online methods (e.g., Proximal Policy Optimization - PPO). The authors meticulously analyze the benefits and limitations of each approach when applied to MLLMs. They show empirical evidence that combining offline and online methods leads to performance improvements in certain scenarios.

3. Exploration of Multimodal Preference Datasets:

Various existing multimodal preference datasets are reviewed, with an in-depth analysis of how their construction impacts MLLM performance. The paper identifies key components of these datasets and their contribution to the alignment process. Notably, the introduction of a Bias-Driven Hallucination Sampling (BDHS) method improves performance and does not require additional annotations or external models.

Experimental Findings

1. BDHS Methodology:

The paper presents BDHS, which induces model hallucinations by restricting access to parts of the image embeddings. BDHS achieves competitive performance against previously published datasets across various benchmarks.

2. Comparative Analysis with Other Methods:

Results indicate that offline methods like DPO and BDHS generally outperform online methods like PPO, which often suffer from the reward model's generalization issues. The hybrid Mixed-DPO method, combining both approaches, shows balanced improvements.

3. Dataset-Specific Results:

The paper also investigates the impact of the source and diversity of the preference data. It reveals that models trained on responses from diverse sources, even if smaller in size, often generalize better compared to those trained on homogeneous data. Additionally, the effectiveness of structured corruptions over merely sampled rejected responses is highlighted.

Practical and Theoretical Implications

1. Practical Implications:

For practitioners, the paper provides actionable insights into constructing effective preference datasets and choosing appropriate alignment methodologies. The BDHS approach, due to its minimal requirement for external resources, offers a practical and scalable solution for improving MLLM performance.

2. Theoretical Implications:

Theoretically, the paper advances understanding of how multimodal information can be better integrated and aligned within large-scale models. It sets a foundation for future investigations into the nuanced dynamics of vision-language integration, potentially guiding the development of more sophisticated alignment frameworks.

Future Directions

1. Enhanced Reward Models:

The paper suggests that better generalization in reward models could significantly improve the efficacy of RL-based alignment methods. Future work may explore incorporating more context-aware reward models or hybrid approaches combining symbolic and empirical rewards.

2. Benchmark Improvements:

The paper calls for the creation of more robust benchmarks for evaluating hallucinations. This would enable more accurate assessments of model performance and guide the refinement of alignment techniques.

3. Broader Applications:

Further research could extend these alignment strategies to more diverse multimodal tasks, including those involving video, audio, and other sensory inputs, thereby broadening the applicability of these findings.

In summary, this comprehensive paper on MLLM alignment not only provides novel methodologies like BDHS but also sets a strong precedent for future research endeavors. By detailing the nuances of preference data construction and alignment strategies, the paper makes a substantial contribution to the field of multimodal AI.