T-MAC: Enhancing Low-Bit LLM Deployment on Edge Devices Using Table Lookup

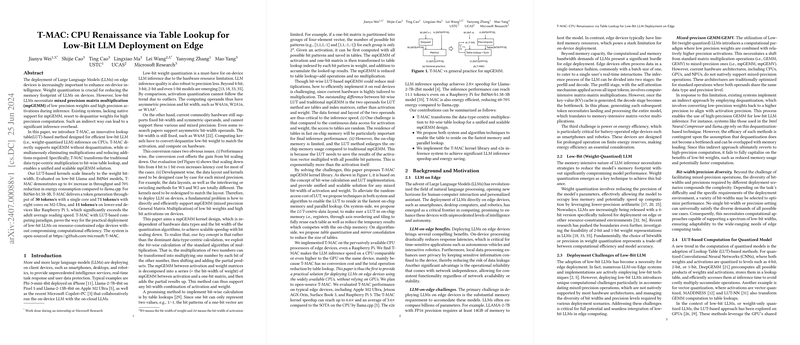

Deploying LLMs on edge devices is attracting growing interest because it promises better on-device intelligence and lower response latency. The paper presents T-MAC, a system that speeds up low-bit, weight-quantized LLMs on CPUs by replacing the usual dequantize-then-multiply computation with lookup table (LUT) operations.

Weight Quantization and Mixed Precision Challenges

Weight quantization is crucial for reducing the memory footprint of LLMs on edge devices. However, multiplying low-bit weights by higher-precision activations is computationally awkward. It requires mixed-precision General Matrix Multiplication (mpGEMM), which current hardware does not support natively. Existing systems therefore typically dequantize the weights first, an inefficient step that becomes a performance bottleneck.
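To make the dequantization overhead concrete, here is a minimal sketch of a dequantization-based dot product. The packing format (two unsigned 4-bit weights per byte, low nibble first), the single `scale` and `zero_point` per vector, and the function names are assumptions chosen for illustration, not the layout used by llama.cpp or any other system.

```python
def pack_int4(values):
    """Pack unsigned 4-bit integers (0..15) two per byte, low nibble first."""
    if len(values) % 2:
        raise ValueError("need an even number of values")
    return bytes(values[i] | (values[i + 1] << 4) for i in range(0, len(values), 2))


def dequant_dot(packed, scale, zero_point, activations):
    """Dot product that expands every 4-bit weight to a float before multiplying."""
    total = 0.0
    for i, byte in enumerate(packed):
        for j, q in enumerate((byte & 0x0F, byte >> 4)):
            w = scale * (q - zero_point)          # dequantize: unpack and convert
            total += w * activations[2 * i + j]   # then a full-precision multiply
    return total


packed = pack_int4([0, 15, 8, 3])
result = dequant_dot(packed, scale=0.5, zero_point=8, activations=[1.0, 2.0, 3.0, 4.0])
```

Every weight is unpacked, converted, and multiplied individually, so the work done per weight does not shrink as the weight bit-width shrinks.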

T-MAC Approach

T-MAC introduces a LUT-based method that performs mpGEMM directly, without dequantization and without multiplication operations. Its key idea is to turn traditional multiplications into bit-wise table lookups, which yields efficient and scalable matrix multiplication regardless of the bit-widths of weights and activations.
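The bit-wise lookup idea can be illustrated with a short sketch. It assumes unsigned integer weights split into one-bit planes and activations grouped four at a time; the group size `g`, the function names, and the inline index assembly are illustrative choices, not T-MAC's actual kernel.

```python
def build_luts(activations, g=4):
    """For each group of g activations, tabulate the sum for every g-bit pattern."""
    if len(activations) % g:
        raise ValueError("activation count must be a multiple of g")
    luts = []
    for start in range(0, len(activations), g):
        group = activations[start:start + g]
        table = [0.0] * (1 << g)
        for pattern in range(1, 1 << g):
            low = pattern & -pattern              # lowest set bit of the pattern
            k = low.bit_length() - 1              # which activation it selects
            table[pattern] = table[pattern ^ low] + group[k]
        luts.append(table)
    return luts


def lut_dot(q_weights, bits, luts, g=4):
    """Dot product of unsigned `bits`-bit weights with the activations behind `luts`."""
    total = 0.0
    for b in range(bits):                          # one pass per weight bit plane
        plane_sum = 0.0
        for gi, table in enumerate(luts):
            index = 0
            for k in range(g):                     # gather bit b of g weights
                index |= ((q_weights[gi * g + k] >> b) & 1) << k
            plane_sum += table[index]              # lookup replaces g multiplies
        total += plane_sum * (1 << b)              # weight the plane by 2**b
    return total


acts = [1.0, 2.0, 3.0, 4.0]
q = [0, 15, 8, 3]
lut_result = lut_dot(q, bits=4, luts=build_luts(acts))
reference = sum(w * a for w, a in zip(q, acts))
```

In this sketch the only multiplication left is the per-plane scaling by a power of two, which is a shift in integer arithmetic. A scale and zero point, if present, could be applied once to the final sum rather than to each weight.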

Performance Evaluation

T-MAC was evaluated on Llama and BitNet models. Compared to state-of-the-art implementations such as llama.cpp, it increased throughput by up to 4× and reduced energy consumption by 70%. Specifically, T-MAC achieved token generation throughputs of up to 71 tokens/s on high-end devices such as the M2-Ultra and 11 tokens/s on resource-constrained platforms such as the Raspberry Pi 5.

Technical Innovations

- Table Lookup Optimization: By precomputing the possible weight-activation product values and storing them in LUTs, T-MAC replaces multiplications with lookups, supported by optimized memory layouts and register management.

- Data Layout and Reduction Techniques: Techniques such as axis reordering and LUT-centric tiling let data be processed more efficiently, cutting redundant operations and improving both speed and memory footprint.

- System Implementation: T-MAC is implemented across a range of processors and edge devices and is released as open source for further development and integration.
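The table lookup and data layout ideas in the list above can be sketched as a matrix-vector product in which weights are converted to table indices once, ahead of time, and the activation tables are built once and shared by every output row. The `[row][bit][group]` layout, the group size `g`, and all function names are assumptions for illustration; the actual tiling and register-level layout in T-MAC are more involved.

```python
def pack_bit_planes(weight_rows, bits, g=4):
    """Offline step: turn rows of unsigned integer weights into g-bit LUT indices.

    The result is indexed as [row][bit][group].
    """
    packed = []
    for row in weight_rows:
        planes = []
        for b in range(bits):
            plane = []
            for start in range(0, len(row), g):
                index = 0
                for k, q in enumerate(row[start:start + g]):
                    index |= ((q >> b) & 1) << k
                plane.append(index)
            planes.append(plane)
        packed.append(planes)
    return packed


def build_tables(activations, g=4):
    """One table per activation group: entry p is the sum of activations selected by p."""
    tables = []
    for start in range(0, len(activations), g):
        group = activations[start:start + g]
        tables.append([
            sum(a for k, a in enumerate(group) if (p >> k) & 1)
            for p in range(1 << g)
        ])
    return tables


def lut_gemv(packed, activations, g=4):
    """Matrix-vector product using only lookups, additions, and power-of-two scaling."""
    tables = build_tables(activations, g)          # built once, reused by every row
    out = []
    for planes in packed:
        acc = 0.0
        for b, plane in enumerate(planes):
            acc += (1 << b) * sum(tables[gi][idx] for gi, idx in enumerate(plane))
        out.append(acc)
    return out


weights = [[1, 2, 3, 0], [3, 3, 3, 3]]             # 2-bit unsigned weights
packed = pack_bit_planes(weights, bits=2)
output = lut_gemv(packed, [1.0, 2.0, 3.0, 4.0])
```

Because the tables depend only on the activations, their construction cost is amortized over all rows of the weight matrix, and each row then needs `bits * K / g` lookups for `K` weights instead of `K` multiplications.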

Implications and Future Directions

The implications of T-MAC are twofold:

- Practically, it provides a framework for running low-bit LLMs on edge devices with competitive efficiency without relying heavily on GPUs.

- Theoretically, it opens avenues for research into more efficient computational paradigms and hardware architectures based on the LUT paradigm, potentially influencing future hardware designs tailored to AI operations.

Future research could explore further optimization of LUT methods, alternative quantization strategies, and the applicability of T-MAC to model architectures beyond LLMs. Versions adapted to other hardware architectures could also broaden T-MAC's utility in edge computing and AI deployment.