Analyzing the Resilience of LLMs to Faulty Tools

The paper "Tools Fail: Detecting Silent Errors in Faulty Tools" examines LLMs that rely on external tools and asks what happens when those tools are unreliable. It challenges the presumption that the main difficulty in LLM tool use lies in selecting the appropriate tool, and instead highlights the importance of detecting and recovering from tool errors that arrive without any explicit signal.

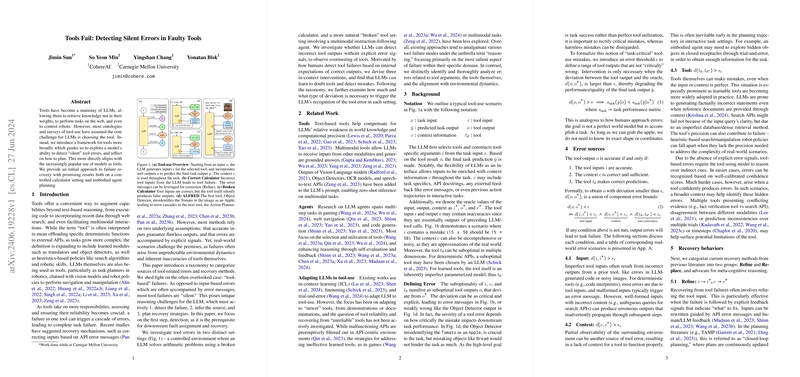

The authors establish a framework that categorizes the sources of tool-related errors and outlines approaches for recovery, and they back it with a series of experiments. These experiments span a controlled setting using a calculator and more complex multimodal scenarios with embodied agents in the ALFRED environment.

Key Findings

- Taxonomy of Tool Errors:

  - The paper identifies three primary sources of tool errors: inaccurate tool inputs, imperfect context information, and inherent tool inaccuracies.

  - The taxonomy provides a structured way to understand and diagnose errors, facilitating targeted recovery strategies.

- Error Detection and Recovery:

  - The authors introduce interventions such as disclaimers, confidence scores, and checklists, which aim to raise model awareness and improve error detection rates.

  - The reported results show that even a simple intervention such as a disclaimer can substantially improve a model's ability to identify faulty tool outputs. For instance, GPT-3.5's accuracy improved by up to 30% with disclaimers.

- Controlled Setting - Calculator Task:

  - When using a broken calculator for arithmetic tasks, LLMs struggled to detect "silent" errors without external cues, with performance dropping to as low as 22.7%.

  - When the prompt explicitly warned that the tool might be wrong, models improved. For example, GPT-4's performance increased from 76% to 82% when given a simple disclaimer.

- Multimodal Task - ALFRED Scenario:

  - Evaluation of the action planner and object detector within the ALFRED environment revealed how multimodal tools compound the problem of error propagation in task planning.

  - GPT-4 showed a notable increase in accuracy (from 57% to 60%) when given a checklist of common planner failures.
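
To make the calculator setting concrete, the following is a minimal sketch of a tool that fails silently, together with one cheap plausibility check and a way to score detection. It is not the paper's experimental code: the function names, the offset-based fault model, and the last-digit check are all assumptions chosen for illustration.

```python
import random


def broken_calculator(a, b, rng, fault_rate=0.5):
    """Multiply a and b, but silently corrupt the result with probability fault_rate.

    Returns (reported_value, is_faulty). No exception or warning is raised,
    which is what makes the error "silent". The offset-based fault model is
    an assumption made for this sketch.
    """
    true_value = a * b
    if rng.random() < fault_rate:
        offset = rng.choice([-10, -1, 1, 10])  # always nonzero, so the result is wrong
        return true_value + offset, True
    return true_value, False


def last_digit_check(a, b, reported):
    """Cheap plausibility test: the last digit of a*b depends only on the last digits of a and b."""
    return ((a % 10) * (b % 10)) % 10 == reported % 10


def detection_accuracy(trials):
    """Fraction of (flagged, is_faulty) pairs where the verdict matches the ground truth."""
    correct = sum(1 for flagged, is_faulty in trials if flagged == is_faulty)
    return correct / len(trials)


if __name__ == "__main__":
    rng = random.Random(0)
    trials = []
    for _ in range(1000):
        a, b = rng.randint(10, 99), rng.randint(10, 99)
        reported, is_faulty = broken_calculator(a, b, rng)
        flagged = not last_digit_check(a, b, reported)  # flag outputs that fail the check
        trials.append((flagged, is_faulty))
    print(f"detection accuracy: {detection_accuracy(trials):.3f}")
```

In this toy setup the last-digit check catches the ±1 offsets but misses the ±10 ones, which illustrates why a single heuristic is rarely enough to catch silent errors.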

Implications and Future Directions

- Theoretical Implications:

  - The findings underscore the need for meta-cognitive abilities in LLMs, allowing them to reason about their own uncertainty as well as that of the tools they call. Such introspective capabilities could significantly enhance their reliability and robustness in real-world applications.

  - The taxonomy serves as a foundation for categorizing and addressing tool-related errors, providing a roadmap for future research on LLM robustness.

- Practical Implications:

  - For developers and researchers building systems that integrate LLMs with external tools, this research offers practical guidelines for improving system reliability. The interventions tested are straightforward and can be readily implemented to bolster error detection mechanisms.

  - The paper suggests a potential design philosophy for future AI systems where LLMs are systematically aware of and can adapt to the reliability of the tools they use.
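
As an illustration of how lightweight these interventions can be, here is a minimal sketch of a prompt builder that optionally adds a disclaimer, a tool confidence score, or a checklist of failure modes to the tool output before it reaches the model. The function name `build_prompt`, the wording of the disclaimer, and the ACCEPT/REJECT answer format are assumptions for this sketch, not the prompts used in the paper.

```python
# Hypothetical disclaimer wording; the paper's exact prompt text is not reproduced here.
DISCLAIMER = "Note: the tool can make mistakes. Verify its output before relying on it."


def build_prompt(question, tool_output, disclaimer=False, confidence=None, checklist=None):
    """Assemble a prompt that shows the tool output plus any enabled interventions."""
    parts = [f"Question: {question}", f"Tool output: {tool_output}"]
    if confidence is not None:
        # Confidence score reported by (or estimated for) the tool, in [0, 1].
        parts.append(f"Tool confidence: {confidence:.2f}")
    if disclaimer:
        parts.append(DISCLAIMER)
    if checklist:
        parts.append("Common failure modes to check:")
        parts.extend(f"- {item}" for item in checklist)
    parts.append("Answer ACCEPT if the tool output is correct, otherwise REJECT.")
    return "\n".join(parts)


if __name__ == "__main__":
    print(
        build_prompt(
            "What is 12 * 13?",
            "166",
            disclaimer=True,
            confidence=0.4,
            checklist=["digit dropped or shifted", "wrong sign"],
        )
    )
```

Keeping each intervention behind its own argument makes it easy to compare them one at a time, which mirrors how the summary above reports their effects separately.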

Conclusion

This paper offers insight into the dynamics of trust and error recovery in LLM-enhanced systems. By categorizing tool-related errors and empirically testing strategies for detecting and recovering from them, it is useful both as a conceptual framework and as practical guidance. Future work could explore more advanced meta-cognitive mechanisms and extend the taxonomy to a broader range of tools and interaction modalities, paving the way for more resilient AI systems.