Overview of "Improving Hyperparameter Optimization with Checkpointed Model Weights"

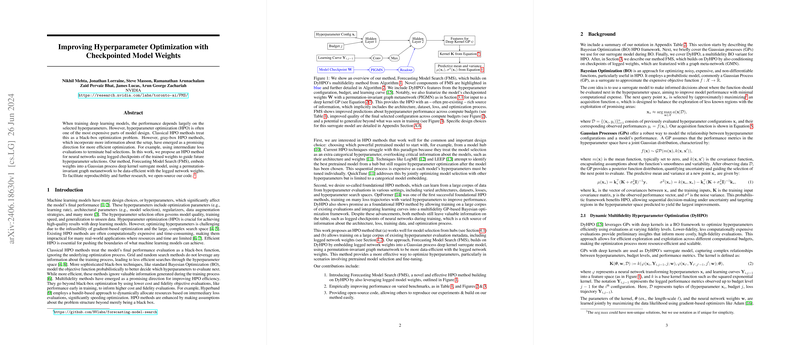

The paper "Improving Hyperparameter Optimization with Checkpointed Model Weights" by Nikhil Mehta et al. introduces a novel approach to Hyperparameter Optimization (HPO) in deep learning. The method uses checkpointed model weights to improve the efficiency and accuracy of HPO, which is often a resource-intensive process. The proposed method, termed Forecasting Model Search (FMS), embeds model weights with a permutation-invariant graph metanetwork (PIGMN) and passes that embedding to a Gaussian Process (GP) deep kernel surrogate model. The aim is a surrogate that is more data-efficient and more informative about hyperparameter performance.

Introduction and Background

Optimizing hyperparameters is essential for achieving high-quality results in deep learning models. Traditional HPO methods treat this as a black-box optimization problem, often leading to computationally expensive and time-consuming processes. More recent gray-box methods, which incorporate additional information from the training process, have shown promise in enhancing efficiency. For instance, multifidelity methods like Hyperband use intermediate evaluations to inform higher-fidelity evaluations, thus expediting the search process.

However, these methods still leave valuable information unused, such as the weight checkpoints saved while a neural network trains. By integrating this often overlooked resource, the proposed FMS method aims to improve HPO efficiency further, especially when dealing with pretrained model hubs.

Methodology

The core contribution of the paper is the integration of logged model weights into the HPO process. The authors build on the DyHPO framework, which already leverages GP-based Bayesian Optimization (BO) for multifidelity HPO. DyHPO uses learning curves to inform optimization decisions dynamically.

FMS enhances DyHPO by incorporating checkpointed weights. It embeds the weights with a permutation-invariant graph metanetwork (PIGMN), which respects the symmetries inherent in neural network structures (for example, reordering the hidden units of a layer changes the weight arrays but not the function the network computes). This embedding is used as input for a GP deep kernel surrogate model, which informs the HPO process. FMS is designed to work effectively in two scenarios:

- Selecting pretrained models from hubs: It considers additional model information, such as architecture and weights.

- Learning from a large corpus of pre-existing HPO metadata: This includes varied architectures, datasets, and optimization processes.
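The symmetry that the PIGMN is built to respect can be seen in a toy case. The sketch below is not the paper's metanetwork: it assumes a one-hidden-layer MLP and a hand-written invariant embedding (sorted per-unit weight tuples), and the names `mlp`, `permute`, `embed`, and `rbf` are hypothetical. It only illustrates that reordering hidden units changes the raw weights but neither the network's output nor an invariant embedding, so a kernel computed on the embeddings treats both checkpoints as the same point.

```python
import math

def mlp(x, W1, b1, w2):
    """One-hidden-layer MLP with tanh units; W1 has one row per hidden unit."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return sum(v * h for v, h in zip(w2, hidden))

def permute(W1, b1, w2, order):
    """Reorder hidden units; the weight arrays change, the function does not."""
    return [W1[i] for i in order], [b1[i] for i in order], [w2[i] for i in order]

def embed(W1, b1, w2):
    """Toy invariant embedding (an assumption, not the PIGMN): describe each
    hidden unit by a tuple of its weights, then sort so unit order is irrelevant."""
    units = sorted(tuple(row) + (b, v) for row, b, v in zip(W1, b1, w2))
    return [value for unit in units for value in unit]

def rbf(u, v, lengthscale=1.0):
    """RBF kernel between two embeddings of equal length."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2 * lengthscale ** 2))

# Hypothetical checkpoint with three hidden units and two inputs.
W1 = [[0.5, -1.0], [1.5, 0.25], [-0.75, 2.0]]
b1 = [0.1, -0.2, 0.3]
w2 = [1.0, -2.0, 0.5]
W1p, b1p, w2p = permute(W1, b1, w2, [2, 0, 1])

x = [0.3, -0.7]
same_output = math.isclose(mlp(x, W1, b1, w2), mlp(x, W1p, b1p, w2p))
same_embedding = embed(W1, b1, w2) == embed(W1p, b1p, w2p)
kernel_value = rbf(embed(W1, b1, w2), embed(W1p, b1p, w2p))
```

A surrogate fed the flattened raw weights would see `W1` and `W1p` as different inputs even though they define the same model; an invariant embedding removes that redundancy before the kernel is applied.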

The method conducts multifidelity optimization by gradually increasing the computational budget allocated to more promising configurations, similar to Hyperband’s approach.
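The budget-allocation idea can be illustrated with successive halving, the routine at the core of Hyperband. This is a generic sketch, not the scheduler used in the paper; `evaluate` is a hypothetical stand-in for training a configuration for a given budget and returning a validation loss, and the learning-rate values are made up.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Each round, rank configurations at the current budget, keep the best
    1/eta of them, and multiply the budget by eta for the next round.

    evaluate(config, budget) returns a loss (lower is better).
    Returns the final survivor and a log of (budget, survivors) per round.
    """
    survivors = list(configs)
    budget = min_budget
    history = []
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget))
        keep = max(1, len(ranked) // eta)
        survivors = ranked[:keep]
        history.append((budget, list(survivors)))
        budget *= eta
    return survivors[0], history

def evaluate(lr, budget):
    """Hypothetical loss: lowest at lr = 0.1 and shrinking as the budget grows."""
    return abs(lr - 0.1) + 1.0 / budget

learning_rates = [0.001, 0.01, 0.05, 0.1, 0.3, 0.5, 0.9, 1.0]
best, history = successive_halving(learning_rates, evaluate)
```

Most configurations are discarded after a cheap evaluation, so the expensive high-budget runs are spent only on the few that looked promising early.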

Experimental Results

The authors present a comprehensive set of experiments to evaluate FMS. They use two model hubs: a Simple CNN Hub containing variations of a single architecture and a Pretrained Model Hub comprising various architectures, including ResNet, Vision Transformer (ViT), CNN, and Deep Sets.

Kendall’s Tau Evaluation:

- FMS consistently achieves high Kendall’s Tau values, meaning the ordering of hyperparameter configurations it predicts agrees closely with their actual ordering.

- FMS-GMN, the permutation-invariant variant, outperforms other methods, including DyHPO and Random Search.
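For readers unfamiliar with the metric, Kendall's Tau is a rank correlation: it counts how many pairs of items two rankings order the same way versus the opposite way. The sketch below computes the tau-a variant in plain Python; the prediction and accuracy values are hypothetical, and the paper's exact evaluation protocol is not reproduced here.

```python
from itertools import combinations

def kendall_tau(predicted, actual):
    """Kendall's tau-a: (concordant - discordant) / number of pairs.
    Tied pairs count as neither concordant nor discordant."""
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    concordant = discordant = 0
    for i, j in combinations(range(len(predicted)), 2):
        sign = (predicted[i] - predicted[j]) * (actual[i] - actual[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    pairs = len(predicted) * (len(predicted) - 1) // 2
    return (concordant - discordant) / pairs

# Hypothetical surrogate predictions and final accuracies for five configurations.
predicted = [0.62, 0.70, 0.55, 0.81, 0.77]
actual = [0.60, 0.72, 0.58, 0.79, 0.80]
tau = kendall_tau(predicted, actual)
```

In this example nine of the ten pairs are ordered consistently and one is reversed, giving a tau of 0.8. A value of 1 means the two rankings agree on every pair, and -1 means they disagree on every pair.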

Regret Over Time:

- The results show that FMS-GMN leads to lower regret over time, suggesting more efficient convergence to high-performing hyperparameter configurations.

- This trend holds across various model hubs and datasets, underlining the robustness of the proposed method.
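A regret curve of this kind can be computed as follows, assuming the common definition of simple regret (the gap between the best achievable score and the best score found so far). The function name and the accuracy values are hypothetical, and the paper may normalize or define regret differently.

```python
def regret_curve(observed_scores, best_possible):
    """Simple regret after each evaluation: best_possible minus the best
    score seen so far. The curve never increases."""
    curve = []
    best_so_far = float("-inf")
    for score in observed_scores:
        best_so_far = max(best_so_far, score)
        curve.append(best_possible - best_so_far)
    return curve

# Hypothetical accuracies in the order a search evaluated them;
# 0.90 is taken to be the best value available in the search space.
scores = [0.60, 0.75, 0.70, 0.85, 0.80, 0.90]
curve = regret_curve(scores, best_possible=0.90)
```

A method whose curve drops faster finds good configurations with fewer evaluations, which is the sense in which lower regret over time indicates more efficient search.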

Generalization Beyond Training Data:

- The paper also investigates the generalization capabilities of FMS. The authors train FMS on multiple datasets and then evaluate its performance on unseen datasets and architectures.

- FMS demonstrates effective transfer learning, achieving lower regret than the non-generalization setups.

Implications

The practical implications of this work are significant. By incorporating checkpointed weights into the HPO process, FMS offers a more informed and efficient approach to navigating the hyperparameter space. This has direct applications in scenarios where computational resources and time are constrained, such as in industrial settings or extensive research pipelines.

From a theoretical perspective, the work highlights the potential of leveraging additional sources of information in optimization tasks. It opens up avenues for further research into using various forms of logged training metadata, beyond just weights and learning curves, to guide HPO.

Potential Future Developments

There are several promising avenues for future research:

- Scalability Enhancements: Extending the method to scale with larger datasets and more extensive hyperparameter spaces.

- Dynamic Hyperparameter Spaces: Adapting FMS to handle dynamically changing hyperparameter search spaces.

- Integration with LLMs: Exploring the integration of LLMs to process textual information, such as code documentation, to inform hyperparameter choices.

- Hierarchical and Text-based Hyperparameter Tuning: Expanding the method to address more complex hyperparameter types.

Conclusion

In summary, the paper presents a substantial advancement in HPO by integrating checkpointed model weights into the optimization process. By doing so, it leverages a rich source of information that enhances the efficiency and effectiveness of hyperparameter searches, particularly in contexts involving pretrained model hubs. The comprehensive evaluation and promising results support the potential of FMS to redefine best practices in hyperparameter optimization.