From Decoding to Meta-Generation: Inference-time Algorithms for LLMs

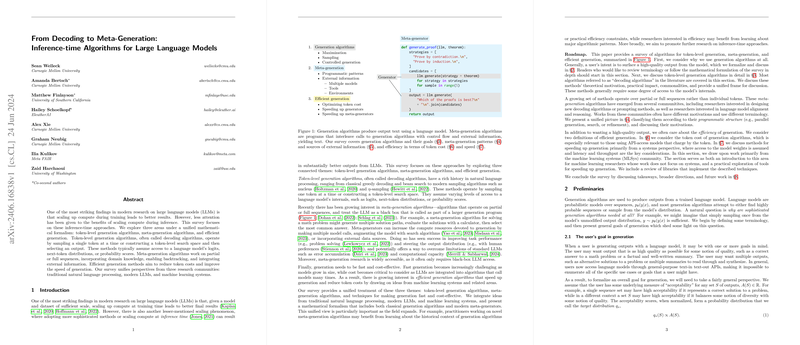

The paper “From Decoding to Meta-Generation: Inference-time Algorithms for LLMs” by Welleck et al. provides a comprehensive survey of the evolving methodologies for efficient and sophisticated inference in LLMs. The paper spans a wide spectrum of generation techniques, categorized mainly into token-level generation algorithms, meta-generation algorithms, and efficient generation approaches.

Token-Level Generation Algorithms

Token-level generation algorithms have been a cornerstone in NLP. These algorithms focus on generating text token-by-token, often leveraging logits, next-token distributions, or probability scores provided by the LLM.

MAP Decoding Algorithms:

Methods like greedy decoding and beam search form the backbone of maximum a posteriori (MAP) decoding strategies, which aim to find the single most probable output sequence. Greedy decoding selects the highest-probability token at each step, while beam search extends several partial sequences in parallel and retains the top-k highest-scoring sequences at each step. However, MAP decoding often produces repetitive or empty sequences, which makes it less desirable in certain applications.
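The difference between the two strategies can be seen on a toy example. The sketch below assumes a hypothetical hand-written `next_token_probs` function standing in for an LLM. It is an illustration of the idea, not code from the survey.

```python
import math

# Hypothetical toy "model": maps a prefix (tuple of tokens) to a next-token distribution.
def next_token_probs(prefix):
    if not prefix:
        return {"a": 0.6, "b": 0.4}
    if prefix[-1] == "a":
        return {"x": 0.5, "y": 0.5}
    if prefix[-1] == "b":
        return {"x": 0.9, "y": 0.1}
    return {"<eos>": 1.0}

def greedy_decode(max_len=3):
    """Pick the single most probable token at every step."""
    seq, logp = (), 0.0
    for _ in range(max_len):
        probs = next_token_probs(seq)
        tok = max(probs, key=probs.get)
        seq += (tok,)
        logp += math.log(probs[tok])
        if tok == "<eos>":
            break
    return seq, logp

def beam_search(k=2, max_len=3):
    """Keep the k highest-scoring partial sequences at every step."""
    beams = [((), 0.0)]
    for _ in range(max_len):
        candidates = []
        for seq, logp in beams:
            if seq and seq[-1] == "<eos>":
                candidates.append((seq, logp))  # finished beams carry over unchanged
                continue
            for tok, p in next_token_probs(seq).items():
                candidates.append((seq + (tok,), logp + math.log(p)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:k]
    return beams

greedy_seq, greedy_logp = greedy_decode()
best_seq, best_logp = beam_search()[0]
```

In this toy setup greedy decoding commits to "a" first and ends with total probability 0.3, while beam search with k=2 keeps "b" alive and finds a sequence with probability 0.36.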

Sampling Algorithms:

Ancestral sampling operates by recursively sampling tokens according to the model’s distribution. Although it introduces diversity, it can lead to incoherence, so there is a tradeoff between diversity and coherence. Modifications such as top-k sampling, nucleus (top-p) sampling, and temperature scaling are used to strike a balance. For instance, nucleus sampling restricts sampling at each step to the smallest set of tokens whose cumulative probability reaches a threshold p, which removes the low-probability tail that tends to cause incoherent continuations.
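A minimal sketch of these three adjustments follows, operating on a plain list of logits or probabilities. The function names are illustrative and not taken from any particular library.

```python
import math
import random

def apply_temperature(logits, temperature):
    """Softmax over logits / temperature; lower temperature sharpens the distribution."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def nucleus_filter(probs, p):
    """Keep the smallest set of tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in order:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]

def sample(probs, rng=random):
    """Draw one token index from the (possibly truncated) distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

probs = [0.5, 0.3, 0.15, 0.05]
truncated = nucleus_filter(probs, p=0.7)  # only the first two tokens survive
token = sample(truncated, random.Random(0))
```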

Controlled Generation:

Algorithms like DExperts, reward-augmented decoding, and other inference-time adapters control the statistical properties of generated text and allow for stylistic or attribute-specific generation. These methods use adapters that modify the next-token distribution based on signals such as reward models or attribute classifiers.
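As one illustration of adjusting the next-token distribution, the sketch below follows the DExperts-style idea of shifting a base model's logits by the difference between an "expert" and an "anti-expert" model. The three-word vocabulary and all logit values are made up for the example.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dexperts_combine(base_logits, expert_logits, anti_logits, alpha=1.0):
    """Shift base logits toward the expert and away from the anti-expert.

    alpha controls the steering strength; alpha = 0 recovers the base model.
    """
    return [b + alpha * (e - a)
            for b, e, a in zip(base_logits, expert_logits, anti_logits)]

# Hypothetical vocabulary and logits, for illustration only.
vocab = ["great", "terrible", "ok"]
base = [1.0, 1.0, 0.0]     # base model is undecided between "great" and "terrible"
expert = [2.0, 0.0, 0.0]   # model tuned on text with the desired attribute
anti = [0.0, 2.0, 0.0]     # model tuned on text with the undesired attribute

steered = softmax(dexperts_combine(base, expert, anti, alpha=1.0))
best_token = vocab[steered.index(max(steered))]
```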

Meta-Generation Algorithms

Meta-generation algorithms extend the capabilities of token-level algorithms by allowing iterative, layered, and multi-step generation processes. They treat the LLM as a black-box component within larger generative frameworks.

Chained Meta-Generators:

A chained structure integrates multiple generator calls, enabling complex generation tasks through sequential or hierarchical generator compositions. This is particularly useful in problem decomposition, where complex queries are divided into manageable sub-queries, which are then solved and aggregated.
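A problem-decomposition chain of this kind might look like the sketch below. The `generate` argument stands for any text-in, text-out generator. The prompts and the `fake_generate` stub are hypothetical placeholders so that the example runs without a model.

```python
def decompose_and_solve(question, generate):
    """Chain three kinds of generator calls: decompose, solve each part, aggregate."""
    plan = generate(f"Break into sub-questions, one per line:\n{question}")
    sub_questions = [line.strip() for line in plan.splitlines() if line.strip()]
    sub_answers = [generate(f"Answer concisely: {q}") for q in sub_questions]
    notes = "\n".join(f"{q} -> {a}" for q, a in zip(sub_questions, sub_answers))
    return generate(f"Question: {question}\nNotes:\n{notes}\nFinal answer:")

# Hypothetical stub generator standing in for an LLM call.
calls = []

def fake_generate(prompt):
    calls.append(prompt)
    if prompt.startswith("Break"):
        return "What is 2+3?\nWhat is 5*4?"
    if prompt.startswith("Answer"):
        return "5" if "2+3" in prompt else "20"
    return "20"

final_answer = decompose_and_solve("Compute (2+3)*4", fake_generate)
```

The chain makes one decomposition call, one call per sub-question, and one aggregation call, so its cost grows with the number of sub-questions.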

Parallel Meta-Generators:

Parallel meta-generators generate multiple sequences in parallel and then aggregate them to improve the quality of the final output. Methods like best-of-N, majority voting, and self-consistency fall under this category. Best-of-N generates N sequences and selects the one that maximizes a given scoring function, while self-consistency samples several outputs and returns the most frequent final answer, which raises the probability of a correct result.
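Both aggregation schemes reduce to a few lines once the generator and the scoring or answer-extraction functions are given. In the sketch below, `generate`, `score`, and `extract_answer` are assumed callables supplied by the user, and the canned samples replace real model outputs.

```python
from collections import Counter

def best_of_n(generate, score, n):
    """Sample n candidates and return the one with the highest score."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)

def majority_vote(generate, extract_answer, n):
    """Sample n candidates and return the most frequent extracted answer."""
    answers = [extract_answer(generate()) for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]

# Canned samples standing in for stochastic model outputs (illustrative only).
samples = iter(["... so the answer is 42", "... so the answer is 41",
                "... so the answer is 42", "... so the answer is 42",
                "... so the answer is 7"])
voted = majority_vote(lambda: next(samples),
                      extract_answer=lambda text: text.split()[-1],
                      n=5)

drafts = iter(["a", "abc", "ab"])
longest = best_of_n(lambda: next(drafts), score=len, n=3)  # toy score: length
```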

Step-Level Search Algorithms:

Step-level algorithms, such as tree search or beam search over steps, operate on partial solutions (steps) rather than full sequences. They use heuristic evaluations to decide which partial solutions to keep and expand steps iteratively to approach a solution. Prominent applications include mathematical problem solving and theorem proving.
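The sketch below shows step-level beam search on a toy puzzle rather than on model-generated reasoning steps: reach a target number from a start value using the steps "+3" and "*2". The distance to the target plays the role of the heuristic evaluator. In an LLM setting the steps would come from a generator and the heuristic from a learned value or reward model.

```python
def step_beam_search(start, target, beam_width=2, max_depth=6):
    """Beam search over partial solutions (lists of steps) instead of tokens."""
    ops = {"+3": lambda v: v + 3, "*2": lambda v: v * 2}
    beam = [(start, [])]  # each entry: (current value, steps taken so far)
    for _ in range(max_depth):
        # Expand every partial solution by every possible next step.
        candidates = [(op(value), steps + [name])
                      for value, steps in beam
                      for name, op in ops.items()]
        for value, steps in candidates:
            if value == target:
                return steps
        # Heuristic: keep the partial solutions closest to the target.
        candidates.sort(key=lambda c: abs(target - c[0]))
        beam = candidates[:beam_width]
    return None  # no solution found within the search budget

solution = step_beam_search(start=1, target=10)
```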

Refinement Algorithms:

Refinement involves iterative improvement of an initial generation by incorporating feedback loops. Algorithms like self-refinement and Reflexion generate an initial solution and iteratively refine it using feedback derived from models or external evaluations.
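The general loop can be sketched as follows. The `get_feedback` and `revise` callables are assumptions: in practice they would be model calls or external checkers such as unit tests, whereas here a toy rule-based critic is used so that the example runs on its own.

```python
def refine(initial, get_feedback, revise, max_rounds=3):
    """Iteratively revise a draft until the critic has no more feedback."""
    draft = initial
    for _ in range(max_rounds):
        feedback = get_feedback(draft)
        if feedback is None:  # critic is satisfied, stop early
            break
        draft = revise(draft, feedback)
    return draft

# Toy critic and reviser (hypothetical stand-ins for model or tool calls).
def toy_feedback(text):
    if not text[0].isupper():
        return "capitalize"
    if not text.endswith("."):
        return "add period"
    return None

def toy_revise(text, feedback):
    if feedback == "capitalize":
        return text[0].upper() + text[1:]
    return text + "."

refined = refine("hello world", toy_feedback, toy_revise)
```

The `max_rounds` cap bounds the cost of the loop, since every round adds at least one feedback call and one revision call.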

Incorporating External Information

The paper highlights the utility of integrating external tools, models, or domain-specific information into the generation processes. Notably:

Multiple Models:

Combining smaller, efficient models with larger LLMs can improve decoding speed or output quality. For example, contrastive decoding scores each candidate token by the difference between its log-probability under a large model and under a small model, favoring tokens that the large model prefers much more strongly than the small one.
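A single contrastive decoding step can be sketched as below, assuming the commonly described formulation: candidates are first restricted to tokens the large ("expert") model finds plausible, then ranked by the log-probability gap to the small ("amateur") model. The token names and probabilities are invented for illustration.

```python
import math

def contrastive_pick(expert_probs, amateur_probs, alpha=0.1):
    """Pick the plausible token with the largest expert-minus-amateur log-prob gap."""
    # Plausibility constraint: only tokens with at least alpha * the top expert probability.
    cutoff = alpha * max(expert_probs.values())
    plausible = [t for t, p in expert_probs.items() if p >= cutoff]
    return max(plausible,
               key=lambda t: math.log(expert_probs[t]) - math.log(amateur_probs[t]))

# Made-up next-token distributions for the two models.
expert = {"the": 0.5, "Paris": 0.45, "qx": 0.05}
amateur = {"the": 0.7, "Paris": 0.299, "qx": 0.001}

with_constraint = contrastive_pick(expert, amateur, alpha=0.2)
without_constraint = contrastive_pick(expert, amateur, alpha=0.0)
```

Without the plausibility cutoff, the rare token "qx" wins only because the amateur assigns it almost no probability, which is why the constraint matters in this sketch.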

External Environment:

Incorporating external tools, like search engines or code interpreters, allows addressing specific tasks more effectively. This approach notably augments the generation pipeline with capabilities like real-time data retrieval and computational functionality beyond the model’s intrinsic knowledge.

Token Cost and Performance Analysis

A significant concern addressed is the tradeoff between computational cost and generation quality. Meta-generation algorithms, while often improving quality, introduce substantial token and computational overheads. The paper includes detailed cost analyses and highlights techniques to manage and reduce token budgets, such as caching and parallel computation.
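As a rough illustration of such a cost analysis, the sketch below counts tokens for best-of-N sampling with and without sharing the prompt across samples. The accounting is a simplifying assumption made for this example (one unit per prompt or output token, every sample has the same output length), not the survey's exact cost model.

```python
def best_of_n_tokens(prompt_tokens, output_tokens, n, share_prompt=False):
    """Total tokens processed when sampling n outputs for one prompt.

    With share_prompt=True the prompt is processed once and reused
    (for example via a cached prefix); otherwise it is reprocessed per sample.
    """
    prompt_cost = prompt_tokens if share_prompt else n * prompt_tokens
    return prompt_cost + n * output_tokens

naive = best_of_n_tokens(prompt_tokens=500, output_tokens=200, n=8)
shared = best_of_n_tokens(prompt_tokens=500, output_tokens=200, n=8, share_prompt=True)
```

Under these assumptions the naive budget is 5600 tokens and the shared-prompt budget is 2100 tokens, so the saving grows with both the prompt length and the number of samples.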

Speeding Up Generation

Given the computational burden associated with sophisticated generation algorithms, the paper reviews methods to enhance generation speed, such as:

State Reuse and Compression:

Efficiently managing and reusing pre-computed attention states (the key-value, or KV, cache) across generation steps avoids recomputing them for the whole prefix at every step, which significantly reduces overhead.
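The effect of caching can be shown with a deliberately tiny attention model in which keys, values, and queries are scalars. The projection rule in `project_kv` is made up for the example. The point is only the number of key/value projections with and without reuse.

```python
import math

def project_kv(token_id, counter):
    """Toy key/value projection; counts how often it is computed."""
    counter["kv"] += 1
    return 0.1 * token_id, float(token_id)

def attend(query, keys, values):
    """Scalar softmax attention over all cached positions."""
    weights = [math.exp(query * k) for k in keys]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total

def decode_without_cache(tokens):
    counter, outputs = {"kv": 0}, []
    for t in range(1, len(tokens) + 1):
        # Recompute keys and values for the whole prefix at every step.
        kv = [project_kv(tok, counter) for tok in tokens[:t]]
        keys, values = zip(*kv)
        outputs.append(attend(0.1 * tokens[t - 1], keys, values))
    return outputs, counter["kv"]

def decode_with_cache(tokens):
    counter, outputs = {"kv": 0}, []
    keys, values = [], []  # the KV cache, extended by one entry per step
    for tok in tokens:
        k, v = project_kv(tok, counter)
        keys.append(k)
        values.append(v)
        outputs.append(attend(0.1 * tok, keys, values))
    return outputs, counter["kv"]

tokens = [3, 1, 4, 1, 5]
slow_out, slow_count = decode_without_cache(tokens)
fast_out, fast_count = decode_with_cache(tokens)
```

For five tokens the uncached loop computes 15 projections and the cached loop computes 5, while both produce the same attention outputs.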

Speculative Decoding:

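Draft-then-verify mechanisms let a small, fast draft model propose several tokens, which the larger target model then checks in parallel. Tokens that pass verification are accepted, so generation speeds up while the target model still determines the final output.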
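The sketch below shows a greedy variant of draft-then-verify. Both "models" are hypothetical deterministic functions from a token list to the next token, and the verification loop stands in for what would be a single batched forward pass of the target model. Sampling-based variants use a probabilistic acceptance rule that is not shown here.

```python
def speculative_greedy(target_next, draft_next, prefix, num_tokens, gamma=4):
    """Greedy draft-then-verify; returns the new tokens and the number of target passes."""
    out = list(prefix)
    target_passes = 0
    while len(out) - len(prefix) < num_tokens:
        # 1) Draft: the cheap model proposes gamma tokens autoregressively.
        proposal = []
        for _ in range(gamma):
            proposal.append(draft_next(out + proposal))
        # 2) Verify: counted as one target pass over all proposed positions.
        target_passes += 1
        accepted = []
        for tok in proposal:
            expected = target_next(out + accepted)
            if tok == expected:
                accepted.append(tok)
            else:
                accepted.append(expected)  # replace the first mismatch and stop
                break
        else:
            # Every draft token matched; the same pass also yields one extra token.
            accepted.append(target_next(out + accepted))
        out.extend(accepted)
    return out[len(prefix):len(prefix) + num_tokens], target_passes

# Toy models: the target counts upward modulo 10; the draft errs after a 2.
def target_next(seq):
    return (seq[-1] + 1) % 10

def draft_next(seq):
    return 0 if seq[-1] == 2 else (seq[-1] + 1) % 10

generated, passes = speculative_greedy(target_next, draft_next, prefix=[0], num_tokens=8)
```

In this toy run the output matches plain greedy decoding with the target model, but it needs 2 target passes instead of 8.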

Hardware-Aware Optimization:

Techniques such as FlashAttention and quantization exploit the characteristics of the underlying hardware, such as GPUs and TPUs, to speed up generation with little or no loss in model quality.
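As a small illustration of quantization, the sketch below applies symmetric absmax int8 quantization to a list of weights. This is one simple scheme chosen for the example. Production systems typically quantize per channel or per block and use more refined methods.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single absmax scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.03, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored in 8 bits instead of 16 or 32, and the rounding error per weight is at most half the scale.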

Discussion and Conclusion

The survey concludes by synthesizing the motivations and practical implications of sophisticated generation algorithms. It posits that generation algorithms are essential not only for addressing inherent deficiencies in models (such as degeneracies) but also for aligning generated text with specific distributional goals, dynamically allocating computational resources, and integrating external information to enhance functionality. The foreseeable trajectory includes refining these methodologies, especially in domains extending beyond traditional text generation, such as digital agents and interactive environments.

This comprehensive survey elucidates the breadth and depth of inference-time methods for LLMs, setting a foundation for further research and practical advancements in AI-driven text generation.