A Critical Study of What Code-LLMs (Do Not) Learn

In this paper, Abhinav Anand, Shweta Verma, Krishna Narasimhan, and Mira Mezini critically examine the capabilities and limitations of LLMs trained on code corpora (code-LLMs). Built on the Transformer architecture, code-LLMs have made substantial progress on many coding-assistance tasks. The authors set out to identify where these models fall short, in particular which important code properties they fail to encode.

Key Insights and Findings

Arbitrary Assumptions in Prior Work

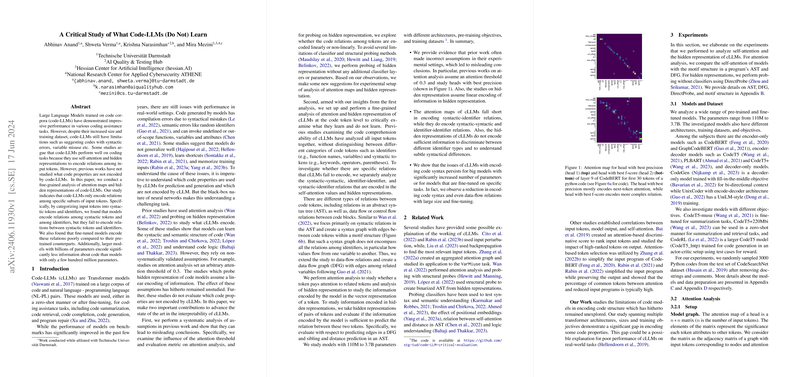

A primary contribution of the paper is a systematic analysis that challenges several assumptions made in earlier studies of attention maps and hidden representations. Prior work frequently relied on an arbitrary attention threshold (commonly 0.3) and on conventional linear probing, both of which can yield skewed or misleading interpretations. The paper instead conducts a more fine-grained examination, advocating lower attention thresholds (such as 0.05) and non-linear approaches to probing hidden representations.
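To make the effect of the threshold concrete, here is a minimal sketch of how the set of "attended" token pairs changes between 0.3 and 0.05. The attention values are invented for illustration, the helper name `attended_pairs` is hypothetical, and excluding self-attention is an assumption of this sketch rather than something the paper is known to do.

```python
def attended_pairs(attention, threshold):
    """Return (i, j) pairs where token i attends to token j with weight >= threshold.

    Self-attention (i == j) is skipped; that is a choice made for this sketch.
    """
    return [
        (i, j)
        for i, row in enumerate(attention)
        for j, weight in enumerate(row)
        if i != j and weight >= threshold
    ]


# Toy attention map for one head over four tokens (invented values, rows sum to 1).
attention = [
    [0.70, 0.20, 0.06, 0.04],
    [0.35, 0.50, 0.11, 0.04],
    [0.08, 0.07, 0.81, 0.04],
    [0.02, 0.31, 0.07, 0.60],
]

high = attended_pairs(attention, 0.3)   # threshold common in prior work
low = attended_pairs(attention, 0.05)   # lower threshold discussed above

print(len(high), "pairs at 0.3;", len(low), "pairs at 0.05")
```

In this toy map, the higher threshold keeps only two pairs while the lower one keeps eight, so any conclusion about which relations a head encodes depends heavily on the cutoff.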

Specific Observations on Attention Maps

The analysis shows that code-LLMs primarily encode relations among syntactic tokens and among identifiers, but struggle to encode relations between syntactic tokens and identifiers. Such partial encoding is insufficient for understanding program logic and flow. Moreover, models fine-tuned on code-specific tasks and models with billions of parameters (e.g., CodeGen, CodeT5+ 2B) perform worse in this respect than smaller pre-trained models. This finding challenges the prevailing belief that larger models inherently capture more sophisticated relationships.
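The three relation categories above can be illustrated with a small sketch that labels tokens as syntactic or identifier and then buckets attended pairs by category. This is only an approximation under stated assumptions: it uses Python's standard `tokenize` module rather than the subword tokenizers real code-LLMs use, it ignores literals, and the attended pairs are hypothetical.

```python
import io
import keyword
import tokenize
from collections import Counter


def token_kinds(source):
    """Label each Python token as 'identifier' or 'syntactic' (keywords, operators)."""
    kinds = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            kinds.append((tok.string, "identifier"))
        elif tok.type in (tokenize.NAME, tokenize.OP):
            kinds.append((tok.string, "syntactic"))
        # Other token types (numbers, strings, newlines) are ignored in this sketch.
    return kinds


def relation_counts(kinds, pairs):
    """Count attended pairs per relation category."""
    counts = Counter()
    for i, j in pairs:
        a, b = kinds[i][1], kinds[j][1]
        counts[f"{a}-{a}" if a == b else "syntactic-identifier"] += 1
    return counts


source = "if x > y: z = x"
kinds = token_kinds(source)
# Hypothetical attended pairs (token index i attends to token index j).
pairs = [(0, 4), (1, 7), (5, 7), (0, 1)]
counts = relation_counts(kinds, pairs)
print(dict(counts))
```

A low count in the `syntactic-identifier` bucket relative to the other two, measured over real attention maps, would correspond to the gap described above.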

Hidden Representations and Encoding Complexities

The use of DirectProbe exposes another critical limitation, tied to the non-linear nature of the encoded information. Probing without a classifier layer shows that, while code-LLMs encode certain syntactic and data-flow relations among tokens, they do not effectively encode more nuanced syntactic structures or identifier types. This gap limits the models' ability to capture the semantics of code, particularly variable types and how variables interact within control flow and data dependencies.
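Why non-linear encoding matters for probing can be shown with a toy contrast. The sketch below is not DirectProbe; it is a simplified illustration in which a linear probe (a perceptron) is compared with a classifier-free, geometry-only check (leave-one-out nearest neighbour) on synthetic 2-D "representations" arranged in an XOR pattern. All data and function names are invented for the example.

```python
def make_xor_clusters():
    """Four tight clusters at the corners of a unit square, labelled like XOR."""
    corners = {(0.0, 0.0): 0, (1.0, 1.0): 0, (0.0, 1.0): 1, (1.0, 0.0): 1}
    offsets = [(0.0, 0.0), (0.05, 0.0), (0.0, 0.05)]
    return [((cx + dx, cy + dy), label)
            for (cx, cy), label in corners.items()
            for dx, dy in offsets]


def linear_probe_accuracy(data, epochs=100, lr=0.1):
    """Train a perceptron (a linear probe) and return its training accuracy."""
    w0 = w1 = b = 0.0
    for _ in range(epochs):
        for (x, y), label in data:
            pred = 1 if w0 * x + w1 * y + b > 0 else 0
            err = label - pred
            w0, w1, b = w0 + lr * err * x, w1 + lr * err * y, b + lr * err
    correct = sum((1 if w0 * x + w1 * y + b > 0 else 0) == label
                  for (x, y), label in data)
    return correct / len(data)


def nearest_neighbour_accuracy(data):
    """Leave-one-out 1-NN accuracy: no trained classifier, only geometry."""
    correct = 0
    for i, ((x, y), label) in enumerate(data):
        others = [d for j, d in enumerate(data) if j != i]
        _, nearest_label = min(
            others, key=lambda d: (d[0][0] - x) ** 2 + (d[0][1] - y) ** 2)
        correct += nearest_label == label
    return correct / len(data)


data = make_xor_clusters()
linear_acc = linear_probe_accuracy(data)
nn_acc = nearest_neighbour_accuracy(data)
print(f"linear probe: {linear_acc:.2f}, nearest neighbour: {nn_acc:.2f}")
```

The label information is fully present in the geometry, yet no linear probe can separate it, so a linear probe alone would wrongly suggest the property is not encoded.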

Implications and Future Directions

These limitations carry theoretical and practical implications for developing next-generation code-LLMs:

- Theoretical Implications: The Transformer architecture, as currently used, may be inherently constrained in capturing all the code relations needed for robust code understanding. The paper advocates exploring alternative architectures or hybrid models that integrate multiple learning paradigms (for instance, neural-symbolic learning).

- Practical Implications: As the paper demonstrates, relying heavily on benchmark performance may mask these underlying limitations, particularly given the models' propensity to memorize training data. This calls into question deploying such models in real-world coding assistance tools without first investigating and remediating these encoding gaps.

- Model Fine-Tuning: The paper suggests that fine-tuning on specific downstream tasks does not substantially mitigate these limitations. It further posits that increasing model size exacerbates the problem, potentially because larger models memorize more rather than comprehend better.

Future Research Directions

The paper opens several avenues for further research:

- Novel Training Objectives: Training objectives that explicitly target the encoding of syntactic-identifier relations need to be developed and tested, perhaps by augmenting training with more structured representations (e.g., abstract syntax trees).

- Architecture Alternatives: Future research should delve into alternative model architectures that can more effectively capture the deep syntactic and semantic nuances of code. Models that blend symbolic reasoning with deep learning could offer a promising path forward.

- Code Understanding Beyond Transformers: Given the limitations of the Transformer architecture highlighted in this paper, non-Transformer approaches, or hybrid methods that integrate symbolic logic, merit exploration.
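As an illustration of the structured representations mentioned in the first bullet, the sketch below uses Python's standard `ast` module to list, for each identifier, the syntactic constructs it appears directly under. It only shows the kind of syntax-identifier relation an abstract syntax tree makes explicit; it is not a training objective and not something the paper proposes. The helper name `identifier_contexts` is hypothetical.

```python
import ast


def identifier_contexts(source):
    """Map each identifier to the AST node types it occurs directly under."""
    tree = ast.parse(source)
    contexts = {}
    for parent in ast.walk(tree):
        for child in ast.iter_child_nodes(parent):
            if isinstance(child, ast.Name):
                contexts.setdefault(child.id, set()).add(type(parent).__name__)
    return contexts


source = "if x > y:\n    z = x\n"
contexts = identifier_contexts(source)
for name, parents in sorted(contexts.items()):
    print(name, sorted(parents))
```

Here `x` is linked to both a comparison and an assignment, which is exactly the kind of cross-category relation that a flat token sequence leaves implicit.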

In conclusion, this critical examination uncovers systematic limitations in current code-LLMs' ability to understand intricate code structures. Its methodology and findings underscore the need for innovation in both theoretical frameworks and practical implementations to arrive at more reliable code-understanding models.