The paper "LLMFactor: Extracting Profitable Factors through Prompts for Explainable Stock Movement Prediction" proposes a new approach to stock movement prediction using large language models (LLMs). It addresses the difficulty of applying LLMs to financial forecasting, a field that has traditionally relied on numerical time-series data rather than text analysis.

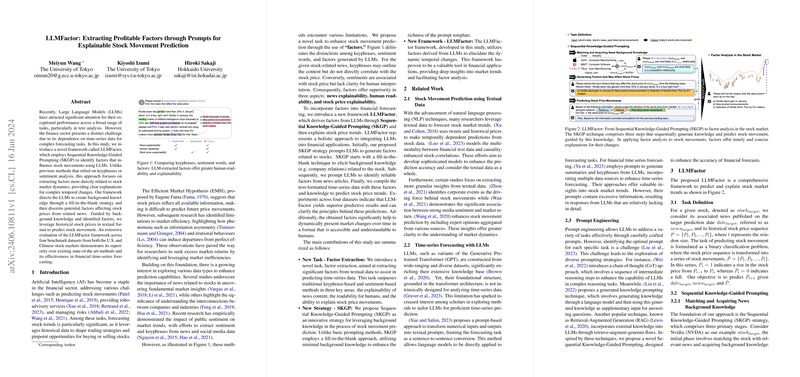

The authors introduce a framework named LLMFactor, which uses a technique called Sequential Knowledge-Guided Prompting (SKGP). The method focuses on identifying the factors that drive stock movements, aiming to capture market dynamics more directly than conventional sentiment analysis or keyphrase extraction.

Key elements of the LLMFactor framework include:

- Sequential Knowledge-Guided Prompting (SKGP): SKGP uses a sequence of structured prompts that guide the LLM, through a fill-in-the-blank strategy, to generate relevant background knowledge. This knowledge then helps the model identify factors in related news that may influence stock prices.

- Factor Extraction: Unlike sentiment- or keyphrase-based methods, the framework directly targets the extraction of market-relevant factors, which serve as explanations for changes in stock prices over time. Linking the extracted factors to stock movements makes the predictions more interpretable.

- Integration of Historical Data: The framework expresses historical stock prices as text and combines them with the identified factors to predict future stock movements. Feeding time-series data to an LLM in this way is a novel approach in financial forecasting.
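The three elements above can be sketched as a sequential prompt pipeline. This is a minimal sketch under stated assumptions, not the authors' implementation: the prompt wording, the `PricePoint` type, the helper names, and the `llm` callable (any function mapping a prompt string to a completion string) are all hypothetical, and no specific LLM API is assumed.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical LLM interface (assumption): takes a prompt, returns a completion.
LLM = Callable[[str], str]


@dataclass
class PricePoint:
    date: str     # e.g. "2024-01-02"
    close: float  # daily closing price


def prices_to_text(stock: str, prices: Sequence[PricePoint]) -> str:
    """Render day-over-day closing-price moves as plain sentences."""
    sentences = []
    for prev, cur in zip(prices, prices[1:]):
        if cur.close > prev.close:
            move = "rose"
        elif cur.close < prev.close:
            move = "fell"
        else:
            move = "was unchanged"
        sentences.append(f"On {cur.date}, the stock price of {stock} {move}.")
    return "\n".join(sentences)


def background_prompt(stock: str, related: str) -> str:
    # Step 1 (hypothetical wording): fill-in-the-blank prompt for background knowledge.
    return f"Fill in the blank: {stock} and {related} are ____."


def factor_prompt(stock: str, background: str, news: str, k: int) -> str:
    # Step 2 (hypothetical wording): extract top-k factors, guided by the background.
    return (
        f"Background: {background}\n"
        f"News: {news}\n"
        f"List the top {k} factors in the news that may affect "
        f"the stock price of {stock}, one per line."
    )


def prediction_prompt(stock: str, background: str, factors: List[str],
                      price_text: str, target_date: str) -> str:
    # Step 3 (hypothetical wording): combine factors, background, and price text.
    factor_lines = "\n".join(f"- {f}" for f in factors)
    return (
        f"Factors:\n{factor_lines}\n"
        f"Background: {background}\n"
        f"Price history:\n{price_text}\n"
        f"Fill in the blank (rise/fall): On {target_date}, "
        f"the stock price of {stock} will ____."
    )


def skgp_predict(llm: LLM, stock: str, related: str, news: str,
                 prices: Sequence[PricePoint], target_date: str,
                 k: int = 5) -> str:
    """Run the three prompts in sequence and return 'rise' or 'fall'."""
    background = llm(background_prompt(stock, related))
    raw = llm(factor_prompt(stock, background, news, k))
    # Keep non-empty lines, strip bullet markers, cap at k factors.
    factors = [ln.strip().lstrip("-*• ").strip()
               for ln in raw.splitlines() if ln.strip()][:k]
    answer = llm(prediction_prompt(stock, background, factors,
                                   prices_to_text(stock, prices), target_date))
    # Naive answer parsing (assumption): any mention of "rise" counts as a rise.
    return "rise" if "rise" in answer.lower() else "fall"
```

Making each step a separate call reflects the sequential structure of SKGP: the background generated first conditions factor extraction, and the extracted factors, together with the textual price history, condition the final prediction. A production version would need sturdier parsing of the model's answers.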

The paper evaluates LLMFactor on four benchmark datasets drawn from the U.S. and Chinese stock markets. The results show that LLMFactor outperforms existing state-of-the-art methods for financial time-series forecasting, suggesting it can improve both the accuracy and the interpretability of stock movement prediction.
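The summary does not name the evaluation metrics. As an assumption for illustration, binary rise/fall prediction is commonly scored with accuracy and the Matthews correlation coefficient (MCC), which stays informative when rise and fall days are imbalanced. A minimal sketch, assuming labels are the strings "rise" and "fall":

```python
import math
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of days whose predicted direction matches the actual one."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mcc(y_true: Sequence[str], y_pred: Sequence[str], positive: str = "rise") -> float:
    """Matthews correlation coefficient for binary rise/fall labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention: MCC is defined as 0 when any marginal count is zero.
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


actual = ["rise", "fall", "rise", "fall"]
predicted = ["rise", "fall", "fall", "fall"]
print(accuracy(actual, predicted))         # 0.75
print(round(mcc(actual, predicted), 4))    # 0.5774
```

A model that always predicts the majority direction can reach high accuracy on a skewed dataset while its MCC stays at 0, which is why the two are often reported together.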

Overall, this research contributes to the growing field of financial prediction by leveraging the advanced capabilities of LLMs and introducing a method that enhances both the accuracy and explainability of stock movement predictions.