Predicting Downstream Capabilities of Frontier AI Models with Scale

Predicting how the downstream capabilities of large AI models change with scale has remained an elusive challenge in AI research. The paper "Why Has Predicting Downstream Capabilities of Frontier AI Models with Scale Remained Elusive?" by Schaeffer et al. explores this topic in depth, identifying a new factor that affects how predictably these models perform on downstream tasks, particularly those measured with multiple-choice question-answering benchmarks.

Key Insights and Methodology

This paper primarily explores the relationship between pretraining scaling laws and downstream capability scaling laws. While pretraining performance scaling is well understood and typically follows predictable patterns, downstream performance, especially on multiple-choice tasks, often behaves unpredictably with scale. This unpredictability has been attributed to a variety of factors, but the paper identifies a critical, previously unexplored one: how probability mass is handled on the incorrect choices in multiple-choice formats.

The paper uses five different model families (Pythia, Cerebras-GPT, OLMo, INCITE, LLM360) and twelve widely-used benchmarks (such as ARC Easy and Hard, HellaSwag, MathQA, and more) to empirically investigate how performance metrics are computed and how predictability changes with scale.

Sequence of Transformations and Their Impact

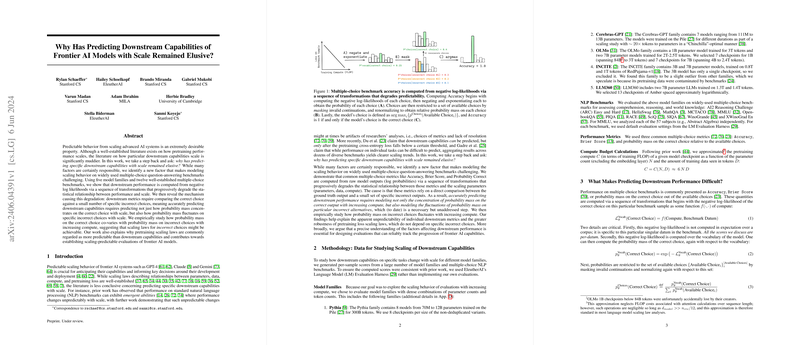

The authors elaborate on the sequence of transformations that model outputs undergo from raw logits to final performance metrics like Accuracy and Brier Score. They demonstrate that these transformations progressively degrade the statistical relationships between performance metrics and scaling variables (parameters, data, compute). Fundamentally, this degradation occurs because these performance metrics require not just a model's ability to identify the correct answers but also to appropriately distribute probability mass across incorrect choices.

For instance:

- Stage 1: Compute the negative log-likelihood of the correct choice (L_vocab).
- Stage 2: Transform it to probability mass on the correct choice (p_vocab(correct choice)).
- Stage 3: Restrict and renormalize probabilities to the set of available choices (p_choices(correct choice)).
- Stage 4: Calculate downstream performance metrics like Accuracy and Brier Score.
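The four stages can be sketched for a single question as follows. This is a minimal illustration rather than the authors' code: the function name `score_question`, its input format (one log-probability per answer choice, taken over the full vocabulary), and the multi-class form of the Brier Score are all assumptions made for the example.

```python
import math

def score_question(choice_logprobs, correct_idx):
    """Walk one question through the four stages (hypothetical helper).

    choice_logprobs: log-probability the model assigns to each answer
        choice, measured over the full vocabulary (assumed input format).
    correct_idx: index of the correct choice.
    """
    # Stage 1: negative log-likelihood of the correct choice (L_vocab).
    nll_vocab = -choice_logprobs[correct_idx]

    # Stage 2: probability mass on the correct choice, p_vocab(correct choice).
    p_vocab_correct = math.exp(-nll_vocab)

    # Stage 3: restrict to the available choices and renormalize.
    masses = [math.exp(lp) for lp in choice_logprobs]
    total = sum(masses)
    p_choices = [m / total for m in masses]

    # Stage 4: downstream metrics.
    predicted = max(range(len(p_choices)), key=p_choices.__getitem__)
    accuracy = 1.0 if predicted == correct_idx else 0.0
    # Assumed multi-class Brier Score: squared error against the one-hot target.
    brier = sum(
        (p - (1.0 if i == correct_idx else 0.0)) ** 2
        for i, p in enumerate(p_choices)
    )

    return {
        "nll_vocab": nll_vocab,
        "p_vocab_correct": p_vocab_correct,
        "p_choices_correct": p_choices[correct_idx],
        "accuracy": accuracy,
        "brier": brier,
    }
```

Note that only Stages 3 and 4 read the log-probabilities of the incorrect choices; Stages 1 and 2 depend on the correct choice alone.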

Each stage introduces complexity and potential noise, diluting the predictive power of the original log-likelihoods.

Empirical Findings

The key empirical findings show a consistent drop in correlation between compute and performance scores as we move through the transformations. When transforming raw log-likelihoods to probability masses for the correct choice, the predictability remains relatively high. However, once probabilities are normalized against incorrect choices, the degradation starts, and this effect is exacerbated in final performance metrics like Accuracy and Brier Score.
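One way to quantify this drop is to correlate a scaling variable with the scores at each stage. The sketch below uses Pearson correlation as one possible choice; the helper `pearson` and the toy numbers are purely illustrative assumptions and are not taken from the paper.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Toy values for one question across five hypothetical models (not real data).
log_compute = [1.0, 2.0, 3.0, 4.0, 5.0]
log_likelihood_correct = [-5.0, -4.0, -3.0, -2.0, -1.0]  # early-stage score
accuracy = [0.0, 0.0, 1.0, 0.0, 1.0]                     # final-stage score

corr_early = pearson(log_compute, log_likelihood_correct)
corr_final = pearson(log_compute, accuracy)
print(f"log-likelihood vs compute: {corr_early:.3f}")
print(f"accuracy vs compute:       {corr_final:.3f}")
```

In this toy setup the thresholded accuracy correlates less strongly with compute than the log-likelihood does, which mirrors the direction of the effect described above without reproducing any of the paper's measurements.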

As a result, predicting downstream performance requires predicting how probability mass on the incorrect choices co-varies with scale, which is a critical but challenging task. The paper highlights that for any given value of p_vocab(correct choice), the corresponding values of p_choices(incorrect choices) can vary significantly, affecting the final performance unpredictably.
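A small numeric example makes the point concrete. In the sketch below, the function `renormalize_and_score` and the probability values are hypothetical; the Brier Score again uses an assumed multi-class form. Both cases share the same p_vocab(correct choice) of 0.2 and differ only in how mass is spread over the incorrect choices.

```python
def renormalize_and_score(p_correct, p_incorrect):
    """Renormalize over the choices and compute Accuracy and Brier Score.

    p_correct: vocabulary-level mass on the correct choice.
    p_incorrect: vocabulary-level masses on each incorrect choice.
    """
    total = p_correct + sum(p_incorrect)
    p_choices_correct = p_correct / total
    p_choices_incorrect = [p / total for p in p_incorrect]
    # The answer counts as correct only if it outscores every incorrect choice.
    accuracy = 1.0 if p_choices_correct > max(p_choices_incorrect) else 0.0
    brier = (1.0 - p_choices_correct) ** 2 + sum(p ** 2 for p in p_choices_incorrect)
    return p_choices_correct, accuracy, brier

# Same mass on the correct choice, different mass on the incorrect ones.
case_a = renormalize_and_score(0.2, [0.1, 0.1])
case_b = renormalize_and_score(0.2, [0.3, 0.05])
print(case_a)
print(case_b)
```

In the first case the correct choice wins after renormalization; in the second, one incorrect choice holds more mass, so Accuracy flips to zero and the Brier Score worsens even though p_vocab(correct choice) is unchanged.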

Implications and Future Directions

This paper's insights have both practical and theoretical implications. Practically, understanding the mechanism by which downstream performance predictability degrades can inform better design and evaluation of AI systems, ensuring more stable and reliable performance metrics. Theoretically, these findings highlight the necessity to develop more robust models that can handle the inherent noise introduced by incorrect choices.

Notably, the paper suggests that focusing on metrics that directly derive from p_vocab(correct choice) may provide more reliable scaling trends. For practitioners seeking predictability, designing evaluation metrics considering these findings can help shape more accurate assessments of model capabilities.

Conclusion

The research underscores the intricacies involved in predicting the scaling behavior of downstream capabilities of AI models, particularly when evaluated through multiple-choice metrics. By elucidating the degradation process through a sequence of transformations, Schaeffer et al. provide a valuable framework for future investigations and methodologies aimed at enhancing the predictability and reliability of frontier AI model evaluations. These insights contribute significantly to the ongoing discourse on advancing the science of AI model scaling and evaluation.

Future work suggested by the authors includes exploring generative evaluations and observing whether transforming generative outputs introduces similar predictive challenges. Additionally, predicting benchmark performance a priori remains a significant challenge that warrants further detailed research. This paper lays solid groundwork for addressing these complex but crucial aspects of AI model development and evaluation.