Comprehensive Suite for Alignment in LLMs: Xwin-LM

Abstract

The paper presents Xwin-LM, an extensive suite of methodologies designed to enhance the alignment of LLMs. Specifically, the suite comprises supervised finetuning (SFT), reward modeling (RM), rejection sampling finetuning (RS), and direct preference optimization (DPO). The suite includes several key components: Xwin-LM-SFT, Xwin-Pair, Xwin-RM, Xwin-Set, Xwin-LM-RS, and Xwin-LM-DPO. Evaluations on AlpacaEval and MT-bench demonstrate marked improvements in model performance through the proposed pipeline, which is showcased as robust and scalable. The research community is encouraged to engage and contribute to the ongoing development through the provided repository.

Introduction

Recent strides in artificial intelligence have brought forth LLMs such as GPT-4 and Claude, which show strong capabilities across numerous applications. A significant challenge remains in aligning these models with human values and expectations. Reinforcement Learning from Human/AI Feedback (RLHF/RLAIF) has emerged as a promising solution. Nevertheless, its complexity and resource requirements pose substantial barriers.

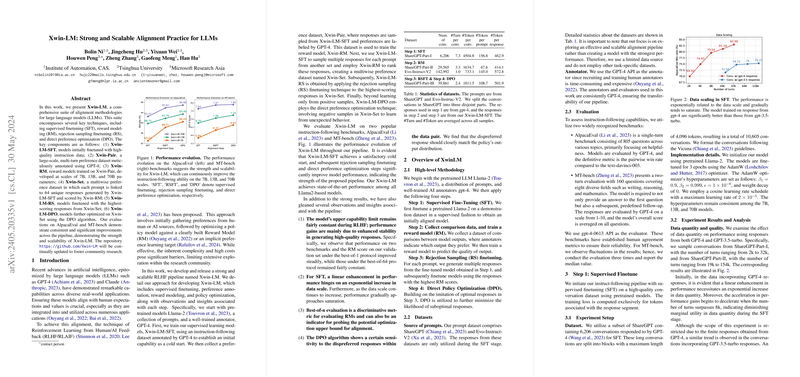

Xwin-LM seeks to address these challenges by constructing a robust RLHF pipeline. Utilizing supervised finetuning, preference annotation, reward modeling, and policy optimization, Xwin-LM transforms pretrained models like Llama-2 into highly aligned versions. This paper systematically outlines the steps and evaluates the performance improvements through benchmarks.

High-Level Methodology

The creation of Xwin-LM involves a four-step process commencing with a pretrained LLM, a distribution of prompts, and a well-trained annotator, GPT-4.

- Supervised Fine-Tuning (SFT): Initial finetuning of a pretrained Llama-2 using a demonstration dataset results in the baseline aligned model, Xwin-LM-SFT.

- Reward Modeling (RM): A dataset of preference comparisons is amassed, followed by training a reward model to predict the quality of outputs.

- Rejection Sampling Finetuning (RS): Multiple responses are generated per prompt, and models are finetuned using the highest RM-scored responses.

- Direct Preference Optimization (DPO): Improves the model further by training directly on preference pairs, lowering the likelihood of dispreferred responses relative to preferred ones.
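
The rejection sampling step can be sketched as a selection over sampled candidates. This is a minimal illustration, not the authors' implementation: `select_best_responses` and `toy_reward` are hypothetical names, and the toy reward (which simply prefers longer answers) stands in for a trained reward model such as Xwin-RM.

```python
from typing import Callable, Dict, List


def select_best_responses(
    candidates: Dict[str, List[str]],
    reward_fn: Callable[[str, str], float],
) -> Dict[str, str]:
    """For each prompt, keep the candidate with the highest reward score."""
    best = {}
    for prompt, responses in candidates.items():
        if not responses:
            continue  # nothing was sampled for this prompt
        best[prompt] = max(responses, key=lambda r: reward_fn(prompt, r))
    return best


# Hypothetical stand-in for a trained reward model: prefers longer answers.
def toy_reward(prompt: str, response: str) -> float:
    return float(len(response))


samples = {
    "What is RLHF?": ["A method.", "Reinforcement learning from human feedback."],
    "Name a benchmark.": ["MT-bench", "n/a"],
}
# The selected (prompt, response) pairs would serve as finetuning targets.
sft_targets = select_best_responses(samples, toy_reward)
```

In practice the candidates would be sampled from the SFT policy and the selected pairs fed back into a standard supervised finetuning loop.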

Dataset and Implementation

The prompts are drawn from ShareGPT and Evol-Instruct-V2. The GPT-4 API serves as both annotator and evaluator throughout, which keeps the alignment pipeline consistent and easy to transfer. Evaluations use two recognized benchmarks: AlpacaEval and MT-bench.

- AlpacaEval: A single-turn benchmark with questions across various topics, scored by GPT-4 with a pairwise win rate metric.

- MT-bench: A two-turn evaluation covering diverse fields, scored on a scale of 1-10 by GPT-4, with the median value reported over three evaluations.
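
The two metrics reduce to simple aggregations, sketched below. The function names are hypothetical, the numbers are illustrative rather than reported results, and counting a tie as half a win is an assumption the summary does not state.

```python
from statistics import median
from typing import List


def win_rate(outcomes: List[float]) -> float:
    """Pairwise win rate in percent; each outcome is 1.0 (win), 0.5 (tie), or 0.0 (loss)."""
    if not outcomes:
        raise ValueError("no comparisons to score")
    return 100.0 * sum(outcomes) / len(outcomes)


def mt_bench_score(run_scores: List[float]) -> float:
    """Median over repeated evaluation runs, each run being a mean 1-10 score."""
    return median(run_scores)


alpaca_outcomes = [1.0, 0.0, 1.0, 1.0]  # illustrative judgments, not real results
mt_runs = [6.8, 7.1, 6.9]               # illustrative run scores, not real results

print(win_rate(alpaca_outcomes))  # 75.0
print(mt_bench_score(mt_runs))    # 6.9
```

Reporting the median rather than the mean makes the MT-bench figure less sensitive to a single outlying judge run.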

Experimental Setup and Results

- Supervised Finetuning (SFT): Utilizing a dataset from ShareGPT, the models are finetuned for three epochs.

- Result: Performance improves roughly linearly while the data scale grows exponentially, and data quality matters more than quantity.

- Reward Modeling (RM): A preference dataset named Xwin-Pair is created, consisting of fine-grained ratings.

- Result: Larger reward models demonstrated higher accuracy and generalizability. The best-of-n evaluation aligned well with GPT-4 judgments.

- Rejection Sampling Finetuning (RS): This approach is evaluated using top-performing responses.

- Result: Higher-ranked samples consistently yielded better performance with gains plateauing beyond a sample size of 32.

- Direct Preference Optimization (DPO): Updates the policy directly from preference pairs using the DPO method.

- Result: Showed optimal performance when dispreferred samples closely mirrored the policy’s output distribution.
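
For reference, the per-pair DPO objective in its commonly published form can be sketched as follows. The summary does not give the formula, so this assumes the standard objective; `dpo_loss` is a hypothetical name and `beta = 0.1` is an illustrative value, not a setting taken from the paper.

```python
import math


def dpo_loss(
    policy_logp_chosen: float,
    policy_logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,  # illustrative value, assumed for this sketch
) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # Log-ratio of policy to frozen reference for each response
    chosen_margin = policy_logp_chosen - ref_logp_chosen
    rejected_margin = policy_logp_rejected - ref_logp_rejected
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) equals softplus(-z), computed in a numerically stable form
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))


# When the policy equals the reference, the loss is log(2).
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)
# Raising the chosen response and lowering the rejected one reduces the loss.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)
```

The loss depends only on how the policy's log-probabilities move relative to the reference model, which is one way to see why dispreferred samples far from the policy's own distribution carry little training signal.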

Insights and Observations

- Consistency in Capability: The upper capability limit remains fairly constant during RLHF, with gains arising primarily from more stable response quality.

- Performance Saturation: Performance gains decelerate with increased data scale, approaching a saturation point.

- Evaluation Metrics: Best-of-n evaluation serves as an effective metric for evaluating reward models and the optimization upper bound.

- DPO Sensitivity: The DPO algorithm's effectiveness hinges on how closely the dispreferred responses match the policy's output distribution.
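
A best-of-n evaluation can be sketched as follows: for each prompt, the reward model picks its top response among the first n samples, and a judge scores that pick. This is a simplified illustration under stated assumptions. `best_of_n_score`, `judge`, and `perfect_rm` are hypothetical names, and the toy judge reads a quality score directly from the response string, where the paper uses GPT-4.

```python
from typing import Callable, List, Tuple


def best_of_n_score(
    prompts: List[Tuple[str, List[str]]],
    reward_fn: Callable[[str, str], float],
    judge_fn: Callable[[str, str], float],
    n: int,
) -> float:
    """Mean judge score of the response the reward model ranks first among n samples."""
    total = 0.0
    for prompt, responses in prompts:
        pool = responses[:n]  # only the first n samples are considered
        picked = max(pool, key=lambda r: reward_fn(prompt, r))
        total += judge_fn(prompt, picked)
    return total / len(prompts)


# Toy setup: each response string encodes its own quality score.
def judge(prompt: str, response: str) -> float:
    return float(response)


perfect_rm = judge  # a reward model that agrees with the judge exactly

data = [("p1", ["2", "9", "5", "7"]), ("p2", ["4", "3", "8", "6"])]
for n in (1, 2, 4):
    print(n, best_of_n_score(data, perfect_rm, judge, n))
```

With a reward model that agrees with the judge, the score rises with n and traces the optimization upper bound; a weaker reward model would fall below that curve.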

Conclusion and Limitations

Xwin-LM establishes a robust, scalable pipeline for enhancing LLM alignment. The paper contributes methodologies and evaluations that are confirmed on benchmarks. Nonetheless, certain limitations persist: multi-turn capabilities are not explicitly addressed, and the reliance on self-generated data introduces hallucination risks. Further, the instability of GPT-4 annotations and evaluations introduces variability.

Advancing this research will involve refining the alignment techniques, exploring diverse data sources, and building robust evaluation mechanisms that foster the development of reliable and aligned LLMs.