Intelligent Go-Explore: Leveraging Giant Foundation Models for Enhanced Exploration Capabilities

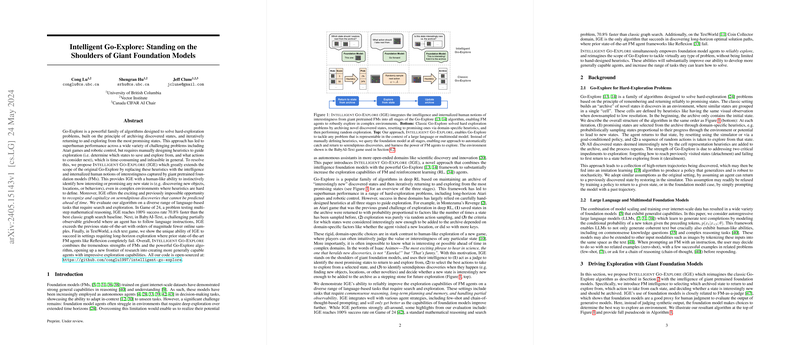

The paper "Intelligent Go-Explore: Standing on the Shoulders of Giant Foundation Models" introduces a method for improving the exploration capabilities of reinforcement learning (RL) agents. Building on the Go-Explore framework, it draws on the intelligence and human-like reasoning of large pretrained foundation models (FMs) to tackle hard-exploration problems more effectively. Its central change to classical Go-Explore is to replace manually designed heuristics with the adaptive, contextual judgment of an FM.

Overview of Go-Explore and Its Limitations

Go-Explore is a well-established family of deep RL algorithms that excels at complex exploration problems such as Atari games and robotic control. Its core mechanism is to archive novel states and then iteratively return to, and explore from, the most promising ones. Classical Go-Explore relies heavily on domain-specific heuristics to decide which archived state to return to, which actions to take while exploring, and which newly discovered states to archive.

However, the necessity of manually designing these heuristics poses substantial limitations, making the approach impractical or infeasible for more complex and less well-defined problems. Human players, in contrast, possess an intuitive sense of state interestingness and potential, which classical Go-Explore lacks.
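To make the hand-designed heuristics described above concrete, here is a minimal sketch of a classical Go-Explore loop on a toy problem. Everything in it is an illustrative assumption rather than the original implementation: the 20x20 grid environment, the 4x4 cell downscaling, the `1/sqrt(visits + 1)` selection weight, and the names `go_explore`, `cell_of`, and `Entry`. Each comment marked "hand-designed heuristic" flags a decision that a human had to choose for this specific domain.

```python
import math
import random
from dataclasses import dataclass

# Toy deterministic environment (hypothetical): a 20x20 grid where the state is
# the agent's (x, y) position, so "returning" to an archived state is a restore.
GRID = 20
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]

def step(state, action):
    x, y = state
    dx, dy = action
    return (min(max(x + dx, 0), GRID - 1), min(max(y + dy, 0), GRID - 1))

def cell_of(state):
    # Hand-designed heuristic: downscale positions into coarse 4x4 cells.
    x, y = state
    return (x // 4, y // 4)

@dataclass
class Entry:
    state: tuple
    trajectory_len: int
    visits: int = 0

def go_explore(iterations=200, explore_steps=10, seed=0):
    rng = random.Random(seed)
    start = (0, 0)
    archive = {cell_of(start): Entry(start, 0)}
    for _ in range(iterations):
        # Hand-designed heuristic: favor rarely chosen cells via count-based weights.
        cells = list(archive)
        weights = [1.0 / math.sqrt(archive[c].visits + 1) for c in cells]
        chosen = archive[rng.choices(cells, weights=weights, k=1)[0]]
        chosen.visits += 1
        # "Go": restore the archived state directly (the environment is deterministic).
        state, length = chosen.state, chosen.trajectory_len
        for _ in range(explore_steps):
            # Hand-designed heuristic: explore with uniformly random actions.
            state = step(state, rng.choice(ACTIONS))
            length += 1
            cell = cell_of(state)
            existing = archive.get(cell)
            # Hand-designed heuristic: archive new cells, or keep the shorter path.
            if existing is None:
                archive[cell] = Entry(state, length)
            elif length < existing.trajectory_len:
                existing.state, existing.trajectory_len = state, length
    return archive
```

Calling `go_explore()` returns a dictionary from cells to archive entries. Every one of the four marked choices would have to be redesigned for a new domain, which is the burden IGE aims to remove.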

Introduction to Intelligent Go-Explore (IGE)

Intelligent Go-Explore (IGE) extends the Go-Explore framework by incorporating the sophisticated reasoning capabilities of giant foundation models. These models, trained on vast internet-scale datasets, can understand and contextualize complex state information, which allows them to:

- Select Promising States: IGE uses the FM to choose the most promising states to return to from the archive, leveraging its internalized notions of interest and value.

- Select Actions: Instead of relying on random action sampling, IGE queries the FM to determine the best actions to explore from a given state.

- Archive New States: The FM also judges whether newly discovered states are sufficiently interesting to be archived, thereby recognizing and prioritizing serendipitous discoveries.

By employing FM intelligence in these stages, IGE automates the exploration process, reducing the need for domain-specific heuristics and enabling more effective exploration in previously challenging environments.
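The three FM-driven stages listed above can be sketched as a single loop. This is a hedged illustration, not the paper's code or prompts: the `FoundationModel` interface and its method names are hypothetical, the toy "reach 37" text environment is invented for the example, and `StubFM` is a simple distance-based stand-in so the sketch runs offline. In IGE itself, each of these calls would be a query to a large pretrained model that receives text descriptions of the goal, the archive, and the current state.

```python
from typing import Protocol

# Hypothetical interface: IGE makes these three decisions by prompting a
# foundation model with text; the method names here are illustrative only.
class FoundationModel(Protocol):
    def select_state(self, archive: list[str], goal: str) -> int: ...
    def select_action(self, state: str, actions: list[str], goal: str) -> int: ...
    def is_interesting(self, new_state: str, archive: list[str], goal: str) -> bool: ...

# Toy text environment (hypothetical): starting from 1, reach TARGET.
TARGET = 37
ACTIONS = {"add 1": lambda n: n + 1, "add 3": lambda n: n + 3, "double": lambda n: n * 2}

def describe(n: int) -> str:
    return f"current value: {n}"

def parse(description: str) -> int:
    return int(description.split(": ")[1])

class StubFM:
    """Offline stand-in for an LLM: it greedily scores states by distance to TARGET."""

    def select_state(self, archive, goal):
        return min(range(len(archive)), key=lambda i: abs(TARGET - parse(archive[i])))

    def select_action(self, state, actions, goal):
        n = parse(state)
        return min(range(len(actions)), key=lambda i: abs(TARGET - ACTIONS[actions[i]](n)))

    def is_interesting(self, new_state, archive, goal):
        return new_state not in archive and parse(new_state) <= TARGET

def intelligent_go_explore(fm: FoundationModel, iterations=20, explore_steps=3):
    goal = f"reach the value {TARGET}"
    archive = [describe(1)]
    action_names = list(ACTIONS)
    for _ in range(iterations):
        # 1. The FM picks a promising archived state to return to.
        state = archive[fm.select_state(archive, goal)]
        for _ in range(explore_steps):
            # 2. The FM picks the next action to explore from the current state.
            name = action_names[fm.select_action(state, action_names, goal)]
            state = describe(ACTIONS[name](parse(state)))
            # 3. The FM judges whether the new state is worth archiving.
            if fm.is_interesting(state, archive, goal):
                archive.append(state)
            if parse(state) == TARGET:
                return archive, True
    return archive, False
```

Running `archive, solved = intelligent_go_explore(StubFM())` solves the toy task. The design point is that the loop itself contains no domain knowledge: swapping `StubFM` for a real model changes how states, actions, and archive entries are judged without touching the loop.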

Empirical Evaluation

IGE's efficacy is demonstrated across a variety of text-based environments that require exploration and search:

- Game of 24: This mathematical reasoning task requires the agent to combine numbers with arithmetic operations to reach a target number (24). IGE reached a 100% success rate 70.8% faster than the best graph search baseline, showing that it can put the FM's intuitive problem-solving skills to effective use.

- BabyAI-Text: This environment is a partially observable gridworld in which agents follow language instructions. IGE outperformed the previous state-of-the-art methods with significantly fewer online samples, excelling in particular on the more complex tasks that involve sequential and temporal reasoning.

- TextWorld: In this text-based game environment, which requires long-horizon exploration and commonsense reasoning, IGE solved tasks with complex state transitions and partial observability. Notably, IGE was the only algorithm to find optimal solutions in the Coin Collector domain.
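The Game of 24 setting above can be framed as a search problem: a state is the multiset of numbers still available, and an action picks two of them and replaces them with the result of one arithmetic operation. The sketch below makes that state and action space concrete with an exhaustive depth-first search. It is a plain brute-force solver written for illustration (the names `successors` and `solve_24` are hypothetical), not the graph search baseline from the paper; IGE would instead have an FM choose which partial results to revisit and which combinations to try.

```python
from fractions import Fraction
from itertools import combinations

def successors(state):
    # A state is a tuple of (value, expression) pairs; an action picks two
    # of them and combines them with one arithmetic operation.
    for i, j in combinations(range(len(state)), 2):
        (a, ea), (b, eb) = state[i], state[j]
        rest = tuple(state[k] for k in range(len(state)) if k not in (i, j))
        candidates = [(a + b, f"({ea}+{eb})"), (a * b, f"({ea}*{eb})"),
                      (a - b, f"({ea}-{eb})"), (b - a, f"({eb}-{ea})")]
        if b != 0:
            candidates.append((a / b, f"({ea}/{eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb}/{ea})"))
        for value, expr in candidates:
            yield rest + ((value, expr),)

def solve_24(numbers, target=24):
    # Exhaustive depth-first search; Fraction keeps division exact.
    stack = [tuple((Fraction(n), str(n)) for n in numbers)]
    while stack:
        state = stack.pop()
        if len(state) == 1:
            if state[0][0] == target:
                return state[0][1]
            continue
        stack.extend(successors(state))
    return None
```

For example, `solve_24([4, 9, 10, 13])` returns an expression string that evaluates to 24 (one valid answer is `(10-4)*(13-9)`), while `solve_24([1, 1, 1, 1])` returns `None`. With four numbers the tree has only a few thousand leaves, so exhaustive search is cheap here; the interest in IGE lies in domains where such enumeration is not feasible.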

Analysis and Implications

The paper's analysis shows that FM intelligence matters at different stages of Go-Explore, with substantial performance improvements when FM-based decision-making is used at those stages. Integrating FMs also significantly reduces the archive size by filtering out uninteresting states, which concentrates computational resources on the states most worth exploring.

Future Directions and Speculations

The research opens new avenues for advancing autonomous agents across diverse and complex domains. As foundation models continue to improve, the capabilities and performance of IGE are expected to scale correspondingly. Future work could explore multimodal environments and expand the scope of applications to scientific discovery and innovation.

Conclusion

Intelligent Go-Explore represents a significant step forward in enhancing exploration capabilities through the integration of foundation models. By reducing reliance on manually designed heuristics and leveraging the contextual understanding of FMs, IGE offers a robust, scalable, and efficient approach to solving hard-exploration problems in RL. This work lays a strong foundation for future research into more generally capable and intelligent autonomous agents.