Unimodal Models in Vision-Language-Action Tasks

This paper surveys the key unimodal models used in vision-language-action (VLA) frameworks. By tracing the evolution of computer vision, natural language processing (NLP), and reinforcement learning (RL) models, it identifies the foundational components that are combined to build VLA systems.

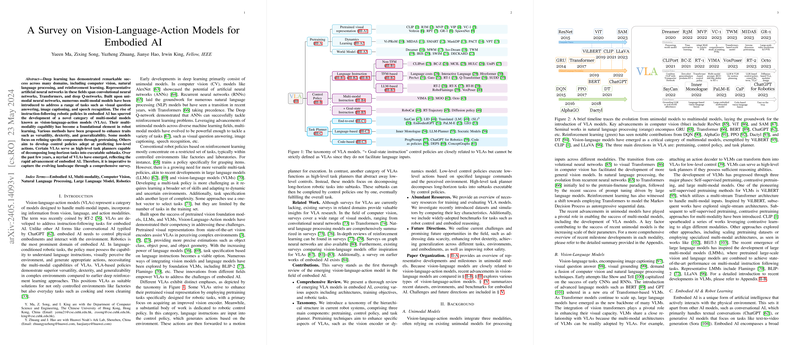

Computer Vision

Computer vision (CV) has advanced considerably since the early convolutional neural networks (CNNs), which focused on tasks such as image classification. Milestones include LeNet, AlexNet, VGG, GoogLeNet, ResNet, Inception-ResNet, ResNeXt, SENet, and EfficientNet, which introduced ideas such as deeper networks, inception blocks, skip connections, and attention mechanisms. Object detection models build on these CNN backbones and fall into region-based methods (e.g., R-CNN, Fast R-CNN, Faster R-CNN, Mask R-CNN) and grid-based methods such as YOLO. Segmentation models such as FCN, SegNet, and U-Net, followed by Transformer-based vision models such as ViT and DETR, extended these capabilities to finer-grained visual tasks. Vision models also exploit depth maps, point clouds, and volumetric data to improve 3D understanding, which is crucial for robotic applications.
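Of the mechanisms listed above, the skip connection is the simplest to illustrate. The following is a minimal sketch, not code from the paper: it assumes a single dense layer as a stand-in for the convolutional layers of a real residual block, and the names `linear`, `relu`, and `residual_block` are hypothetical.

```python
def linear(x, weights, bias):
    """Dense layer: one output per row of `weights` (toy stand-in for convolutions)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def residual_block(x, weights, bias):
    """Compute relu(F(x) + x): the input is added back to the layer output."""
    fx = linear(x, weights, bias)
    return relu([f + xi for f, xi in zip(fx, x)])


# With all-zero weights, F(x) is zero and the block passes
# non-negative inputs through unchanged.
x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3
out = residual_block(x, zero_w, zero_b)
```

Because the input is added back to the layer output, the block only has to learn a correction to the identity mapping, which is the usual explanation for why very deep residual networks remain trainable.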

Natural Language Processing

NLP is a core component of VLA models, enabling language comprehension and generation. The paper traces NLP progress from early computational linguistics to models built on the Transformer architecture. Early developments involved hierarchical language processing, while later work adopted recurrent neural networks (RNNs) such as LSTM and GRU, which were pivotal in early neural NLP systems. The introduction of Transformer-based models, particularly the BERT and GPT families, marked a significant shift. These models rely on the self-attention mechanism, which lets each token attend directly to every other token in the sequence, and they improved on recurrent models in both efficiency and accuracy. Large language models (LLMs) such as GPT-3 and GPT-4 extend these capabilities further, broadening the range of tasks that can be handled through instruction following.
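The self-attention mechanism mentioned above can be sketched in a few lines. This is an illustrative simplification under stated assumptions: it implements single-head scaled dot-product attention on plain Python lists and omits the learned query, key, and value projections and the multi-head structure of real Transformers. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
attended = self_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[2.0, 0.0], [4.0, 2.0]],
)
```

Each output row depends on all positions at once, so the computation for different queries can run in parallel, in contrast to the step-by-step processing of an RNN.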

Reinforcement Learning

Reinforcement learning (RL) underpins the decision-making component of VLA systems. The paper highlights breakthroughs such as Deep Q-Networks (DQN) and AlphaGo, along with value-based approaches such as Double DQN and BCQ that address stability and efficiency in policy learning. Policy search methods, including policy gradient and actor-critic techniques (e.g., DDPG, A3C, TRPO, PPO), have made RL more applicable to robotic learning through improved data efficiency and stability. Generalist models such as Gato extend these paradigms to multi-task, multi-modal settings, underscoring RL’s versatility. The paper also singles out robotics-focused RL work such as E2E-DVP and Dreamer, which demonstrate practical progress on real-world robotic control tasks.
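To make the difference between DQN and Double DQN concrete, the sketch below computes the bootstrap target for a single transition under each method. It is a simplified illustration that assumes Q-values are already available as plain lists indexed by action; the function and parameter names are hypothetical and do not come from the paper.

```python
def dqn_target(reward, next_q_target, gamma, done):
    """DQN target: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Double DQN target: the online network selects the action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]


# Example transition with three actions in the next state.
next_q_online = [1.0, 3.0, 2.0]   # online network prefers action 1
next_q_target = [4.0, 2.0, 5.0]   # target network's estimates

y_dqn = dqn_target(1.0, next_q_target, gamma=0.5, done=False)
y_double = double_dqn_target(1.0, next_q_online, next_q_target, gamma=0.5, done=False)
```

Decoupling action selection from action evaluation reduces the overestimation bias that arises when a single noisy estimate is both maximized over and used as the value, which is the stability issue Double DQN targets.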

Integrated Approaches and Future Directions

Combining unimodal models for vision, language, and action into unified VLA systems reflects the convergence of these fields. This integration enables robots to recognize objects and scenes visually, interpret linguistic commands, and execute appropriate actions. The paper outlines representative VLA models and benchmarks, examining multi-stream, single-stream, and multi-modal pretraining methodologies. Benchmark comparisons illustrate the capabilities and performance of these integrated models.

Future research in VLA systems is likely to focus on:

- Enhancing multi-modal pretraining datasets and methodologies.

- Leveraging LLMs for improved linguistic comprehension and interaction.

- Exploring advanced RL algorithms to refine action policies in dynamic environments.

- Innovating in network architectures to achieve more efficient training and inference.

The convergence of these developments will continue to drive the evolution of intelligent, adaptable robotic systems capable of intricate interactions within their operational environments.

In conclusion, the paper presents a detailed and structured examination of developments in unimodal models, laying the groundwork for the continued advancement of integrated VLA systems. The overview is a useful reference for researchers and practitioners working on intelligent robotic systems.