Exploring MoRA: A High-Rank Alternative to LoRA for Fine-Tuning LLMs

How we fine-tune LLMs can significantly affect performance, especially as the models themselves keep growing in size. A recently proposed method called MoRA offers a potentially more effective way to fine-tune LLMs than the widely used LoRA. Let's break down how MoRA works, how it stacks up against LoRA, and what it might mean for the future of AI research.

How Do LoRA and MoRA Differ?

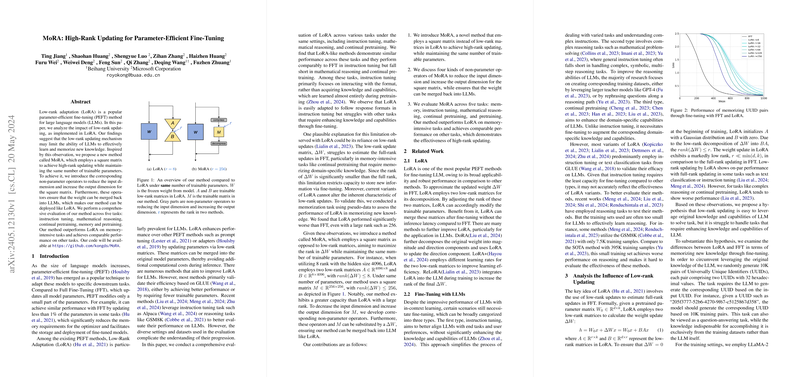

LoRA, or Low-Rank Adaptation, keeps the pretrained weights of the LLM frozen and learns the weight update as the product of two low-rank matrices. Because only these two small matrices are trained, it can fine-tune the model using significantly less memory. The catch? This low-rank nature can limit performance in tasks that require the model to learn and memorize a lot of new information.
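To make the idea concrete, here is a minimal NumPy sketch of a LoRA-style layer. The names `W`, `A`, `B` and the toy sizes are assumptions chosen for illustration, and the zero initialization of `B` follows common LoRA practice rather than anything stated in this article.

```python
import numpy as np

rng = np.random.default_rng(0)

d, k, r = 64, 48, 4            # toy sizes (assumed); real layers are far larger

W = rng.normal(size=(d, k))    # pretrained weight, kept frozen
A = rng.normal(size=(r, k))    # trainable low-rank factor, shape (r, k)
B = np.zeros((d, r))           # trainable low-rank factor, shape (d, r); zero so the update starts at 0

def lora_forward(x):
    """Compute x @ (W + B @ A).T without building the full d x k update."""
    return x @ W.T + (x @ A.T) @ B.T

x = rng.normal(size=(2, k))    # a batch of two input vectors
y = lora_forward(x)

full_params = W.size           # d * k values in the frozen weight
lora_params = A.size + B.size  # r * (d + k) trainable values
print(full_params, lora_params)
```

Only `A` and `B` would receive gradients during training, which is where the memory saving comes from.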

Enter MoRA. Instead of sticking to low-rank matrices, MoRA employs a square matrix, achieving higher-rank updates without increasing the number of trainable parameters. This approach allows for more extensive updating and helps overcome some of the limitations seen with LoRA.
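The "same number of trainable parameters" claim can be checked with simple arithmetic. The sketch below assumes a layer of shape d x k and picks the largest square matrix that fits within a LoRA budget of r * (d + k) parameters. The helper names and the example sizes are hypothetical.

```python
import math

def lora_param_count(d, k, r):
    """Trainable values in LoRA's two factors: (d x r) plus (r x k)."""
    return r * (d + k)

def square_side_for_budget(d, k, r):
    """Largest integer side r_hat with r_hat**2 <= r * (d + k)."""
    return math.isqrt(lora_param_count(d, k, r))

d = k = 4096                   # assumed layer size, for illustration only
for r in (8, 64, 256):
    r_hat = square_side_for_budget(d, k, r)
    print(r, lora_param_count(d, k, r), r_hat, r_hat ** 2)
```

For d = k = 4096 and r = 8, the budget is 65,536 parameters, which fits a 256 x 256 square matrix exactly. A square matrix of that size can reach rank 256, while the LoRA update is capped at rank 8.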

Key Concepts and Methods

Non-parameter Operators: One of MoRA's key innovations is the use of non-parameter operators to modify the input and output dimensions of its square matrix. These operators carry no trainable weights: one reduces the input to the size the square matrix expects, and another expands the result back to the layer's output size, so the square matrix can be used without enlarging the parameter budget.
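This article does not spell out which operators are used, so the following is only one possible illustration: the input is zero-padded and split into chunks of length `r_hat`, the same square matrix `M` is applied to every chunk, and the chunks are flattened back to the output size. The function names `compress`, `decompress` and `square_update` are hypothetical.

```python
import numpy as np

def compress(x, r_hat):
    """Zero-pad the last axis to a multiple of r_hat and split it into chunks."""
    n, k = x.shape
    pad = (-k) % r_hat
    x = np.pad(x, ((0, 0), (0, pad)))
    return x.reshape(n, -1, r_hat)          # shape (n, chunks, r_hat)

def decompress(h, d):
    """Flatten the chunks into one axis, then zero-pad or truncate to length d."""
    n = h.shape[0]
    flat = h.reshape(n, -1)
    if flat.shape[1] < d:
        flat = np.pad(flat, ((0, 0), (0, d - flat.shape[1])))
    return flat[:, :d]

def square_update(x, M, d):
    """Apply the same r_hat x r_hat matrix M to every chunk of x."""
    chunks = compress(x, M.shape[0])
    return decompress(chunks @ M.T, d)

rng = np.random.default_rng(0)
k, d, r_hat = 10, 12, 4                     # toy sizes (assumed)
M = rng.normal(size=(r_hat, r_hat))         # the only trainable values
x = rng.normal(size=(3, k))
delta = square_update(x, M, d)
print(delta.shape)
```

Neither `compress` nor `decompress` has anything to train. They only rearrange values, which is what makes them "non-parameter" in this sketch.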

High-rank Updating: MoRA uses a single square matrix to allow high-rank updates, while LoRA uses two low-rank matrices whose product can never exceed their shared rank. With the same parameter budget, MoRA's update can therefore reach a higher rank, giving it greater capacity to store new information, especially in memory-intensive tasks.
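The rank gap is easy to observe numerically. The sketch below uses random matrices as stand-ins for trained weights (an assumption made purely for illustration) and compares the rank of a LoRA-style product with that of a square matrix holding the same number of values.

```python
import math
import numpy as np

rng = np.random.default_rng(0)
d = k = 64                                  # toy layer size (assumed)
r = 2                                       # LoRA rank
r_hat = math.isqrt(r * (d + k))             # side of a square matrix with the same budget

# LoRA: product of (d, r) and (r, k) factors, so its rank can never exceed r
B = rng.normal(size=(d, r))
A = rng.normal(size=(r, k))
lora_rank = np.linalg.matrix_rank(B @ A)

# Square matrix with the same number of trainable values
M = rng.normal(size=(r_hat, r_hat))
square_rank = np.linalg.matrix_rank(M)

print("trainable values:", B.size + A.size, M.size)
print("ranks:", lora_rank, square_rank)
```

Both options spend the same budget, but the low-rank product is limited to rank `r`, while the square matrix can use up to `r_hat` independent directions.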

Experiments and Results

The researchers evaluated the performance of MoRA and LoRA across five key tasks: instruction tuning, mathematical reasoning, continual pretraining, memory, and general pretraining. Here are some notable findings:

- Memory-intensive Tasks: MoRA outperformed LoRA significantly in tasks that required memorization, such as memorizing UUID pairs. In this experiment, MoRA reached 100% character-level accuracy much faster than LoRA.

- Continual Pretraining: In continual pretraining tasks, MoRA showed a clear advantage over LoRA. When adapting LLMs to domain-specific knowledge (e.g., biomedicine or finance), MoRA's high-rank updates resulted in better performance.

- Mathematical Reasoning: For tasks like solving math problems, a higher rank (256) gave both MoRA and LoRA an edge, but MoRA still managed to squeeze out better results.
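The UUID memorization experiment mentioned above can be pictured with a small sketch. The data generation and the exact definition of character-level accuracy below are assumptions for illustration, not the researchers' actual evaluation code.

```python
import uuid

def make_uuid_pairs(n):
    """Return n random (key, value) UUID string pairs to be memorized."""
    return [(str(uuid.uuid4()), str(uuid.uuid4())) for _ in range(n)]

def char_accuracy(predictions, targets):
    """Fraction of target characters reproduced at the correct position."""
    correct = total = 0
    for pred, tgt in zip(predictions, targets):
        total += len(tgt)
        correct += sum(p == t for p, t in zip(pred, tgt))
    return correct / total if total else 0.0

pairs = make_uuid_pairs(4)
targets = [value for _, value in pairs]

# Stand-ins for model outputs: one perfect, one with a wrong first character.
perfect = list(targets)
flawed = ["g" + t[1:] for t in targets]     # 'g' never appears in a hex UUID

print(char_accuracy(perfect, targets))
print(char_accuracy(flawed, targets))
```

Because random UUIDs have no pattern to generalize from, a model can only score well here by storing the pairs, which is why the task isolates memorization capacity.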

Practical Implications and Future Prospects

The introduction of MoRA suggests a strong move towards more efficient and effective fine-tuning techniques for LLMs. Here are some potential implications:

- Improved Performance: For future AI systems that need to operate in specialized domains or handle complex, multi-step reasoning tasks, MoRA offers a promising solution. Its high-rank updating can better capture and retain the necessary details.

- Memory Efficiency: Because MoRA maintains the same number of trainable parameters as LoRA but uses them more effectively, LLMs can still be fine-tuned with lower memory overhead and computational cost than full fine-tuning.

- Scalability: This method opens doors for further scaling LLMs without a proportional increase in the resources required for fine-tuning, making it easier to deploy these advanced models in more practical settings.

Conclusion

While LoRA has been a popular method for parameter-efficient fine-tuning of LLMs, its low-rank nature can sometimes limit performance. MoRA addresses these limitations by introducing high-rank updates via a novel use of square matrices and non-parameter operators. This research shows that MoRA not only matches but frequently surpasses the performance of LoRA, especially in memory-intensive and more specialized tasks. As AI continues to advance, methods like MoRA could prove pivotal in making these sophisticated models more effective and broadly usable.

With this research paving the way, we're likely to see further developments that refine and expand on these concepts, ensuring AI remains both innovative and practical.