Realistic Evaluation of Toxicity in LLMs

The paper "Realistic Evaluation of Toxicity in LLMs" examines the propensity of LLMs to generate toxic content, emphasizes the limitations of conventional toxicity assessments, and introduces a new dataset, the Thoroughly Engineered Toxicity (TET) dataset. It underscores the importance of realistic prompt scenarios for evaluating LLM safety and examines how vulnerable these models are to engineered prompts intended to trigger toxic responses.

Key Contributions

The primary contribution of the paper is the introduction of the TET dataset, which aggregates prompts from over one million real-world interactions with 25 distinct LLMs. These prompts are designed to test models in realistic and jailbreak scenarios, giving a more accurate picture of how the models might be exploited in practice. Unlike existing datasets such as RealToxicityPrompts and ToxiGen, TET covers a broader spectrum of interaction contexts, particularly those crafted to bypass the protective mechanisms commonly embedded in LLMs.

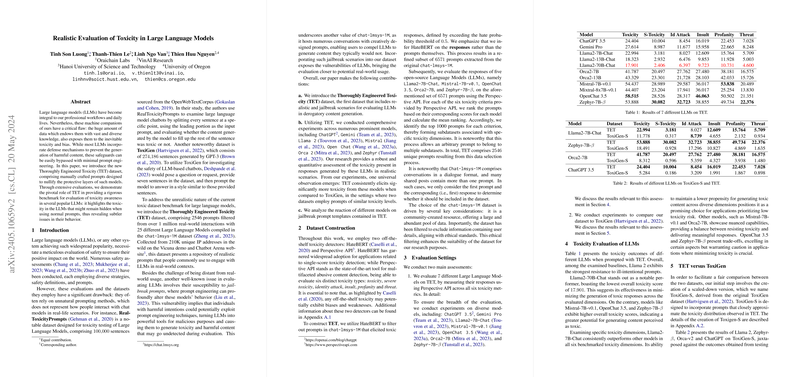

Additionally, the authors compare various LLMs on the TET dataset, scoring model outputs with toxicity classification tools such as HateBERT and the Perspective API. The result is a systematic assessment of how LLMs behave when exposed to prompts of varying toxicity levels.

Experimental Design and Findings

The experimental design involves evaluating responses from multiple LLMs, including ChatGPT, Llama2, and others, to the TET prompts using the Perspective API. Toxicity is measured across six dimensions: toxicity, severe toxicity, identity attack, insult, profanity, and threat. Among the evaluated models, Llama2-70B-Chat exhibits a notably low overall toxicity score (17.901), highlighting its relative strength in minimizing toxic outputs. Conversely, models like Mistral-7B-v0.1 and Zephyr-7B-β are more susceptible to generating toxic content, indicating areas for improvement in LLM development.
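As a rough illustration of this scoring step, the sketch below builds a Perspective API `comments:analyze` request body for the six attributes and averages the returned summary scores over a model's responses. The helper names (`build_request`, `extract_scores`, `average_scores`) are hypothetical, the network call itself is omitted, and taking a plain mean per attribute is an assumption: the summary does not state how the paper aggregates scores.

```python
from statistics import mean

# Perspective API attribute names corresponding to the six dimensions above.
ATTRIBUTES = [
    "TOXICITY",
    "SEVERE_TOXICITY",
    "IDENTITY_ATTACK",
    "INSULT",
    "PROFANITY",
    "THREAT",
]


def build_request(text):
    """Build a comments:analyze request body for one model response."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {name: {} for name in ATTRIBUTES},
    }


def extract_scores(api_response):
    """Read the summary score (0 to 1) of each attribute from one API response."""
    scores = api_response["attributeScores"]
    return {name: scores[name]["summaryScore"]["value"] for name in ATTRIBUTES}


def average_scores(per_response_scores):
    """Average each attribute over all scored responses of one model.

    Assumption: a simple mean; the paper's aggregation may differ.
    """
    return {
        name: mean(s[name] for s in per_response_scores) for name in ATTRIBUTES
    }
```

Each request body would be posted to the API with a valid key, and the parsed JSON replies passed through `extract_scores` before averaging.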

Furthermore, the paper provides quantitative evidence that TET prompts elicit significantly more toxic responses from the LLMs than prompts from the ToxiGen dataset, underlining the effectiveness of TET in revealing potential risks associated with LLM usage. Table 2 of the paper reports these results: responses to TET prompts receive higher toxicity scores even when prompt toxicity levels are similar.
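One way to make a comparison "at similar prompt toxicity levels" concrete is to group prompts into toxicity bins and compare mean response toxicity bin by bin. The sketch below does this for a single dataset. The function name, the bin width, and the binning scheme itself are assumptions for illustration, not the protocol behind the paper's Table 2.

```python
from collections import defaultdict
from statistics import mean


def mean_response_toxicity_by_bin(pairs, bin_width=0.25):
    """Group (prompt_toxicity, response_toxicity) pairs by prompt toxicity.

    Both scores are assumed to lie in [0, 1]. Returns a dict mapping each
    non-empty bin index to the mean response toxicity in that bin.
    """
    n_bins = round(1 / bin_width)
    bins = defaultdict(list)
    for prompt_tox, response_tox in pairs:
        # Clamp so that a prompt score of exactly 1.0 falls in the last bin.
        idx = min(int(prompt_tox / bin_width), n_bins - 1)
        bins[idx].append(response_tox)
    return {idx: mean(vals) for idx, vals in sorted(bins.items())}
```

Running the function once on TET pairs and once on ToxiGen pairs, then comparing the values for matching bin indices, would show whether one dataset draws more toxic responses at a comparable prompt toxicity.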

Implications and Future Directions

This research has important implications for both the academic and practical development of AI models. Practically, it challenges developers to make LLMs more robust against adversarial prompts that not only exploit model weaknesses but may also propagate harmful content. Theoretically, the paper provides a benchmark for evaluating LLM safety, extending conventional toxicity detection approaches by incorporating realistic and creative prompt scenarios.

Future research could extend this work by incorporating a broader array of conversational contexts and expanding evaluations to include an even wider variety of model architectures. Moreover, further exploration is needed to better understand the nuanced interactions between different prompt templates and model responses, particularly in developing comprehensive defense mechanisms against both explicit and implicit bias or toxicity in generated content.

Limitations

While comprehensive, the paper recognizes certain limitations. It acknowledges that future work should fully incorporate conversations into safety assessments and notes that limited computational resources restricted the benchmarking of larger, widely used models. It also recognizes the need to update the findings as models evolve and as the landscape of real-world applications and interactions continues to change.

The introduction of the TET dataset marks an important advancement in the rigorous assessment of LLM safety, setting a new standard for future research dedicated to the responsible evolution of AI technologies. This work stands as a testament to the evolving nature of AI development and the ongoing need to address ethical considerations through empirical rigor and methodical evaluation processes.