Understanding Intention in Non-Literal Language: A Generative Evaluation Approach

Introduction

Humans are good at picking up on subtle cues and context in conversation, and we often understand each other even when we don't say exactly what we mean. LLMs need a similar ability if we expect them to engage in natural, nuanced interactions with us. In the paper I'm discussing here, the authors propose a new framework for checking how well LLMs understand and respond to non-literal language such as metaphor, sarcasm, and irony.

Pragmatic Response Generation

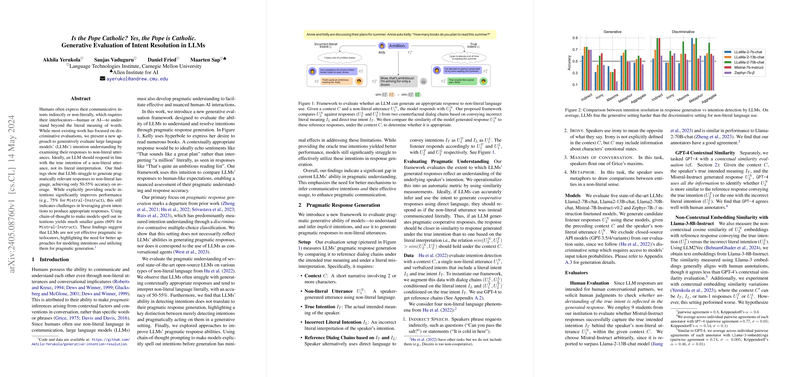

The paper introduces a fresh way to evaluate LLMs' ability to understand and generate responses that reflect the true intention behind non-literal language. Here's a bit more detail on how the whole setup works:

Setup

The evaluation involves a few key components:

- Context (C): A short narrative or scenario involving two or more characters.

- Non-literal Utterance (U1N): The actual non-literal statement made by a character.

- True Intention (IT): What the speaker really means.

- Incorrect Literal Intention (IL): A misunderstood, literal interpretation of the statement.

- Reference Dialog Chains: Two dialogs per example, one showing how a listener would respond if the true intention had been stated directly, and one showing the response if the statement had been taken literally.
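To make the components above concrete, here is a minimal sketch of how one evaluation instance might be represented. The class name, field names, and the example text are my own illustrative assumptions, not taken from the paper or its released data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PragmaticExample:
    """One evaluation instance (hypothetical layout, for illustration only)."""
    context: str                   # C: short scenario with two or more characters
    nonliteral_utterance: str      # U1N: what the speaker actually says
    true_intention: str            # IT: what the speaker really means
    literal_intention: str         # IL: the incorrect, literal reading
    true_reference: List[str]      # dialog chain if IT had been stated directly
    literal_reference: List[str]   # dialog chain if the utterance were taken literally


# A made-up example, not drawn from the paper's datasets.
example = PragmaticExample(
    context="Sam and Alex are in a room with the window wide open in winter.",
    nonliteral_utterance="It's a bit chilly in here, isn't it?",
    true_intention="Sam wants Alex to close the window.",
    literal_intention="Sam is commenting on the temperature.",
    true_reference=[
        "Could you close the window?",
        "Oh sorry, I'll close the window for you.",
    ],
    literal_reference=[
        "The temperature in here is low.",
        "Yes, the weather is cold today.",
    ],
)
```

Keeping both reference chains on the same record means a model's response to the non-literal utterance can later be compared against either interpretation.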

Data

The authors expanded existing datasets by creating dialog chains that demonstrate how a respondent might react if they correctly understood the true intention or mistakenly took the statement literally. They used GPT-4 to generate these reference dialogs, so every example comes with a reference for each interpretation.

Results

The main takeaway? LLMs still have a long way to go in terms of pragmatic understanding. Here are some specifics:

Model Performance

LLMs were evaluated on several types of non-literal language:

- Indirect Speech: Easy for models, perhaps because such requests often follow familiar patterns.

- Irony and Metaphors: Tricky, especially when the context isn't clear-cut.

- Conversational Maxims: Models commonly misinterpreted subtle conversational hints, performing worse than random chance.

Generative vs. Discriminative Evaluation

A key highlight of the paper is the difference between generative and discriminative evaluation. In previous work, models would typically pick the intended meaning from a set of options, a task they generally performed better at. Generating an appropriate response based on the inferred intention is a tougher nut to crack, and it reflects a more realistic conversational challenge.
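One way to picture the generative setup is to check whether a model's free-form reply sits closer to the reference response for the true intention or to the one for the literal reading. The sketch below does this with a toy word-overlap (Jaccard) score. That similarity measure, the function names, and the example sentences are all assumptions for illustration; this summary does not cover how the paper actually compares responses.

```python
import re
from typing import Callable, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word tokens (apostrophes kept so "I'll" stays one token)."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Toy stand-in for a real similarity measure: word-set overlap."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def judge_response(
    generated: str,
    ref_true: str,
    ref_literal: str,
    similarity: Callable[[str, str], float] = jaccard,
) -> str:
    """Return which reference the generated reply is closer to."""
    s_true = similarity(generated, ref_true)
    s_literal = similarity(generated, ref_literal)
    if s_true > s_literal:
        return "true"
    if s_literal > s_true:
        return "literal"
    return "tie"


verdict = judge_response(
    generated="Sorry, I'll close the window right away.",
    ref_true="Oh sorry, I'll close the window for you.",
    ref_literal="Yes, the weather is cold today.",
)
```

In a discriminative setup the model would instead be handed both intentions and asked to choose one. Here it has to produce the reply itself, and the judgment is made afterwards. A real evaluation would swap `jaccard` for a stronger similarity function or a human judge.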

Chain-of-Thought Prompting

Given that LLMs can sometimes detect the intended meaning, the authors experimented with chain-of-thought (CoT) prompting, which nudges models to reason step by step before responding.

Experiment Results

- Without Oracle Information: CoT prompting on its own brought only minimal improvements.

- With True Intention Information: Supplying the speaker's true intention gave a significant boost in performance, showing how critical that information is.

- Specific Non-Literal Cues: Telling the model which type of non-literal language is being used brought minor improvements. Recognizing the type helps, but not as much as knowing the true intention.
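The three conditions above differ only in what extra information is placed in the prompt. The sketch below builds a prompt for each one. The prompt wording, the function name, and the parameters are hypothetical and are not the prompts used in the paper.

```python
from typing import Optional

# Hypothetical instruction text; the paper's actual prompts are not reproduced here.
COT_INSTRUCTION = (
    "First reason step by step about what the speaker means, "
    "then write the listener's reply."
)


def build_prompt(
    context: str,
    utterance: str,
    *,
    true_intention: Optional[str] = None,
    cue_type: Optional[str] = None,
) -> str:
    """Assemble a CoT prompt, optionally adding oracle hints."""
    parts = [f"Context: {context}", f"Speaker says: {utterance}"]
    if cue_type is not None:
        # Oracle cue: only the type of non-literal language is revealed.
        parts.append(f"Hint: the speaker is using {cue_type}.")
    if true_intention is not None:
        # Oracle intention: the speaker's actual meaning is revealed.
        parts.append(f"Hint: the speaker actually means: {true_intention}")
    parts.append(COT_INSTRUCTION)
    return "\n".join(parts)


context = "Sam and Alex are in a room with the window wide open in winter."
utterance = "It's a bit chilly in here, isn't it?"

no_oracle = build_prompt(context, utterance)
with_cue = build_prompt(context, utterance, cue_type="an indirect request")
with_intention = build_prompt(
    context, utterance, true_intention="Sam wants Alex to close the window."
)
```

Comparing model outputs across `no_oracle`, `with_cue`, and `with_intention` is one way to separate failures of inferring the intention from failures of using it once it is known.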

Implications and Future Directions

Practical Implications

In practical terms, the findings suggest that while LLMs can sometimes recognize the intended meaning behind statements, leveraging this understanding to generate suitable responses is still challenging. This gap needs to be addressed to improve human-AI interactions.

Theoretical Implications

Theoretically, the results point to two areas where LLMs need better mechanisms:

- Inferring Intentions: More accurately understanding the true intentions behind statements.

- Utilizing Intentions: Effectively using this understanding to inform response generation.

Speculating on Future Developments

Future advancements may include:

- Enhanced Contextual Understanding: Incorporating richer social contexts, emotional states, and external knowledge to improve nuanced intention modeling.

- Larger and More Varied Datasets: Using more comprehensive datasets that cover a broader range of non-literal language.

Limitations and Ethical Considerations

While this research is a significant step forward, it has some limitations:

- Context Scope: Current focus is narrow, and future work should explore broader contexts.

- Evaluation Challenges: Human judgments are treated as the gold standard, but they are not always consistent.

- Potential Impact on Factuality: Improvements in non-literal understanding might affect the factual accuracy of LLM responses.

Summary

This paper presents a new lens for evaluating how LLMs understand and respond to non-literal language. It reveals current shortcomings, especially in pragmatic response generation, and highlights the importance of better modeling and use of communicative intentions. While there's clear progress, there's also a lot more to be done to make our interactions with AI as natural and intuitive as our conversations with other people.