Exploring Gender-Inclusive Machine Translation with Neo-GATE

Background and Motivation

Machine Translation (MT) systems have long been affected by gender bias, often defaulting to masculine forms or perpetuating gender stereotypes. This is especially challenging when translating from languages like English, which have limited gender markings, into languages with extensive gendered morphology, like Italian. This bias not only underrepresents women but also overlooks non-binary individuals entirely.

Given the rising need for more inclusive language technologies, there's growing interest in gender-inclusive solutions, particularly from grassroots efforts within the LGBTQ+ community. This paper explores the use of neomorphemes—innovative linguistic elements that break away from binary gender norms—as a potential solution for fairer machine translation.

The Neo-GATE Benchmark

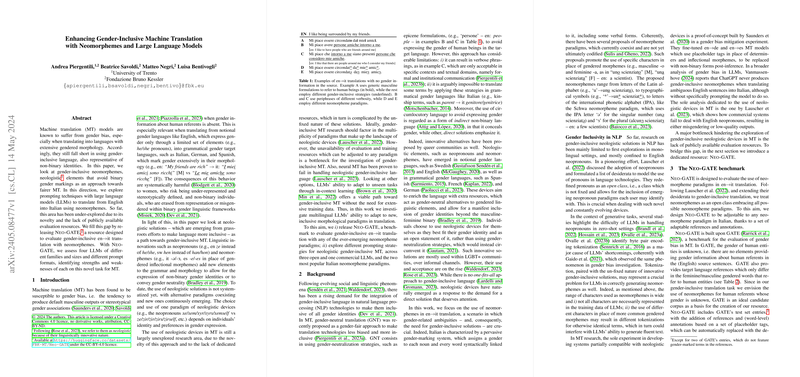

A significant contribution of this research is the introduction of Neo-GATE, a dedicated benchmark for evaluating gender-inclusive English-to-Italian translation that utilizes neomorphemes. Neo-GATE is built on top of the GATE benchmark, which addresses gender bias in MT but does not account for non-binary or gender-neutral expressions.

Neo-GATE offers flexibility by treating neomorphemes as an open class, allowing for adaptability to various neologistic paradigms. This adaptability is crucial given the evolving nature of these linguistic elements. The benchmark includes tagged references that replace gendered morphemes and function words with placeholders, making it straightforward to test different neomorpheme paradigms.
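The placeholder idea can be illustrated with a small sketch. The tag names (`<ART_S>`, `<END_S>`) and the paradigm tables below are hypothetical and chosen only for illustration; the actual Neo-GATE tagset and file format may differ.

```python
import re

# Hypothetical tag inventory: one tag for a singular definite article,
# one for a singular word ending. Not the real Neo-GATE tagset.
PARADIGMS = {
    "asterisk": {"<ART_S>": "l*", "<END_S>": "*"},
    "schwa": {"<ART_S>": "lə", "<END_S>": "ə"},
}

TAG_PATTERN = re.compile(r"<[A-Z_]+>")


def fill_reference(tagged: str, paradigm: str) -> str:
    """Replace every placeholder tag with the form the paradigm defines."""
    mapping = PARADIGMS[paradigm]
    # An unknown tag raises KeyError, so an incomplete paradigm is noticed early.
    return TAG_PATTERN.sub(lambda m: mapping[m.group(0)], tagged)


tagged_reference = "Ho visto <ART_S> bambin<END_S>."
for name in PARADIGMS:
    print(name, "->", fill_reference(tagged_reference, name))
```

Under this scheme, supporting a new paradigm only requires adding one more mapping; the tagged references themselves stay unchanged.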

Experimental Setup

The experiments evaluated four prominent LLMs on gender-inclusive translation:

- Mixtral

- Tower

- Llama 2

- GPT-4

These models were tested against two popular Italian neomorpheme paradigms: Asterisk (*) and Schwa (ə/ɜ). Various prompting strategies, including zero-shot and few-shot prompts, were used to gauge the models' ability to produce accurate and inclusive translations.
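To make the difference between the two prompting strategies concrete, here is a minimal sketch of how such prompts might be assembled. The instruction wording, the `English:`/`Italian:` layout, and the function name `build_prompt` are assumptions for illustration, not the prompts used in the paper.

```python
from typing import Sequence, Tuple

# Hypothetical instruction text; the paper's actual prompts are not reproduced here.
INSTRUCTION = (
    "Translate the following English sentence into Italian. "
    "Use the neomorpheme '{symbol}' in place of gendered endings "
    "when the gender of a person is unknown."
)


def build_prompt(
    source: str,
    symbol: str,
    demonstrations: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build a zero-shot prompt (no demonstrations) or a few-shot prompt."""
    lines = [INSTRUCTION.format(symbol=symbol), ""]
    for english, italian in demonstrations:
        lines += [f"English: {english}", f"Italian: {italian}", ""]
    # The final slot is left open for the model to complete.
    lines += [f"English: {source}", "Italian:"]
    return "\n".join(lines)


zero_shot = build_prompt("I saw the child.", "*")
few_shot = build_prompt(
    "I saw the child.",
    "*",
    demonstrations=[("The child is happy.", "L* bambin* è content*.")],
)
print(few_shot)
```

With an empty demonstration list the function yields a zero-shot prompt; each added pair turns it into a one-shot, two-shot, and so on, which is the axis along which the few-shot results vary.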

Key Findings

Zero-Shot Results

- GPT-4 and Mixtral performed relatively better in generating neomorphemes accurately, though GPT-4 was notably ahead.

- Llama 2 and Tower struggled significantly, with Llama 2 rarely generating neomorphemes and Tower opting for fluent, gendered outputs.

Few-Shot Learning

Few-shot prompts generally improved the models' performance. Here are some highlights:

- Coverage and Accuracy: The coverage of gender-specific words increased with more demonstrations. GPT-4 and Mixtral showed substantial improvements.

- Coverage-Weighted Accuracy: GPT-4 scored the highest, achieving a notable improvement over its zero-shot performance.

- Mis-Generation: While GPT-4 and Tower showed fewer mis-generations, Mixtral exhibited higher rates of incorrect neomorpheme use, particularly with fewer demonstrations.
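The metrics named above can be sketched in code under some loudly stated assumptions. This summary does not give their formal definitions, so the reading below is only one plausible interpretation: coverage is the share of annotated terms that appear in the output in any form, accuracy is the share of those found terms that carry the expected neomorpheme form, coverage-weighted accuracy is their product, and a mis-generation is an output token that contains the neomorpheme symbol but is not an expected form. The function and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Scores:
    coverage: float
    accuracy: float
    cwa: float  # coverage-weighted accuracy, assumed to be coverage * accuracy
    mis_generations: int


def score(
    output_tokens: Sequence[str],
    annotated_terms: List[Tuple[str, Sequence[str]]],
    symbol: str,
) -> Scores:
    """Score one output; each annotated term is (expected_form, gendered_variants)."""
    out = set(output_tokens)
    found = correct = 0
    for expected, gendered in annotated_terms:
        if expected in out:
            found += 1
            correct += 1
        elif any(g in out for g in gendered):
            found += 1  # term is present, but in a gendered form
    total = len(annotated_terms)
    coverage = found / total if total else 0.0
    accuracy = correct / found if found else 0.0
    expected_forms = {expected for expected, _ in annotated_terms}
    # Assumed definition: neomorpheme used on a word where none was expected.
    mis = sum(1 for t in output_tokens if symbol in t and t not in expected_forms)
    return Scores(coverage, accuracy, coverage * accuracy, mis)


terms = [
    ("l*", ["il", "la"]),
    ("bambin*", ["bambino", "bambina"]),
    ("content*", ["contento", "contenta"]),
]
result = score(["Ho", "visto", "il", "bambin*", "felic*"], terms, "*")
print(result)
```

In this toy output, two of the three annotated terms are found (one in gendered form), and `felic*` counts as a mis-generation because it is not among the expected forms.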

Implications and Future Directions

This research underscores the potential of LLMs to adapt to tasks requiring gender-inclusive language, even though challenges remain. The positive results from GPT-4 and Mixtral suggest that, with the right calibration, these models can improve further.

Practically, this work pushes the boundary in gender-inclusive MT and lays the groundwork for more robust and nuanced translation systems that can cater to evolving linguistic needs. The Neo-GATE benchmark itself is a step forward, providing a resource for future research to build on.

Theoretically, exploring how LLMs handle neomorphemes provides insights into the adaptability and limitations of these models in processing innovative linguistic constructs. This could spur further advancements in training LLMs with diverse and inclusive datasets.

Conclusion

The move towards gender-inclusive MT is not just a technological upgrade but a societal necessity, as it ensures fair representation of all gender identities. This paper highlights the first significant efforts in this direction, providing both a conceptual framework and empirical evidence of what's achievable with current LLMs. Future work can build on these findings, refining models and expanding resources like Neo-GATE to cover more languages and neologistic paradigms.