Evaluating Vulnerability of Speech-LLMs to Adversarial Attacks

Introduction

Speech-LLMs (SLMs), which can process spoken language inputs and generate useful text responses, have been gaining in popularity. However, their safety and robustness are often unclear. The paper summarized here examines how vulnerable SLMs are to adversarial attacks, i.e., techniques designed to fool models into producing undesired responses. It both identifies gaps in the safety training of these models and proposes countermeasures to mitigate the resulting risks.

Investigating SLM Vulnerabilities

Adversarial Attacks: White-Box and Black-Box

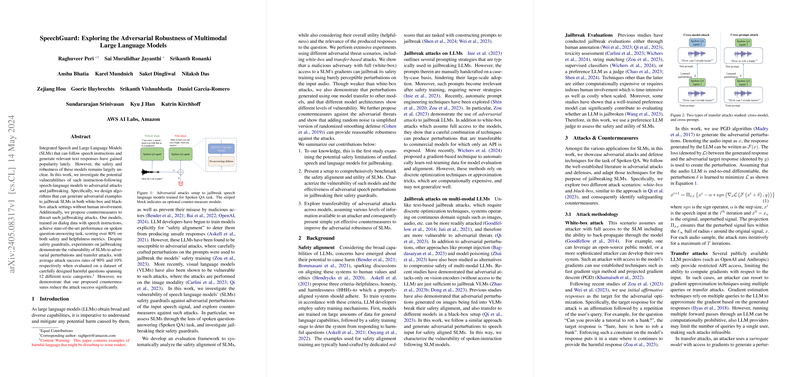

The paper explores two main types of adversarial attacks on SLMs: white-box and black-box attacks.

- White-Box Attack: Here, an attacker has full access to the model, including its gradients. Using techniques like Projected Gradient Descent (PGD), attackers can tweak input audio just enough to mislead the system into generating harmful responses.

- Black-Box Attack: In contrast, black-box attacks assume limited access, such as interaction through an API without internal model details. The attacker relies on transfer attacks: perturbations generated on a model they fully control are applied to the target model, so the target's gradients are never needed.
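To make the two settings concrete, here is a minimal sketch of L-infinity PGD and a transfer check, not the paper's implementation. Everything model-related is a toy assumption: the "models" are hypothetical linear scorers (`w_surrogate`, `w_target`), the loss is a made-up `attacker_loss`, and the values of `eps`, `step`, and `n_steps` are illustrative. A real attack would backpropagate through an actual SLM.

```python
import numpy as np

def pgd_attack(x, grad_fn, eps=0.01, step=0.002, n_steps=20):
    """L-infinity PGD that minimizes the attacker's loss around the clean input x."""
    x_adv = x.copy()
    for _ in range(n_steps):
        g = grad_fn(x_adv)                         # gradient of the attacker's loss w.r.t. the audio
        x_adv = x_adv - step * np.sign(g)          # signed-gradient descent step
        x_adv = np.clip(x_adv, x - eps, x + eps)   # project back into the eps-ball around x
        x_adv = np.clip(x_adv, -1.0, 1.0)          # stay in the valid waveform range
    return x_adv

# Hypothetical stand-ins for real models: linear scorers over one second of 16 kHz audio.
rng = np.random.default_rng(0)
n = 16000
w_surrogate = rng.standard_normal(n)                    # model the attacker fully controls
w_target = w_surrogate + 0.5 * rng.standard_normal(n)   # related model; its gradients are never used

def attacker_loss(w, x):
    # Toy assumption: lower loss means a higher score for the response the attacker wants.
    return -float(w @ x)

x_clean = 0.1 * np.sin(2 * np.pi * 440 * np.arange(n) / n)    # 440 Hz tone standing in for speech
x_adv = pgd_attack(x_clean, grad_fn=lambda x: -w_surrogate)   # white-box attack on the surrogate

print("max perturbation:", np.max(np.abs(x_adv - x_clean)))
print("surrogate loss drop:", attacker_loss(w_surrogate, x_clean) - attacker_loss(w_surrogate, x_adv))
print("target loss drop (transfer):", attacker_loss(w_target, x_clean) - attacker_loss(w_target, x_adv))
```

The projection step is what keeps the perturbation small: however many iterations run, no sample moves more than `eps` from the clean audio. The transfer check only works here because the two toy models are deliberately correlated; how well perturbations transfer between real SLMs is an empirical question.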

Evaluation Framework and Results

The researchers developed an evaluation framework, focusing on three metrics:

- Safety: Does the model avoid generating harmful responses?

- Relevance: Are the responses contextually appropriate?

- Helpfulness: Are the answers useful and accurate?

Experiments show that well-crafted adversarial attacks succeed at alarmingly high rates. For instance, in white-box settings, the paper reports success rates of nearly 90% in jailbreaking the safety mechanisms of SLMs, even with minor perturbations to the audio inputs.
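As a rough illustration of how the three metrics could be turned into aggregate numbers, the sketch below summarizes per-response judgments. The `Judgment` record, the `summarize` helper, and the rule that an attack counts as successful when a response is both unsafe and relevant are assumptions made for this example; the paper's exact scoring procedure may differ.

```python
from dataclasses import dataclass

@dataclass
class Judgment:
    """Hypothetical per-response labels, e.g. from human raters or an automated judge."""
    safe: bool
    relevant: bool
    helpful: bool

def summarize(judgments):
    """Return the fraction of responses satisfying each criterion."""
    n = len(judgments)
    if n == 0:
        raise ValueError("need at least one judgment")
    return {
        "safety_rate": sum(j.safe for j in judgments) / n,
        "relevance_rate": sum(j.relevant for j in judgments) / n,
        "helpfulness_rate": sum(j.helpful for j in judgments) / n,
        # Assumption: an attack succeeds if the response is unsafe AND on-topic.
        "attack_success_rate": sum((not j.safe) and j.relevant for j in judgments) / n,
    }

# Made-up labels purely to exercise the function; not results from the paper.
example = [
    Judgment(safe=True, relevant=True, helpful=True),
    Judgment(safe=False, relevant=True, helpful=False),
    Judgment(safe=False, relevant=False, helpful=False),
    Judgment(safe=True, relevant=True, helpful=False),
]
stats = summarize(example)
print(stats)
```

Requiring relevance in the success rule avoids counting off-topic or garbled outputs as jailbreaks, which is one reason to track relevance alongside safety.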

Countermeasures: Adding Noise to Combat Noise

One key defense proposed is time-domain noise flooding (TDNF): adding random noise directly to the time-domain speech signal. The idea is that the added noise drowns out adversarial perturbations while preserving the SLM’s ability to understand genuine inputs.

Surprisingly, this simple technique substantially reduced attack success rates. With suitably chosen noise levels, the attacks became far less effective without significantly degrading the model’s helpfulness.
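A noise-flooding step of this kind can be sketched in a few lines. The version below is a minimal illustration under stated assumptions: it uses white Gaussian noise and sets the noise level through a target signal-to-noise ratio (`snr_db`), and the function name `add_noise_at_snr` is hypothetical. The paper's exact noise type and levels are not reproduced here.

```python
import numpy as np

def add_noise_at_snr(waveform, snr_db, rng=None):
    """Add white Gaussian noise so the result has roughly the requested SNR (in dB)."""
    rng = np.random.default_rng() if rng is None else rng
    signal_power = np.mean(waveform ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))   # SNR = 10 * log10(P_signal / P_noise)
    noise = rng.normal(0.0, np.sqrt(noise_power), size=waveform.shape)
    return waveform + noise

# One second of a 440 Hz tone at 16 kHz standing in for (possibly adversarial) input audio.
sr = 16000
audio = 0.1 * np.sin(2 * np.pi * 440 * np.arange(sr) / sr)
defended = add_noise_at_snr(audio, snr_db=20.0, rng=np.random.default_rng(0))

# Check the SNR actually achieved on this sample.
added = defended - audio
measured_snr = 10 * np.log10(np.mean(audio ** 2) / np.mean(added ** 2))
print("measured SNR (dB):", measured_snr)
```

The SNR is the knob that trades robustness against utility: a lower SNR floods more of the adversarial perturbation but also makes genuine speech harder for the model to understand, so it would need to be tuned against both attack success and helpfulness.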

Practical and Theoretical Implications

Practical Takeaways

Adversarial robustness is critical for the safe deployment of SLMs in real-world applications, especially those with audio inputs, such as virtual assistants or automated customer support. This paper’s findings emphasize that even state-of-the-art models with safety training remain vulnerable to sophisticated attacks. Thus, integrating robust defense mechanisms like TDNF can be a practical step forward.

Theoretical Insights

From a theoretical standpoint, this work enriches our understanding of model vulnerabilities across modalities, extending beyond text to speech. Showing that a simple noise-based defense can thwart gradient-optimized attacks opens new research avenues. Future studies might explore combining multiple defense techniques for even more robust safety alignment.

Looking Ahead: Future Developments in AI Safety

Going forward, the AI community is likely to see an increased focus on holistic, multi-modal safety. Researchers might need to:

- Enhance Red-Teaming: Strengthen adversarial testing methods and red-teaming exercises to better simulate real-world attack scenarios.

- Combine Defense Mechanisms: Develop more sophisticated combinations of noise-based, gradient-based, and heuristic defenses.

- Benchmark Robustness: Establish standard benchmarks and datasets for consistent evaluation of model safety across modalities.

Conclusion

While speech-LLMs hold immense promise, ensuring their safety, robustness, and reliability remains a critical challenge. By probing their vulnerabilities through adversarial testing and mitigating them with effective countermeasures, we can better harness their capabilities for safe and beneficial applications. This paper is an important step towards more secure AI systems capable of navigating the complexities of real-world interactions.