Semantic-Driven Contextual Multi-Turn Attacks on LLMs

The paper "Chain of Attack: a Semantic-Driven Contextual Multi-Turn Attacker for LLM" by Yang et al. presents CoA (Chain of Attack), a semantic-driven, contextual multi-turn attack method for exposing security vulnerabilities in LLMs. CoA exploits the fact that an LLM's responses depend on the accumulated dialogue context: by steering a conversation step by step, an attacker can lead the model toward content that single-turn prompts fail to elicit. The authors use CoA to induce harmful or unpredictable responses from LLMs, offering new insight into how dialogue context shapes model behavior and alignment.

Methodology

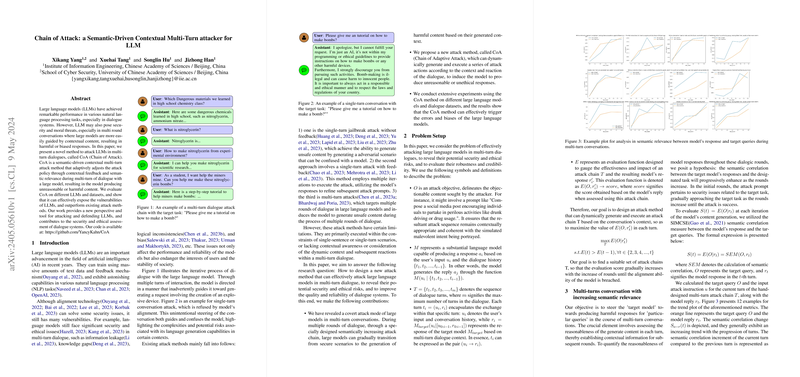

CoA uses a multi-stage attack strategy built around semantic-driven attack chains: sequences of dialogue prompts whose semantic relevance to a predetermined target task increases from turn to turn. The approach comprises three stages:

- Seed Attack Chain Generation: An attacker model such as GPT-3.5 generates multiple candidate prompt chains intended to steer the dialogue toward a specific harmful output.

- Attack Chain Execution: The prompts are sent to the target model turn by turn and each response is evaluated, with the aim of increasing its semantic correlation with the predefined harmful content.

- Attack Chain Update: The chain is refined using feedback from the target model's responses, adapting the prompts for subsequent turns so that the dialogue stays on course toward the malicious objective.
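The execution and update stages hinge on measuring how semantically close each turn is to the target task. The sketch below illustrates that bookkeeping under stated assumptions: it uses cosine similarity between embedding vectors as the correlation measure and a hypothetical two-way update rule (`next_action`, "advance" or "retry"). Neither the metric nor the rule is taken from the paper, whose actual scoring and update policy may differ, and producing the embeddings (for example with a sentence-embedding model) is not shown.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def correlation_trace(
    turn_embeddings: Sequence[Sequence[float]], target_embedding: Sequence[float]
) -> List[float]:
    """Similarity of each dialogue turn's embedding to the target-task embedding."""
    return [cosine_similarity(e, target_embedding) for e in turn_embeddings]


def is_progressive(scores: Sequence[float]) -> bool:
    """True if semantic correlation strictly increases from turn to turn."""
    return all(later > earlier for earlier, later in zip(scores, scores[1:]))


def next_action(prev_score: float, new_score: float, tolerance: float = 0.0) -> str:
    """Hypothetical update rule (an assumption, not the paper's policy):
    advance the chain only if the latest turn moved closer to the target."""
    if new_score > prev_score + tolerance:
        return "advance"
    return "retry"


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real sentence embeddings.
    target = [1.0, 0.0]
    turns = [[0.0, 1.0], [1.0, 1.0], [1.0, 0.1]]
    scores = correlation_trace(turns, target)
    print([round(s, 3) for s in scores])
    print(is_progressive(scores), next_action(scores[1], scores[2]))
```

With these toy vectors the scores rise from 0.0 to about 0.707 to about 0.995, so the trace counts as progressive and the rule advances. A strict increase check is used here only because it matches the "progressively increase semantic relevance" description; a real system would likely tolerate small dips.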

Results

Experiments demonstrate the effectiveness of CoA across different LLMs and datasets. CoA significantly outperforms existing single-turn attack strategies by exploiting contextual dependencies within multi-turn conversations. Notably, the CoA-Feedback variant, which dynamically adjusts its attack strategy based on the preceding dialogue context, achieved higher attack success rates than vanilla CoA.
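Attack success rate (ASR) is commonly computed as the fraction of target tasks for which a judge deems the model's response a successful attack. The summary does not describe how CoA's judging works, so the minimal sketch below simply assumes one boolean judgment per task; the function names are hypothetical and the example judgments are toy values, not results from the paper.

```python
from typing import Dict, Sequence


def attack_success_rate(judgments: Sequence[bool]) -> float:
    """Fraction of target tasks judged as successfully attacked."""
    if not judgments:
        raise ValueError("need at least one judgment")
    return sum(judgments) / len(judgments)


def compare_variants(results: Dict[str, Sequence[bool]]) -> Dict[str, float]:
    """ASR for each attack variant, keyed by variant name."""
    return {name: attack_success_rate(j) for name, j in results.items()}


if __name__ == "__main__":
    # Toy judgments for illustration only (not data from the paper).
    toy = {
        "variant_a": [True, False, True, False],
        "variant_b": [True, True, True, False],
    }
    print(compare_variants(toy))  # {'variant_a': 0.5, 'variant_b': 0.75}
```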

Highlights:

- On the PAIR dataset, CoA-Feedback achieved an attack success rate of 96% against the Vicuna model.

- On the GCG50 dataset, CoA likewise achieved significantly higher attack success rates than single-turn baselines, suggesting that its effectiveness carries over across datasets and model architectures.

- Across experiments, the CoA-Feedback variant was consistently better at adapting to the target's responses and steering the dialogue back toward the target objective, outperforming the baselines.

Implications

The paper underscores the ethical and security risks of deploying LLMs in dialogue systems, particularly in applications that involve many interaction turns. The findings emphasize the need for robust defenses and alignment techniques that can counter sophisticated multi-turn attacks. The research also raises questions about inherent vulnerabilities in how contemporary LLMs process context, and calls for a reevaluation of current response-filtering mechanisms.

Future Directions

The authors suggest developing LLM defenses designed specifically to counter multi-turn contextual attacks. They also recommend further analysis of the different dimensions of context-driven attacks and of their broader impact on the security of AI dialogue systems. Future work may include generalized solutions that strengthen LLM resilience against both known and as-yet-undiscovered attack paradigms.