An Overview of Vidur: A Large-Scale Simulation Framework for LLM Inference

The paper "Vidur: A Large-Scale Simulation Framework for LLM Inference" presents a simulation framework designed to reduce the cost and complexity of optimizing large language model (LLM) deployments. The authors explain why such a framework is needed and describe the implementation choices that distinguish Vidur from existing simulation tools.

Context and Problem Statement

LLMs such as GPT-4 and LLaMA are foundational to modern natural language processing (NLP) tasks. Despite their capabilities, inference remains computationally expensive. Optimizing a deployment typically requires experimental runs across a vast configuration space that includes parallelization strategies, batching techniques, and scheduling policies. Running these experiments on real hardware is resource-intensive and often cost-prohibitive, which motivates a more efficient approach to performance modeling.

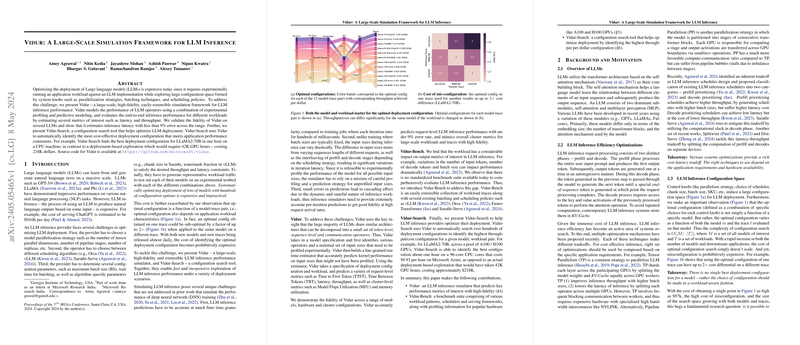

The Vidur Framework

Vidur is a simulation framework for high-fidelity, large-scale LLM inference performance modeling. It models the performance of LLM operators by combining experimental profiling with predictive modeling, then uses those operator-level estimates to derive end-to-end metrics such as latency and throughput. The paper reports that Vidur predicts inference latency with less than 9% error.

Vidur also includes Vidur-Search, a configuration search tool that looks for the most cost-effective deployment configuration that still meets an application's performance constraints. For instance, the paper reports that Vidur-Search finds the best deployment configuration for LLaMA2-70B in about one hour on a CPU machine, whereas a deployment-based exploration would require roughly 42K GPU hours at a cost of about 218K dollars.
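The search described above can be pictured as a constrained minimization: simulate each candidate configuration, discard those that violate the latency target, and keep the cheapest of the rest. The following is a minimal sketch under stated assumptions, not Vidur-Search's actual implementation. The `Config` and `Estimate` fields, the `search` function, and the `toy_simulate` cost model are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Config:
    tensor_parallel: int  # GPUs per replica (hypothetical knob)
    max_batch_size: int   # scheduler batch-size cap (hypothetical knob)


@dataclass(frozen=True)
class Estimate:
    p99_latency_s: float  # simulated tail latency in seconds
    qps: float            # simulated sustainable queries per second


def search(configs, simulate, gpu_cost_per_hour, latency_slo_s):
    """Return (config, cost per 1000 queries) for the cheapest config
    whose simulated tail latency meets the SLO, or None if none does."""
    best = None
    for cfg in configs:
        est = simulate(cfg)
        if est.p99_latency_s > latency_slo_s or est.qps <= 0:
            continue  # violates the performance constraint
        cost_per_hour = cfg.tensor_parallel * gpu_cost_per_hour
        cost_per_1k = cost_per_hour / (est.qps * 3600) * 1000
        if best is None or cost_per_1k < best[1]:
            best = (cfg, cost_per_1k)
    return best


def toy_simulate(cfg):
    # Made-up analytic stand-in for a simulator run; not real measurements.
    latency = 0.5 / cfg.tensor_parallel + 0.01 * cfg.max_batch_size
    qps = cfg.max_batch_size * cfg.tensor_parallel ** 0.5
    return Estimate(latency, qps)


grid = [Config(tp, bs) for tp, bs in product([1, 2, 4], [8, 32, 128])]
best = search(grid, toy_simulate, gpu_cost_per_hour=2.0, latency_slo_s=1.0)
```

In this toy setup the largest batch size always misses the one-second target, so the search settles on a mid-sized batch on a single GPU. The point of the sketch is only that each candidate costs one simulator call rather than one GPU deployment.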

Key Contributions and Components

1. Architectural Insights

Because most LLMs share the same basic architecture, Vidur profiles a small set of common operators rather than every model end to end. It divides operators into token-level, sequence-level, and communication operators, which reduces the profiling workload and makes it easier to predict runtimes for input sizes that were not profiled.
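One way to picture this decomposition is as a lookup from operator to the inputs that determine its runtime: total batch tokens for token-level operators, batch tokens plus per-request context lengths for sequence-level operators, and bytes transferred for communication operators. The sketch below is illustrative only. The operator names, the `OpClass` enum, and the `profiling_key` helper are assumptions made for this example, not identifiers from Vidur.

```python
from enum import Enum


class OpClass(Enum):
    TOKEN_LEVEL = "token"            # runtime depends on total tokens in the batch
    SEQUENCE_LEVEL = "sequence"      # runtime also depends on each request's context length
    COMMUNICATION = "communication"  # runtime depends on the amount of data transferred


# Hypothetical operator names, chosen for illustration.
OPERATOR_CLASSES = {
    "mlp_up_proj": OpClass.TOKEN_LEVEL,
    "layer_norm": OpClass.TOKEN_LEVEL,
    "attention_prefill": OpClass.SEQUENCE_LEVEL,
    "attention_decode": OpClass.SEQUENCE_LEVEL,
    "all_reduce": OpClass.COMMUNICATION,
}


def profiling_key(op, batch_tokens, context_lengths, bytes_moved):
    """Return the features a runtime predictor would need for this operator."""
    cls = OPERATOR_CLASSES[op]
    if cls is OpClass.TOKEN_LEVEL:
        return ("tokens", batch_tokens)
    if cls is OpClass.SEQUENCE_LEVEL:
        return ("tokens+context", batch_tokens, tuple(sorted(context_lengths)))
    return ("bytes", bytes_moved)
```

Token-level and communication operators each need only a one-dimensional profile, which is where most of the saving in profiling effort comes from in this picture.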

2. Profiling and Runtime Estimation

The profiler groups operations by the inputs that determine their runtime, for example the context length for sequence-level operations, and measures each group over a limited set of input sizes. To predict runtime for input sizes that were not profiled, Vidur trains random forest regression models, which balance the amount of profiling data needed against prediction accuracy.
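The idea of estimating runtime at unprofiled input sizes can be sketched with a much simpler predictor. The example below uses piecewise-linear interpolation as a stand-in for the random forest regression the paper describes, purely to keep the example dependency-free. The function name `estimate_runtime_ms` and the profile values are made up for illustration.

```python
from bisect import bisect_left


def estimate_runtime_ms(profile, num_tokens):
    """Estimate runtime from (num_tokens, runtime_ms) points sorted by num_tokens.

    Uses piecewise-linear interpolation and clamps to the nearest
    profiled point outside the profiled range. This is a stand-in
    predictor, not the random forest model described in the paper.
    """
    xs = [x for x, _ in profile]
    ys = [y for _, y in profile]
    if num_tokens <= xs[0]:
        return ys[0]
    if num_tokens >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, num_tokens)  # first profiled size >= num_tokens
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (num_tokens - x0) / (x1 - x0)


# Made-up profile of a token-level operator: (tokens in batch, runtime in ms).
profile = [(128, 1.0), (256, 1.5), (512, 3.0), (1024, 6.5)]
estimate = estimate_runtime_ms(profile, 384)  # 384 tokens was never profiled
```

A learned regressor plays the same role as the interpolation here: it maps the operator's input features to a runtime so that the simulator never has to run the operator on hardware again.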

3. Hierarchical Scheduling

Vidur's hierarchical scheduler supports multiple batching strategies and models memory management. It implements several scheduling policies, including those of vLLM, Orca+, and Sarathi-Serve, so that a wide range of deployment scenarios can be simulated.
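To make the notion of a batching strategy concrete, the sketch below forms a batch from a FIFO queue under two caps: a maximum number of requests and a maximum number of prompt tokens. It is a generic token-budget policy written for illustration and does not reproduce the exact behavior of vLLM, Orca+, or Sarathi-Serve. The `Request` class and `form_batch` function are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    request_id: int
    prompt_tokens: int


def form_batch(queue, max_batch_size, max_batch_tokens):
    """Pop requests in arrival order until either cap would be exceeded.

    Note: in this simple sketch, a request larger than max_batch_tokens
    stays at the head of the queue and is never scheduled.
    """
    batch = []
    used_tokens = 0
    while queue and len(batch) < max_batch_size:
        nxt = queue[0]
        if used_tokens + nxt.prompt_tokens > max_batch_tokens:
            break  # adding this request would exceed the token budget
        batch.append(queue.popleft())
        used_tokens += nxt.prompt_tokens
    return batch


queue = deque([Request(0, 300), Request(1, 500), Request(2, 400), Request(3, 100)])
first = form_batch(queue, max_batch_size=4, max_batch_tokens=1000)
second = form_batch(queue, max_batch_size=4, max_batch_tokens=1000)
```

Swapping in a different `form_batch` while keeping the rest of the simulation fixed is the kind of flexibility a pluggable scheduler provides.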

Evaluation and Fidelity

The evaluation demonstrates Vidur's high fidelity across multiple models and workloads. Static and dynamic workload simulations show that Vidur can predict end-to-end performance metrics with less than 5% median error, even when the system runs close to capacity. This accuracy matters in production environments, where a slightly misconfigured deployment can lead to significant unnecessary cost.
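For readers who want to run a similar check on their own simulator, one common way to summarize fidelity is the median of the per-sample absolute percentage errors between predicted and measured values. The sketch below shows that calculation. It is an assumption about how such a figure could be computed, not the paper's exact evaluation procedure, and the latency values are made up.

```python
from statistics import median


def percent_errors(predicted, measured):
    """Absolute percentage error of each prediction against its measurement."""
    if len(predicted) != len(measured):
        raise ValueError("predicted and measured must have the same length")
    return [abs(p - m) / m * 100.0 for p, m in zip(predicted, measured)]


def median_percent_error(predicted, measured):
    return median(percent_errors(predicted, measured))


# Made-up latencies in seconds, for illustration only.
measured = [1.0, 2.0, 4.0]
predicted = [1.1, 2.0, 3.8]
err = median_percent_error(predicted, measured)
```

The median is less sensitive than the mean to a few badly predicted samples, so it is worth reporting a tail percentile of the error alongside it.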

Practical Implications and Future Work

Vidur's ability to deliver accurate performance estimates quickly and at low cost has direct practical value for LLM deployment. By lowering the cost of exploring the configuration space, Vidur lets LLM inference providers find efficient configurations and adapt them as new models and workloads appear.

Looking ahead, Vidur's extensibility suggests potential enhancements, such as supporting more parallelism strategies and integrating energy consumption metrics. These developments could further refine its utility in diverse computational environments.

Conclusion

Vidur represents a substantial advancement in the simulation and optimization of LLM inference. Its precise modeling capabilities, coupled with the practical benefits of Vidur-Search, provide a powerful tool for navigating the complex landscape of LLM deployments. This work not only addresses current inefficiencies but also lays the groundwork for future improvements in LLM-inference optimization.