Decoding the Pathways to Creativity in Humans and LLMs

Introduction to Creative Processes

Recent discussions of LLM capabilities tend to focus on their outputs: what the models produce. Equally important, but less often examined, is the creative process: how those outputs are reached. The paper discussed here turns to this process in both humans and LLMs, using tasks that elicit divergent thinking.

Understanding Human and LLM Creativity

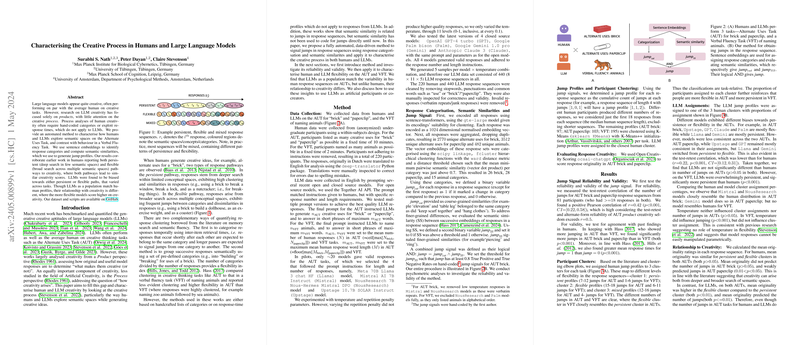

Humans and LLMs do not necessarily approach creativity in the same way. When humans work on a task that asks for creative uses of an object, such as a brick, their responses typically follow one of two pathways:

- Persistent Pathways: Focused exploration within a narrow semantic field. For example, "using a brick to break windows, to break locks, or as a nutcracker": all three involve breaking.

- Flexible Pathways: Broad exploration across different semantic categories. For example, "using a brick as a makeshift dumbbell, a bookend, or a doorstop" shows more diverse thinking.

Traditional methods for analyzing these patterns segment responses either by the time elapsed between ideas or by predefined categories. Both offer a simplified view of complex cognitive processes, and both fall short when applied to LLMs: a model does not operate under human constraints such as response time, so pauses between its ideas carry no comparable signal.
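To make the two traditional approaches concrete, here is a minimal Python sketch. It assumes each response already carries a hand-assigned category label and a timestamp in seconds; the function names, the labels, the timestamps, and the 5-second pause threshold are all hypothetical illustrations, not values from the paper.

```python
def count_category_switches(categories):
    """Count transitions between consecutive responses whose
    predefined (hand-assigned) category labels differ."""
    return sum(1 for prev, curr in zip(categories, categories[1:]) if prev != curr)


def split_by_pauses(timestamps, max_gap):
    """Group response indices into clusters, starting a new cluster
    whenever the pause since the previous response exceeds max_gap."""
    if not timestamps:
        return []
    clusters = [[0]]
    for i in range(1, len(timestamps)):
        if timestamps[i] - timestamps[i - 1] > max_gap:
            clusters.append([i])  # long pause: start a new cluster
        else:
            clusters[-1].append(i)  # short pause: stay in the current cluster
    return clusters


# Hypothetical brick responses: three "breaking" uses, then two unrelated ones.
categories = ["breaking", "breaking", "breaking", "exercise", "household"]
timestamps = [2.0, 4.5, 6.0, 15.0, 17.0]  # made-up seconds since task start

print(count_category_switches(categories))        # 2
print(split_by_pauses(timestamps, max_gap=5.0))   # [[0, 1, 2], [3, 4]]
```

The pause-based function is the part that breaks down for LLMs: model output arrives without human-like response times, so there is no meaningful gap to threshold.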

A New Method for Analysis

The paper introduces a data-driven method for characterizing creative engagement in both humans and AI. Its main components are:

- Using sentence embeddings to represent the meaning of each response, identify semantic fields, and categorize ideas.

- Computing semantic similarity between consecutive responses to identify 'jumps': transitions from one category to another.

- Analyzing the resulting 'jump profiles' to characterize the creative process as persistent or flexible.
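The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: tiny hand-made vectors stand in for real sentence embeddings (which a trained encoder would produce, typically with hundreds of dimensions), a jump is flagged whenever the cosine similarity between consecutive responses falls below an arbitrary threshold of 0.5, and a profile is summarized by its jump rate. The paper's actual jump criterion (which, per the list above, also involves categorizing ideas) and its thresholds may differ; all function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def detect_jumps(embeddings, threshold=0.5):
    """Jump profile: one boolean per consecutive pair of responses,
    True when their similarity drops below the (assumed) threshold."""
    return [
        cosine_similarity(prev, curr) < threshold
        for prev, curr in zip(embeddings, embeddings[1:])
    ]


def classify_pathway(jumps, cutoff=0.5):
    """Label a profile by its jump rate (hypothetical rule):
    rarely jumping -> persistent, frequently jumping -> flexible."""
    rate = sum(jumps) / len(jumps) if jumps else 0.0
    return "flexible" if rate >= cutoff else "persistent"


# Toy 4-d "embeddings"; the axes loosely stand for breaking, exercise,
# shelving, and holding doors. Real embeddings come from a trained model.
persistent_responses = [
    [0.9, 0.1, 0.0, 0.0],  # break windows
    [0.8, 0.0, 0.1, 0.1],  # break locks
    [0.9, 0.0, 0.0, 0.2],  # nutcracker
]
flexible_responses = [
    [0.0, 0.9, 0.1, 0.0],  # makeshift dumbbell
    [0.1, 0.0, 0.9, 0.1],  # bookend
    [0.0, 0.1, 0.1, 0.9],  # doorstop
]

for name, responses in [("persistent", persistent_responses),
                        ("flexible", flexible_responses)]:
    jumps = detect_jumps(responses)
    print(name, jumps, classify_pathway(jumps))
# persistent [False, False] persistent
# flexible [True, True] flexible
```

Thresholding consecutive similarity is about the simplest possible jump rule. Its key property is that it needs no timestamps, which is what makes this family of methods applicable to LLM output.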

Because the method does not rely on temporal metrics, it can be applied to non-human participants such as LLMs, whose responses come without meaningful response times.

Key Results from the Study

Results from both tasks, the Alternate Uses Task and the Verbal Fluency Task, reveal several patterns:

- Consistency Between Models and Humans: Like humans, LLMs varied in strategy, ranging from persistent to flexible. For LLMs, however, the strategy they used significantly affected their creativity scores.

- Creativity and Flexibility: More flexible LLMs tended to score higher on creativity metrics, whereas in humans no clear correlation was observed between flexibility and creativity ratings.

- Task Influence on Performance: The choice of task had a moderate influence on model behavior; some models were highly flexible on one task but not on the other.

Practical Implications and Forward Look

Understanding these mechanisms opens up intriguing possibilities:

- AI as Co-Creators: Knowing whether a model tends toward persistence or flexibility could help pair it with human collaborators whose style it complements, potentially leading to richer and more diverse ideation in collaborative settings.

- Refining AI Training: Insights from such studies could guide the development of more nuanced and balanced AI systems that aren't overly skewed towards any single creative strategy.

Tools and Resources

Beyond its findings, the paper provides practical tools for the community: the datasets and scripts used in the study are openly available for further experimentation and development.

Concluding Thoughts

This paper is an important step toward understanding the mechanisms of creativity in algorithms. By showing where these models mirror human cognitive processes and where they diverge, it paves the way for more meaningful interactions with AI systems and helps ensure that they serve as genuine support for, or enhancement of, human capabilities. It opens a promising line of research into the nuances of the creative mind, both biological and artificial.