Exploring Refinement: Advancing Small LLMs through Self-refine Instruction-tuning

An Overview of the Problem

LLMs come in many sizes, from small, nimble variants to colossal, data-hungry leviathans. Large models such as GPT-3.5 have shown an impressive ability to handle complex reasoning tasks by breaking them down into manageable, sequential steps, a tactic known as Chain-of-Thought (CoT) prompting. However, their size and computational cost make them hard to adopt widely.

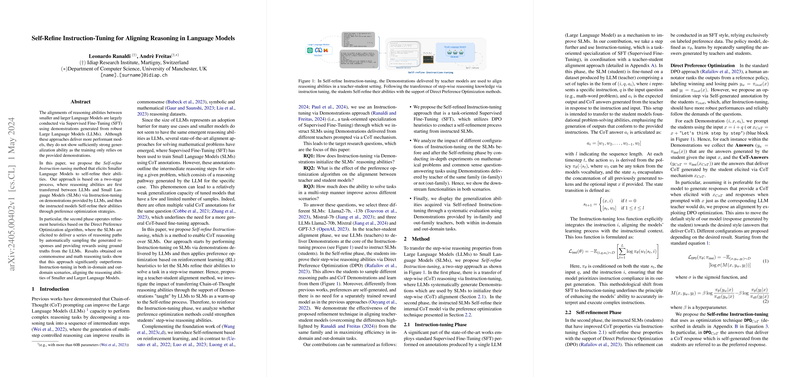

In contrast, Small LLMs (SLMs) are easier to run but have traditionally lagged on complex reasoning tasks unless given explicit step-by-step guidance. The paper I discuss here introduces an approach called Self-refine Instruction-tuning. It aims to improve the reasoning capability of SLMs by having them first learn from the 'thought process' exhibited by LLMs, and then go through a self-refinement stage that builds on what they have learned.

The Methodology Insight

The paper presents a two-phase method for boosting the reasoning ability of smaller LLMs: instruction-tuning followed by self-refinement.

Phase 1: Instruction-tuning

The initial phase sets the stage. The SLMs are instruction-tuned on demonstrations generated by LLMs. These demonstrations show how to solve specific problems step by step, which brings the reasoning paths of the student models (SLMs) closer to those of their teacher models (LLMs).
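To make Phase 1 concrete, here is a minimal sketch of how a teacher demonstration might be turned into an instruction-tuning example. The `Demonstration` class, the `to_training_example` helper, the instruction wording, and the prompt layout are all hypothetical names and choices made for illustration; the paper's actual templates may differ.

```python
from dataclasses import dataclass


@dataclass
class Demonstration:
    """One teacher-generated example (hypothetical structure)."""
    question: str
    rationale: str  # step-by-step reasoning written by the teacher LLM
    answer: str     # final answer given by the teacher LLM


def to_training_example(demo, instruction="Answer the question. Think step by step."):
    """Format a demonstration as a prompt/completion pair for supervised tuning.

    The template is an assumption made for this sketch.
    """
    prompt = f"{instruction}\n\nQuestion: {demo.question}\nAnswer:"
    completion = f" {demo.rationale} Therefore, the answer is {demo.answer}."
    return {"prompt": prompt, "completion": completion}


demo = Demonstration(
    question="Tom has 3 apples and buys 5 more. How many apples does he have?",
    rationale="Tom starts with 3 apples. He buys 5 more, so 3 + 5 = 8.",
    answer="8",
)
example = to_training_example(demo)
print(example["prompt"])
print(example["completion"])
```

The student model would then be fine-tuned to produce the completion given the prompt, so that it imitates the teacher's reasoning steps and not only its final answer.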

Phase 2: Self-refinement via Direct Preference Optimization

Once equipped with foundational reasoning skills from LLMs, SLMs enter a self-refinement phase. This stage uses Direct Preference Optimization (DPO), a preference-learning method that grew out of reinforcement learning from human feedback but trains directly on pairs of preferred and dispreferred responses, without a separate reward model. The model's own generated responses are compared against set criteria or 'preferences,' and training on those comparisons encourages iterative self-improvement without constant supervision.
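The sketch below illustrates the two ingredients of this phase under simplifying assumptions: pairing the model's own responses into preferred and rejected examples, and computing the standard DPO loss for one pair. The helper names, the `is_preferred` criterion, and the toy log-probabilities are hypothetical; a real setup would compute sequence log-probabilities with the tuned model and a frozen reference copy.

```python
import math
from itertools import product


def build_preference_pairs(prompt, responses, is_preferred):
    """Pair every preferred self-generated response with every rejected one.

    `is_preferred` stands in for whatever criterion defines the preference;
    it is an assumption of this sketch.
    """
    chosen = [r for r in responses if is_preferred(r)]
    rejected = [r for r in responses if not is_preferred(r)]
    return [
        {"prompt": prompt, "chosen": c, "rejected": r}
        for c, r in product(chosen, rejected)
    ]


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one pair: -log(sigmoid(beta * margin)).

    The margin measures how much more the policy favors the chosen response
    over the rejected one, relative to the frozen reference model.
    """
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Numerically stable evaluation of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


responses = ["3 + 5 = 8. The answer is 8.", "The answer is 9."]
pairs = build_preference_pairs(
    "How many apples does Tom have?",
    responses,
    is_preferred=lambda r: r.endswith("The answer is 8."),
)

# Toy log-probabilities: the policy identical to the reference, then a policy
# that has shifted probability toward the chosen response.
loss_neutral = dpo_loss(-5.0, -5.0, -5.0, -5.0)
loss_better = dpo_loss(-4.0, -6.0, -5.0, -5.0)
print(len(pairs), loss_neutral, loss_better)
```

The loss falls as the policy assigns relatively more probability to the chosen response than the reference does, which is what pushes the model toward its own better answers.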

Standout Results and Practical Implications

The paper reports quantitative results in which Self-refine Instruction-tuning outperforms traditional instruction-tuning across various reasoning tasks, both in-scenario (aligned with training examples) and out-scenario (where the tasks diverge from the training examples). This indicates not just improved reasoning skills but also a better ability to generalize those skills to new contexts, which matters for deploying SLMs in real-world applications where flexibility and adaptability are crucial.

What's Next in AI?

The method offers a systematic way to transfer high-quality reasoning capabilities from more powerful models to less demanding ones, potentially democratizing access to high-level AI reasoning. Looking forward, this methodology could lead to broader adoption of AI in diverse fields, from enhancing educational tools to powering intuitive user interfaces in software applications.

The continuous evolution of this self-refinement process may also prompt more robust forms of AI that can learn and adapt in live environments, ultimately requiring less human intervention in training sophisticated models.

The Big Picture

Self-refine Instruction-tuning appears to be a promising way to bridge the capability gap between LLMs and SLMs. By learning the reasoning strategies of their larger counterparts, smaller models can potentially take on more complex roles than previously deemed feasible, all while remaining efficient to operate.

This research showcases a practical roadmap for enhancing the generalization capability of AI without continually expanding the model size, steering us toward a future where smaller, smarter models could become ubiquitous collaborators in cognitive tasks.