Efficient Multimodal LLM for Chart Understanding with Visual Token Merging and Program-of-Thoughts Learning

Overview

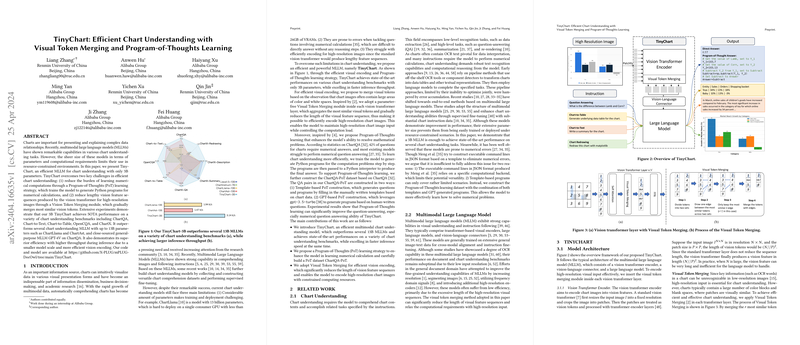

Zhang et al. introduce TinyChart, a multimodal LLM for chart understanding with only 3 billion parameters, far more compact than existing solutions. Two techniques account for its efficiency: Visual Token Merging, which shortens the visual feature sequence produced from high-resolution chart images, and Program-of-Thoughts (PoT) Learning, which teaches the model to answer numerical questions by generating Python programs. The reported experiments show that TinyChart reduces computational demands while outperforming models with up to 13 billion parameters across a suite of benchmarks.

Core Contributions

- TinyChart:

  - A streamlined model that achieves state-of-the-art performance on various chart understanding benchmarks.

  - Delivers higher inference throughput thanks to its reduced scale and efficient encoding methods.

- Program-of-Thoughts Learning:

  - Enhances numerical computation abilities in chart understanding tasks.

  - A new dataset, ChartQA-PoT, supports PoT learning with both template-based and GPT-generated programs.

- Visual Token Merging:

  - Handles high-resolution chart images efficiently by merging similar visual tokens, which keeps computational overhead under control.
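To make the idea of merging similar visual tokens concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the function names `cosine` and `merge_tokens`, the alternating split into two sets, the use of cosine similarity, and the averaging rule are all choices made for this example. The only property taken from the summary above is that similar tokens are merged so the sequence gets shorter.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def merge_tokens(tokens, r):
    """Merge the r most similar token pairs; returns len(tokens) - r tokens.

    Assumption for this sketch: tokens are split into two alternating sets,
    A (even positions) and B (odd positions). Each A token is matched to its
    most similar B token, and the r best matches are averaged into B.
    """
    set_a = tokens[0::2]
    set_b = tokens[1::2]
    r = max(0, min(r, len(set_a)))
    if r == 0 or not set_b:
        return [list(t) for t in tokens]

    # For every token in A, find its most similar partner in B.
    matches = []
    for i, a in enumerate(set_a):
        sims = [cosine(a, b) for b in set_b]
        j = max(range(len(set_b)), key=sims.__getitem__)
        matches.append((sims[j], i, j))

    # Keep only the r strongest matches; those A tokens are merged away.
    matches.sort(reverse=True)
    merged_away = {i: j for _, i, j in matches[:r]}

    # Average each B token with the A tokens merged into it.
    sums = [list(b) for b in set_b]
    counts = [1] * len(set_b)
    for i, j in merged_away.items():
        sums[j] = [s + x for s, x in zip(sums[j], set_a[i])]
        counts[j] += 1
    merged_b = [[s / c for s in vec] for vec, c in zip(sums, counts)]

    # Note: this sketch does not preserve the original token order.
    kept_a = [list(a) for i, a in enumerate(set_a) if i not in merged_away]
    return kept_a + merged_b


tokens = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]
merged = merge_tokens(tokens, r=2)
print(len(merged))  # 4
```

In this toy input the two duplicated pairs are merged first, so six tokens become four. Applying such a step repeatedly is one way a sequence could shrink without discarding image regions outright, which is why large uniform areas of a chart are natural candidates for merging.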

Technical Insights

- Model Architecture:

  - TinyChart integrates a vision transformer encoder with token merging capabilities, a vision-language connector, and an LLM for text generation.

  - The visual encoder applies visual token merging within each transformer layer, reducing the length of the feature sequence.

- Program-of-Thoughts Learning:

  - Trains the model to generate Python programs that carry out the numerical calculations a question requires, teaching it computational reasoning.

  - The ChartQA-PoT dataset combines programs built from manually curated templates with programs generated by GPT models.

- Efficiency and Performance:

  - Visual token merging lets the model handle high-resolution inputs without a proportional increase in computation, a key factor in maintaining efficiency.

  - TinyChart consistently outperforms larger models across several benchmarks, including ChartQA, Chart-to-Text, Chart-to-Table, and OpenCQA.
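The Program-of-Thoughts idea from the list above can be illustrated with a small sketch: instead of stating a number directly, the model emits a short Python program, and an interpreter computes the answer. Everything specific here is hypothetical: the question, the chart values, the `answer` variable convention, and the `run_pot_program` helper are invented for illustration and are not taken from ChartQA-PoT.

```python
# Hypothetical model output for the question:
# "What is the difference between the highest and lowest sales values?"
generated_program = """
sales = [120, 95, 143, 110]  # values read off the chart (made-up numbers)
highest = max(sales)
lowest = min(sales)
answer = highest - lowest
"""

# Small allow-list of built-ins the generated program may call.
# This limits accidents but is not a real security sandbox.
ALLOWED_BUILTINS = {
    "max": max, "min": min, "sum": sum,
    "len": len, "abs": abs, "round": round,
}


def run_pot_program(program):
    """Execute a generated program and return the value bound to `answer`."""
    namespace = {}
    exec(program, {"__builtins__": ALLOWED_BUILTINS}, namespace)
    return namespace["answer"]


print(run_pot_program(generated_program))  # 48
```

The split matters because the language model only has to get the reasoning steps right, while the arithmetic itself is delegated to the interpreter, where it cannot be miscalculated.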

Practical Implications and Future Research

TinyChart and its underlying methods open several avenues for further work in multimodal machine learning:

- Extended Applications:

  - The same strategies could be adapted to visual data beyond charts, potentially benefiting tasks in image recognition and visual media analysis.

- Optimization of Token Merging:

  - Future models might explore dynamic token merging strategies that adjust to context or content complexity, potentially offering even greater efficiency gains.

- Advanced Program-of-Thoughts Learning:

  - Investigating more sophisticated program generation techniques and expanding the training datasets might improve the handling of complex numerical reasoning tasks and reduce dependency on predefined templates.

Overall, the results suggest that multimodal LLMs can be made both more efficient and more capable in practical, resource-constrained scenarios. Such advances could lead to broader deployment of advanced AI technologies in everyday applications, making them accessible on a wider range of devices and platforms.