Simplified Integration of Mixture-of-Experts in ASR Models Achieves High Efficiency with Scaled Performance

Introduction

The evolution of neural network architectures for Automatic Speech Recognition (ASR) has consistently aimed to improve accuracy while keeping computational cost under control. Recent work has adopted Mixture-of-Experts (MoE) layers to grow model capacity without a proportional increase in computation. This research explores a straightforward approach: replacing every Feed-Forward Network (FFN) layer in both the encoder and the decoder of a Conformer-based ASR model with an MoE layer. Experiments on a 160,000-hour benchmarking dataset show that this simple substitution scales the model while keeping inference efficient, without compromising accuracy.

Model Architecture and Methodology

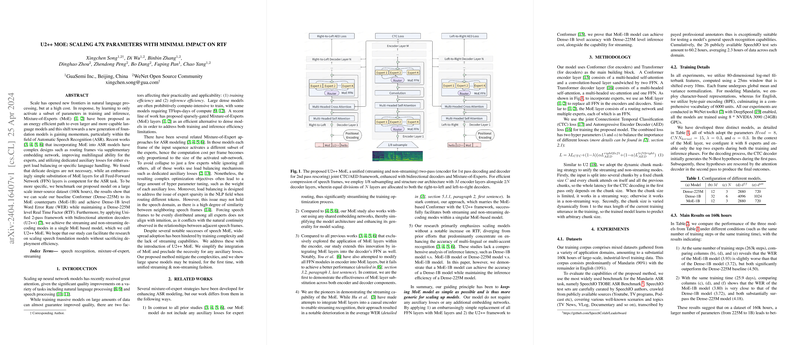

The encoder is a Conformer and the decoder is a Transformer. Each conventional FFN in these stacks is replaced by an MoE layer made up of multiple expert FFNs and a routing network that decides which experts process each input frame or token. Because only a few experts are active for any given input, the layer adds capacity (parameters) without a proportional increase in per-input computation. The model is built on the U2++ framework, which supports streaming and non-streaming recognition in a single model by training with dynamically sized attention chunks, so the same weights can be decoded in either mode.
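To make the FFN-to-MoE substitution concrete, here is a minimal pure-Python sketch of one MoE layer with top-k routing. It assumes a bias-free linear router, softmax renormalisation over the selected experts, and Swish-activated two-layer experts; these are common choices, not a claim about the paper's exact implementation. The class names, `n_experts=8`, and `top_k=2` are illustrative.

```python
import math
import random

def linear(x, w):
    """Multiply vector x (length d_in) by matrix w (d_out rows of length d_in)."""
    return [sum(xi * wi for xi, wi in zip(x, row)) for row in w]

def swish(v):
    # Swish/SiLU, the activation used in Conformer FFNs (assumed for the experts).
    return [t / (1.0 + math.exp(-t)) for t in v]

def softmax(v):
    m = max(v)
    e = [math.exp(t - m) for t in v]
    s = sum(e)
    return [t / s for t in e]

class Expert:
    """One expert: an ordinary two-layer FFN, like the layer it replaces."""
    def __init__(self, d_model, d_ff, rng):
        self.w1 = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_ff)]
        self.w2 = [[rng.gauss(0, 0.1) for _ in range(d_ff)] for _ in range(d_model)]

    def __call__(self, x):
        return linear(swish(linear(x, self.w1)), self.w2)

class MoELayer:
    """Hypothetical drop-in FFN replacement: a linear router plus n_experts FFNs."""
    def __init__(self, d_model, d_ff, n_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.router = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(n_experts)]
        self.experts = [Expert(d_model, d_ff, rng) for _ in range(n_experts)]
        self.top_k = top_k
        self.calls = [0] * n_experts  # how many times each expert was evaluated

    def __call__(self, x):
        logits = linear(x, self.router)
        # Keep only the top_k highest-scoring experts for this input.
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in top])  # renormalise over the selected experts
        out = [0.0] * len(x)
        for g, i in zip(gates, top):
            self.calls[i] += 1
            y = self.experts[i](x)  # unselected experts are never evaluated
            out = [o + g * yi for o, yi in zip(out, y)]
        return out

moe = MoELayer(d_model=4, d_ff=8, n_experts=8, top_k=2)
frames = [[random.Random(t).uniform(-1, 1) for _ in range(4)] for t in range(5)]
outputs = [moe(f) for f in frames]
print(len(outputs), len(outputs[0]), sum(moe.calls))  # 5 4 10
```

Each of the five frames runs exactly two of the eight experts, so total expert evaluations are 10 even though the layer stores eight FFNs' worth of weights. This is the sparsity that lets parameters grow faster than compute.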

- Encoder and Decoder Modification: All FFN layers are substituted with MoE layers, which consist of a routing network and several expert networks.

- Training Losses: Utilizes a combined loss function comprising Connectionist Temporal Classification (CTC) and Autoregressive Encoder Decoder (AED) losses without any auxiliary losses for load balancing or expert routing.

- Dynamic Chunk Masking: For streaming, the self-attention mask limits each frame to its own chunk and earlier chunks, and the chunk size varies during training. A single model can therefore decode with small chunks (streaming) or with full context (non-streaming).
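The combined training objective can be written as an interpolation of the two losses. The weight $\lambda$ is a hyperparameter of the U2 family of models; its value is not stated here, so treat it as an assumption:

$$
\mathcal{L} = \lambda\,\mathcal{L}_{\text{CTC}}(\mathbf{x}, \mathbf{y}) + (1 - \lambda)\,\mathcal{L}_{\text{AED}}(\mathbf{x}, \mathbf{y}), \qquad 0 \le \lambda \le 1
$$

Many MoE systems add a further term such as $\beta\,\mathcal{L}_{\text{balance}}$ to push the router toward even expert usage. The design described above omits it, so the router is trained only through the gradients of the two recognition losses, which reach it via the gate weights that scale each expert's output.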
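A chunk-based attention mask can be sketched as follows. This is a minimal illustration of the general technique, assuming frame-level chunks with no extra look-ahead; the function name `chunk_attention_mask` is hypothetical.

```python
def chunk_attention_mask(num_frames, chunk_size):
    """Return mask[i][j] == True if frame i may attend to frame j.

    Frame i sees every frame in its own chunk and in all earlier chunks,
    but nothing from later chunks, so look-ahead latency is bounded by
    chunk_size. Setting chunk_size >= num_frames gives full context.
    """
    return [[(j // chunk_size) <= (i // chunk_size) for j in range(num_frames)]
            for i in range(num_frames)]

for row in chunk_attention_mask(6, 2):
    print("".join("1" if allowed else "0" for allowed in row))
# 110000
# 110000
# 111100
# 111100
# 111111
# 111111
```

Varying `chunk_size` from batch to batch during training is what lets one set of weights serve both streaming (small chunks) and non-streaming (full context) decoding.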

Experimental Setup and Results

The experiments used a large-scale dataset that is predominantly Mandarin, with a smaller English portion. The MoE model was compared against two dense baselines, Dense-225M and Dense-1B. The MoE-1B model reached a Word Error Rate (WER) comparable to Dense-1B while preserving the real-time efficiency of Dense-225M under comparable computational conditions.

- WER and Model Efficiency: The MoE-1B model matches the WER of Dense-1B at a substantially lower inference cost, combining the accuracy of a larger model with practical deployability.

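- Inference Efficiency: In terms of Real-Time Factor (RTF), the MoE-1B model essentially matches Dense-225M even though its total parameter count is close to that of Dense-1B. This is possible because each input is processed only by the experts the router selects, so per-input computation depends on the active parameters rather than the total.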
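The efficiency argument reduces to simple arithmetic. The sketch below defines RTF (processing time divided by audio duration) and counts total versus per-input active parameters for a single MoE layer. The dimensions (`d_model=512`, `d_ff=2048`, 8 experts, top-2) and the timing numbers are hypothetical, not the configurations or measurements from this work, and the router is assumed to be a bias-free linear layer.

```python
def rtf(processing_seconds, audio_seconds):
    """Real-Time Factor: processing time / audio duration (lower is faster)."""
    return processing_seconds / audio_seconds

def ffn_params(d_model, d_ff):
    """Two weight matrices plus their biases."""
    return 2 * d_model * d_ff + d_ff + d_model

def moe_params(d_model, d_ff, n_experts, top_k):
    """Return (total, active-per-input) parameter counts for one MoE layer.

    Assumes a bias-free linear router with d_model * n_experts weights.
    """
    expert = ffn_params(d_model, d_ff)
    router = d_model * n_experts
    return router + n_experts * expert, router + top_k * expert

dense = ffn_params(512, 2048)
total, active = moe_params(512, 2048, n_experts=8, top_k=2)
print(dense, total, active)  # 2099712 16801792 4203520
print(rtf(3.0, 60.0))        # 0.05
```

With these hypothetical sizes, the MoE layer stores about 8x the parameters of the dense FFN but evaluates only about 2x per input. Capacity grows with `n_experts`, while per-input FFN compute grows only with `top_k`.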

Discussion on Streaming Abilities

A noteworthy aspect of this work is extending MoE integration to streaming recognition, which is often difficult for large-scale models. Training proceeds in two stages: a non-streaming model is first trained with full-context attention, and training then continues in a streaming-compatible configuration. With this approach, U2++ MoE meets real-time ASR processing demands without degrading performance.
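One way to express such a two-stage schedule is as a rule that picks the attention chunk size for each training step. The sketch below is hypothetical: the stage boundary `stage1_steps`, the 50% full-context probability in stage 2, and the 25-frame cap are illustrative assumptions, not values reported for this work.

```python
import random

def chunk_size_for_step(step, num_frames, stage1_steps, rng, max_chunk=25):
    """Hypothetical two-stage chunk-size schedule.

    Stage 1 (step < stage1_steps): always full context, i.e. a plain
    non-streaming model.
    Stage 2: dynamic chunk training; about half the batches keep full
    context and the rest use a random chunk of 1..max_chunk frames, so
    the same weights learn to decode in both modes.
    """
    if step < stage1_steps:
        return num_frames
    if rng.random() < 0.5:
        return num_frames
    return rng.randint(1, max_chunk)

rng = random.Random(0)
schedule = [chunk_size_for_step(s, 200, stage1_steps=5, rng=rng) for s in range(10)]
print(schedule[:5])  # [200, 200, 200, 200, 200]
```

Keeping some full-context batches in stage 2 is one way to retain non-streaming accuracy while the model adapts to restricted context.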

Future Implications and Developments

This research lays the groundwork for further exploration of simple yet effective scaling strategies for ASR systems, particularly for applying MoE layers to neural network architectures beyond Conformers. The findings encourage MoE models that prioritize operational efficiency and deployment flexibility alongside accuracy, an approach that may extend beyond speech recognition to other AI domains that require large-scale models.