Overview of "LLMs Know What They Need: Leveraging a Missing Information Guided Framework to Empower Retrieval-Augmented Generation"

The paper "LLMs Know What They Need: Leveraging a Missing Information Guided Framework to Empower Retrieval-Augmented Generation" presents MIGRES (Missing Information Guided Retrieve-Extraction-Solving), a framework that enhances Retrieval-Augmented Generation (RAG) by having the LLM identify which information is still missing and use that to guide retrieval. LLMs are prone to hallucinations and inaccuracies when their knowledge is outdated. MIGRES addresses this by driving retrieval dynamically from the identified gaps rather than relying on a static retrieval step.

Methodology and Framework

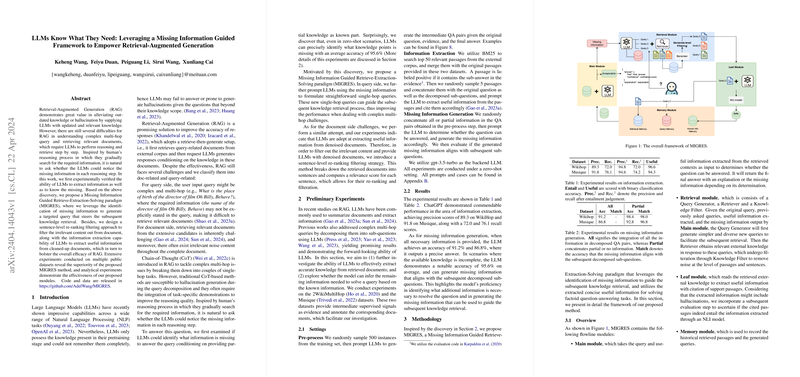

MIGRES is organized into the following modules, which together drive the RAG process:

- Main Module: This component determines whether the information gathered so far suffices to answer the query. If it does, the module returns an answer; if not, it states what information is missing.

- Retrieval Module: Composed of a Query Generator, a Retriever, and a Knowledge Filter, this module generates targeted queries from the identified missing information, retrieves relevant documents, and filters out noisy content. Notably, it applies sentence-level re-ranking to improve retrieval quality.

- Leaf Module: Dedicated to extracting useful information from the filtered documents, this module ensures the reliability of information through entailment checks using Natural Language Inference (NLI) models.

- Memory Module: This module tracks the history of the process so that queries are not repeated and retrieval stays efficient.
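The interaction between the modules above can be sketched as a loop. The following is a minimal, illustrative sketch and not the authors' implementation: every name (`Memory`, `main_module`, `retrieve`, `leaf_module`, `solve`), the toy corpus, and the keyword-overlap retriever are assumptions made for illustration. In the framework as described, the main module, query generation, and extraction are handled by an LLM, and the entailment check by an NLI model; here they are replaced by simple rule-based stubs so the control flow can be run end to end.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical toy corpus standing in for a real document index.
CORPUS = [
    "Inception was directed by Christopher Nolan.",
    "Christopher Nolan was born in London.",
    "Paris is the capital of France.",
]
STOPWORDS = {"who", "where", "was", "the", "of", "is", "in", "by"}


@dataclass
class Memory:
    """Memory Module stand-in: remembers issued queries and kept facts."""
    asked: Set[str] = field(default_factory=set)
    facts: List[str] = field(default_factory=list)


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def main_module(question: str, facts: List[str]) -> Tuple[Optional[str], Optional[str]]:
    """Main Module stub: return (answer, None) or (None, missing_information).

    Hard-coded for the single demo question; an LLM would make this decision.
    """
    director = next((f for f in facts if "directed by" in f), None)
    if director is None:
        return None, "Who directed Inception?"
    name = director.split("directed by ")[1].rstrip(".")
    birth = next((f for f in facts if f.startswith(name) and "born in" in f), None)
    if birth is None:
        return None, f"Where was {name} born?"
    return birth.split("born in ")[1].rstrip("."), None


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Retrieval Module stub: rank by keyword overlap and drop zero-overlap noise."""
    q = tokens(query) - STOPWORDS
    scored = [(len(q & tokens(doc)), doc) for doc in corpus]
    ranked = sorted(scored, key=lambda pair: -pair[0])[:k]
    return [doc for score, doc in ranked if score > 0]


def leaf_module(docs: List[str], known: List[str]) -> List[str]:
    """Leaf Module stub: keep new sentences (an NLI entailment check would go here)."""
    return [d for d in docs if d not in known]


def solve(question: str, corpus: List[str], max_steps: int = 5) -> Tuple[Optional[str], Memory]:
    memory = Memory()
    for _ in range(max_steps):
        answer, missing = main_module(question, memory.facts)
        if answer is not None:
            return answer, memory
        query = missing  # Query Generator stub: reuse the missing information as the query
        if query in memory.asked:  # avoid repeating a query that already failed
            break
        memory.asked.add(query)
        memory.facts.extend(leaf_module(retrieve(query, corpus), memory.facts))
    return None, memory


answer, memory = solve("Where was the director of Inception born?", CORPUS)
print(answer, sorted(memory.asked))
```

In this toy run the loop issues two queries, one per missing fact, before the main-module stub has enough information to answer. The point of the sketch is the control flow: each retrieval is driven by what is still missing, not by the original question alone.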

Experimental Design and Results

The authors evaluate MIGRES on multi-hop and open-domain question answering across several datasets: 2WikiMultiHopQA, HotpotQA, MuSiQue, Natural Questions (NQ), and TriviaQA. According to the paper, the framework outperforms existing methods on these tasks, achieving higher exact match scores and extracting knowledge effectively even in zero-shot settings.
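For readers unfamiliar with the metric, exact match is commonly computed by normalizing the predicted and reference answers and checking for string equality. The sketch below follows the widely used SQuAD-style normalization (lowercasing, removing punctuation and English articles, collapsing whitespace). Whether the paper uses exactly this normalization is an assumption, and the function names `normalize` and `exact_match` are illustrative.

```python
import re
import string
from typing import List


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: List[str]) -> float:
    """Return 1.0 if the prediction matches any reference after normalization."""
    pred = normalize(prediction)
    return float(any(pred == normalize(gold) for gold in gold_answers))


print(exact_match("The Eiffel Tower.", ["Eiffel Tower"]))
print(exact_match("Paris, France", ["Paris"]))
```

The first call counts as a match because the article and trailing period are normalized away; the second does not, because extra tokens remain after normalization. A dataset-level score is the mean of this value over all questions.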

Implications and Future Directions

MIGRES advances RAG by combining dynamic retrieval with fine-grained information filtering. This could lead to more reliable conversational agents and information retrieval systems that formulate queries dynamically and make better use of context. Future work might explore applications in real-time systems and investigate how well the framework scales when integrated with evolving LLM architectures.

Conclusion

The paper offers an innovative approach to the challenges LLMs face when their knowledge is incomplete or out of date. By letting the system identify its own information gaps and search iteratively to fill them, MIGRES makes both retrieval and response generation more robust. This holds promise for more adaptable and accurate knowledge systems across AI-driven applications.