Cross-Lingual Representation Alignment and Transfer in Multilingual Models: Insights from mOthello

Introduction to mOthello

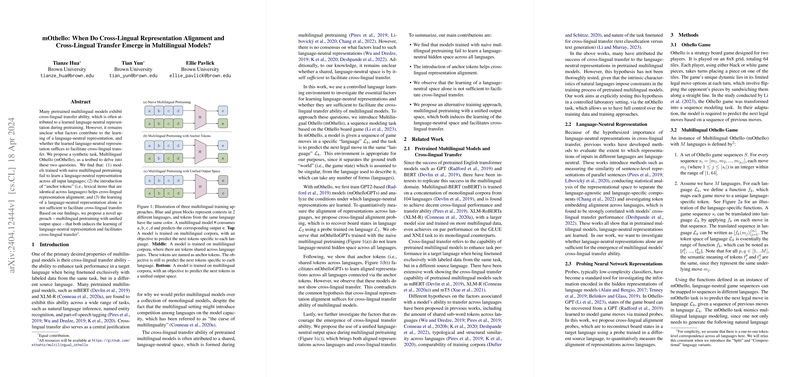

This paper introduces Multilingual Othello (mOthello), a synthetic task for analyzing cross-lingual representation alignment and cross-lingual transfer in pretrained multilingual models. The authors ask three questions: whether naive multilingual pretraining yields language-neutral representations, what role "anchor tokens" play in facilitating alignment, and whether language-neutral representations alone are sufficient for cross-lingual transfer.

Key Findings of the Study

1. Naive Multilingual Pretraining

The paper finds that models trained with naive multilingual pretraining do not necessarily learn language-neutral representations that generalize across all languages. Naive pretraining on its own is therefore a weak basis for building models with real cross-lingual capability.

2. Role of Anchor Tokens

Introducing anchor tokens (lexical items shared across multiple languages) significantly helps align representations across languages. This intervention appears to encourage a shared, language-neutral representation space and to make the model's multilingual representations more internally consistent.
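To make the idea of anchor tokens concrete, here is a minimal sketch of how two synthetic "languages" over Othello moves might share a few tokens. The token formats (`A:c4`, `<c4>`), the helper names `build_vocab` and `translate`, and the choice of six anchors are all hypothetical and chosen for illustration; they are not taken from the paper.

```python
import random

# The 60 playable Othello squares (the four centre squares start occupied).
COLUMNS = "abcdefgh"
CENTRE = {"d4", "d5", "e4", "e5"}
MOVES = [f"{c}{r}" for c in COLUMNS for r in range(1, 9) if f"{c}{r}" not in CENTRE]


def build_vocab(lang, anchors):
    """Map each move to a language-specific token, except anchor moves,
    which keep one shared token in every language (hypothetical scheme)."""
    return {m: (f"<{m}>" if m in anchors else f"{lang}:{m}") for m in MOVES}


def translate(game, vocab):
    """Render a game (a list of moves) as a token sequence in one language."""
    return [vocab[m] for m in game]


rng = random.Random(0)
anchors = set(rng.sample(MOVES, 6))  # assumed anchor count, for illustration only
vocab_a = build_vocab("A", anchors)
vocab_b = build_vocab("B", anchors)

# Tokens that appear in both languages are exactly the anchor tokens.
shared = set(vocab_a.values()) & set(vocab_b.values())
```

In this sketch the two vocabularies overlap only on the anchor moves, so any alignment pressure between the languages has to come through those shared tokens.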

3. Insufficiency for Cross-Lingual Transfer

Contrary to common assumptions in the field, the paper presents evidence that the mere presence of language-neutral representations does not guarantee effective cross-lingual transfer. Other factors or training methods may be needed to obtain robust cross-lingual abilities.

Methodological Innovations

mOthello Task and Cross-Lingual Probing

The mOthello task and cross-lingual alignment probing are methodological advances that let the authors study model behavior in a controlled, transparent setting. Experiments on language representation and alignment can be run precisely, without the many confounders present in natural language processing tasks.
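The intuition behind cross-lingual alignment probing can be sketched as follows: fit a probe on hidden states from one language, then check whether it still decodes the same property from another language's hidden states. The sketch below is an assumption-heavy toy. It uses a nearest-centroid classifier as a stand-in for a trained probe, fabricated three-dimensional "hidden states", and a hypothetical three-way label for one board square; none of this reproduces the paper's actual probe or data.

```python
import random


def fit_centroids(vectors, labels):
    """Fit a nearest-centroid probe: one mean vector per label."""
    sums, counts = {}, {}
    for v, y in zip(vectors, labels):
        acc = sums.setdefault(y, [0.0] * len(v))
        for i, x in enumerate(v):
            acc[i] += x
        counts[y] = counts.get(y, 0) + 1
    return {y: [x / counts[y] for x in acc] for y, acc in sums.items()}


def predict(centroids, v):
    """Return the label whose centroid is closest to v (squared distance)."""
    return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(v, centroids[y])))


def accuracy(centroids, vectors, labels):
    """Fraction of vectors whose predicted label matches the true label."""
    hits = sum(predict(centroids, v) == y for v, y in zip(vectors, labels))
    return hits / len(labels)


def toy_states(rng, n, flip):
    """Toy hidden states for one board square; labels 0/1/2 are hypothetical
    square states. flip=True simulates a language whose encoding of the
    same state is NOT aligned with the probe's training language."""
    protos = {0: [1.0, 0.0, 0.0], 1: [0.0, 1.0, 0.0], 2: [0.0, 0.0, 1.0]}
    data, labels = [], []
    for _ in range(n):
        y = rng.randrange(3)
        p = protos[(y + 1) % 3] if flip else protos[y]
        data.append([x + rng.gauss(0, 0.05) for x in p])
        labels.append(y)
    return data, labels


rng = random.Random(0)
train_x, train_y = toy_states(rng, 300, flip=False)      # language A
aligned_x, aligned_y = toy_states(rng, 300, flip=False)  # language B, aligned
skewed_x, skewed_y = toy_states(rng, 300, flip=True)     # language B, unaligned

probe = fit_centroids(train_x, train_y)                  # trained on A only
aligned_acc = accuracy(probe, aligned_x, aligned_y)
skewed_acc = accuracy(probe, skewed_x, skewed_y)
```

High accuracy when the probe is reused on the second language suggests that both languages encode the property in the same way; a collapse in accuracy suggests the representations are language-specific.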

Unified Output Space Training

The paper proposes a training framework built on a "unified output space," which both fosters the learning of shared representations and significantly improves cross-lingual transfer. The approach marks a shift in strategy that may guide future research on multilingual model training.
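One way to picture a unified output space is at the level of training examples: inputs stay language-specific, while prediction targets are drawn from a single shared vocabulary. The sketch below assumes a next-move prediction setup with hypothetical token formats and a hypothetical helper `make_example`; the paper's exact data pipeline may differ.

```python
def make_example(game, lang, unified):
    """Build (input_tokens, target_tokens) for next-move prediction.

    Inputs are always language-specific. Targets are language-specific in
    the naive setup and shared, language-neutral move tokens when
    `unified` is True (token formats here are hypothetical)."""
    inputs = [f"{lang}:{m}" for m in game[:-1]]
    targets = [m if unified else f"{lang}:{m}" for m in game[1:]]
    return inputs, targets


# Illustrative move sequence; legality is not checked in this sketch.
game = ["f5", "d6", "c3", "d3"]

naive_a = make_example(game, "A", unified=False)
naive_b = make_example(game, "B", unified=False)
unified_a = make_example(game, "A", unified=True)
unified_b = make_example(game, "B", unified=True)
```

Under the naive setup the two languages never share a target token, whereas under the unified setup the same game produces identical targets in every language, so the output layer is trained on a common label set.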

Practical Implications

The findings suggest that practitioners and researchers should reconsider how far traditional multilingual pretraining paradigms can be relied on. In particular, counting on representation alignment alone to produce cross-lingual capabilities may be misguided unless it is combined with mechanisms such as a unified output space.

Theoretical Contributions

The paper's main theoretical contribution is to challenge the previously held belief that aligned, language-neutral representations suffice for cross-lingual transfer. It opens new avenues for exploring how language-specific and language-neutral training strategies interact in multilingual settings.

Future Directions

Given these findings and methods, future research could test whether unified output space training carries over to more complex, real-world scenarios across diverse multilingual datasets. Further work could also look for other latent factors or training strategies that complement or strengthen the effects observed with anchor tokens and unified output spaces.

In conclusion, this paper not only highlights critical limitations in existing multilingual training paradigms but also sets the stage for more nuanced, effective training frameworks that might better harness the full potential of multilingual models.