Analysis of "SegFormer3D: an Efficient Transformer for 3D Medical Image Segmentation"

Shehan Perera et al. introduce SegFormer3D, a resource-conscious Vision Transformer (ViT) architecture designed specifically for 3D medical image segmentation. The paper examines how well Transformer-based architectures fit the particular demands of medical image segmentation, and proposes SegFormer3D as a response to the large, computationally intensive models that currently dominate the domain.

Background and Contributions

3D medical image segmentation is a key task in medical image analysis and has traditionally relied on convolutional neural networks (CNNs). Because of their localized receptive fields, CNNs often struggle to capture global context, which has prompted a shift towards Transformer-based solutions that use global attention and have demonstrated superior performance. Nevertheless, state-of-the-art (SOTA) Transformer architectures tend to be large, demand significant computational resources, and often generalize poorly because of limited data availability in the medical field.

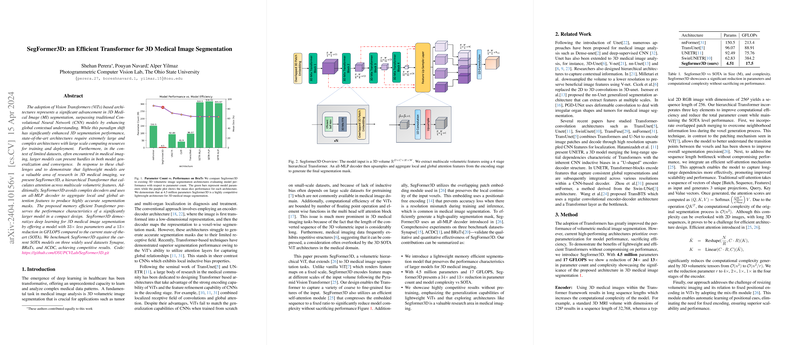

The core contribution of the paper is SegFormer3D, a hierarchical Transformer that addresses these challenges by prioritizing computational efficiency while maintaining competitive performance. It computes attention across multi-scale volumetric features and uses an all-MLP decoder to aggregate local and global features without the complexity of traditional Transformer decoders. Compared with the existing SOTA, SegFormer3D has 33 times fewer parameters and requires 13 times fewer GFLOPS, which demonstrates its memory and compute efficiency without a significant loss in performance.

Methodology

SegFormer3D distinguishes itself through several methodological aspects:

- Hierarchical Design: The model employs a 4-stage hierarchical Transformer encoder that produces multi-scale volumetric features, so that structures are represented at several resolutions rather than at a single fixed scale.

- Efficient Attention Mechanism: SegFormer3D introduces a self-attention mechanism tailored for efficiency, compressing the sequence length to reduce computational overhead significantly.

- Overlapping Patch Merging: Patches are extracted with overlap so that neighborhood information is preserved across patch boundaries during voxel generation, with the aim of improving segmentation accuracy.

- All-MLP Decoder: Instead of complex deconvolutional networks, SegFormer3D utilizes an all-MLP decoder, simplifying the process of generating high-quality segmentation masks and contributing to the model's efficiency.
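
To make the effect of the hierarchical design, overlapping patches, and sequence compression more concrete, the following is a minimal arithmetic sketch rather than the authors' implementation. It counts the tokens produced at each of the four stages and compares the size of the attention score matrix with and without shortening the key/value sequence by a ratio R, which reduces the cost from roughly N × N to N × (N / R). The input size, the `(kernel, stride, padding)` settings in `PATCH_CFGS`, and the ratios in `REDUCTIONS` are illustrative assumptions and are not taken from the paper; all function names are hypothetical.

```python
from typing import List, Tuple


def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a strided convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def stage_token_counts(side: int, patch_cfgs: List[Tuple[int, int, int]]) -> List[int]:
    """Token count per stage for a cubic input volume of the given side length.

    Each config is (kernel, stride, padding). A kernel larger than the stride
    means neighbouring patches overlap.
    """
    counts = []
    for kernel, stride, padding in patch_cfgs:
        side = conv_output_size(side, kernel, stride, padding)
        counts.append(side ** 3)  # one token per voxel of the downsampled grid
    return counts


def attention_score_entries(tokens: int, reduction: int) -> Tuple[int, int]:
    """Return (full, reduced) entry counts of the query-key score matrix.

    full    = N * N          (standard self-attention)
    reduced = N * (N // R)   (key/value sequence shortened by a factor R)
    """
    if tokens % reduction:
        raise ValueError("token count must be divisible by the reduction ratio")
    return tokens * tokens, tokens * (tokens // reduction)


# Assumed, illustrative settings (not taken from the paper).
PATCH_CFGS = [(7, 4, 3), (3, 2, 1), (3, 2, 1), (3, 2, 1)]
REDUCTIONS = [64, 8, 1, 1]

if __name__ == "__main__":
    counts = stage_token_counts(128, PATCH_CFGS)
    for stage, (n, r) in enumerate(zip(counts, REDUCTIONS), start=1):
        full, reduced = attention_score_entries(n, r)
        print(f"stage {stage}: N={n}, R={r}, full={full}, reduced={reduced}")
```

Under these assumed settings, a 128-voxel cube yields 32,768 tokens at the first stage, where the reduction has the largest effect; at the later, coarser stages the sequence is already short and little or no compression is needed.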

The authors validate SegFormer3D through experiments on three widely used datasets: Synapse, BraTS, and ACDC. The results show mean Dice scores that are competitive with larger models such as nnFormer and TransUNet, at significantly lower computational and memory cost.
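
For readers unfamiliar with the metric, the Dice score for a class is 2|A ∩ B| / (|A| + |B|), where A and B are the predicted and reference voxel sets for that class, and the mean Dice averages this over the classes of interest. The sketch below illustrates the computation on flattened label lists. The helper names and the toy labels are hypothetical, and returning 1.0 when a class is absent from both prediction and reference is one common convention, assumed here rather than taken from the paper.

```python
from typing import List, Sequence


def dice_score(pred: Sequence[int], target: Sequence[int], label: int) -> float:
    """Dice coefficient for one class over two equally long label sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    pred_hits = [p == label for p in pred]
    target_hits = [t == label for t in target]
    intersection = sum(p and t for p, t in zip(pred_hits, target_hits))
    denom = sum(pred_hits) + sum(target_hits)
    if denom == 0:
        return 1.0  # assumed convention: class absent in both counts as a match
    return 2 * intersection / denom


def mean_dice(pred: Sequence[int], target: Sequence[int], labels: List[int]) -> float:
    """Unweighted average of per-class Dice scores."""
    return sum(dice_score(pred, target, label) for label in labels) / len(labels)


if __name__ == "__main__":
    # Toy flattened segmentation with background (0) and two foreground classes.
    pred = [0, 1, 1, 2, 2, 0]
    target = [0, 1, 2, 2, 2, 0]
    print(mean_dice(pred, target, labels=[1, 2]))
```

In this toy case the per-class scores are 2/3 for class 1 and 4/5 for class 2; background is excluded from the average, which is a choice of the example rather than a statement about the paper's evaluation protocol.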

Implications and Future Directions

SegFormer3D makes sophisticated 3D image segmentation models more accessible by significantly lowering the computational resources required for deployment. By demonstrating that a lightweight model can perform competitively, the paper points to a future in which medical image analysis is more widely available, especially in environments with constrained access to large-scale computational infrastructure.

On the theoretical side, the success of SegFormer3D's architecture may point to broader applicability of memory-efficient Transformers in other areas of AI and image analysis. The hierarchical, efficient attention-based methodology could be explored further in other domains that require context-rich feature extraction from complex datasets.

Finally, SegFormer3D underscores the potential of lightweight Transformers as a research pathway toward more sustainable AI that balances performance with resource consumption, an increasingly pressing concern as models grow in complexity and application areas broaden.

Conclusion

SegFormer3D is a noteworthy contribution to the field of 3D medical image segmentation, showing that efficiency and performance need not be mutually exclusive in deep learning architectures. With its significantly reduced parameter count and computational demands, SegFormer3D is an attractive option for researchers and practitioners focused on practical, resource-conscious AI deployments in medical imaging. Future work may further improve the balance between model size, efficiency, and segmentation performance, and may inspire similar designs in other machine learning tasks.