TextHawk: Advancements in Multimodal LLMs for Document-Oriented Tasks

Introduction

Multimodal large language models (MLLMs) have advanced significantly with the arrival of models that can understand and generate information across modalities, notably visual and textual. Document-oriented tasks stand out among their applications because they involve high-resolution images densely packed with information. The challenge is twofold: achieving fine-grained visual perception and compressing document image information efficiently. TextHawk is a specialized MLLM that targets both challenges while maintaining robust general capabilities across vision and language domains.

Document-Oriented MLLMs and Their Limitations

Earlier MLLMs have tried to improve fine-grained visual perception and information compression with methods such as increased input resolution and vision-language adapters. However, these approaches often fail to balance general and document-specific capabilities, which leaves room for further work.

TextHawk: Core Components and Innovations

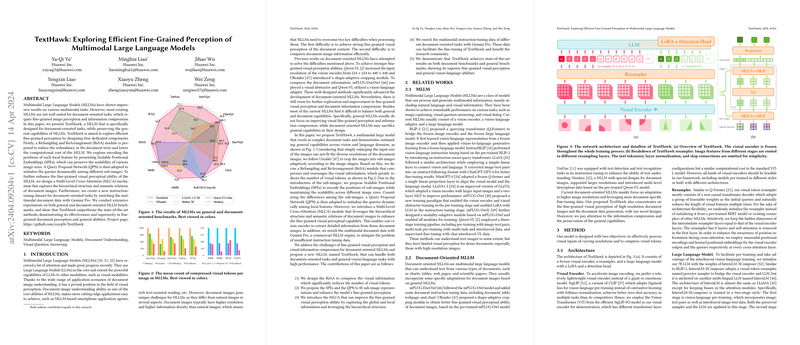

TextHawk introduces four components designed to meet the demands of document-oriented tasks:

- ReSampling and ReArrangement (ReSA): A module that compresses visual information, reducing the number of visual tokens needed to represent a document image and thereby lowering computational cost.

- Scalable Positional Embeddings (SPEs): These encode the positions of sub-images efficiently and scale to varying image sizes.

- Query Proposal Network (QPN): This component initializes queries dynamically for each sub-image, addressing the variability inherent in document images.

- Multi-Level Cross-Attention (MLCA): This component enhances fine-grained visual perception by leveraging the hierarchical structure and semantic relations within document images.
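To make the idea of position encodings that scale with the number of sub-images more concrete, here is a minimal sketch. It is not TextHawk's implementation. It assumes that only the endpoint embeddings of each axis are stored, that intermediate positions are obtained by linear interpolation, and that row and column embeddings are summed; the function names and the toy 2-D vectors are hypothetical.

```python
from typing import List

Vector = List[float]


def lerp(a: Vector, b: Vector, t: float) -> Vector:
    """Linearly interpolate between two embedding vectors (t in [0, 1])."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def scalable_positions(start: Vector, end: Vector, n: int) -> List[Vector]:
    """Return n embeddings evenly spaced between two endpoint embeddings."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if n == 1:
        return [list(start)]
    return [lerp(start, end, i / (n - 1)) for i in range(n)]


def grid_embeddings(
    row_start: Vector,
    row_end: Vector,
    col_start: Vector,
    col_end: Vector,
    rows: int,
    cols: int,
) -> List[List[Vector]]:
    """Build one embedding per sub-image by summing row and column embeddings.

    Assumption for illustration: the grid position of a sub-image is encoded
    as row embedding + column embedding.
    """
    row_embs = scalable_positions(row_start, row_end, rows)
    col_embs = scalable_positions(col_start, col_end, cols)
    return [
        [[r + c for r, c in zip(row_vec, col_vec)] for col_vec in col_embs]
        for row_vec in row_embs
    ]


# Toy 2-D endpoints standing in for learned parameters.
grid = grid_embeddings(
    [0.0, 0.0], [1.0, 0.0], [0.0, 0.0], [0.0, 1.0], rows=3, cols=2
)
```

Because only four endpoint vectors are stored in this sketch, the same parameters serve a 3x2 grid or a 10x7 grid, which is the scalability property the SPE bullet describes.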

TextHawk is also accompanied by a new instruction-tuning dataset tailored for document-oriented tasks, which complements an architecture designed for fine-grained perception and information compression.

Empirical Validation

TextHawk has been evaluated on both general and document-oriented MLLM benchmarks. It outperforms state-of-the-art methods, which supports its effectiveness in fine-grained document perception while it maintains general vision-language capabilities.

Ablation Studies and Insights

A series of ablation studies sheds light on the contributions of TextHawk’s individual components:

- Combining ReSA's two steps, resampling and rearrangement, significantly reduces the number of visual tokens, enabling more efficient processing of high-resolution document images.

- SPEs and QPN together improve the model’s perception capabilities, helping it handle the diversity and complexity of document-oriented tasks.

- MLCA's use of multi-level features improves fine-grained perception, which is essential for document image understanding.
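The token savings discussed in the first ablation point can be illustrated with some simple arithmetic. The sketch below is a hedged illustration, not TextHawk's code: it assumes that resampling reduces the token count by a fixed ratio and that rearrangement concatenates groups of consecutive tokens along the feature axis. The function names and all numbers are hypothetical and are not the paper's reported settings.

```python
from typing import List


def rearrange(tokens: List[List[float]], group: int) -> List[List[float]]:
    """Concatenate each run of `group` consecutive tokens along the feature axis.

    The result has len(tokens) / group tokens, each `group` times wider.
    """
    if group < 1 or len(tokens) % group != 0:
        raise ValueError("token count must be divisible by group")
    return [
        [x for tok in tokens[i:i + group] for x in tok]
        for i in range(0, len(tokens), group)
    ]


def visual_token_count(
    rows: int,
    cols: int,
    tokens_per_sub_image: int,
    resample_ratio: int = 1,
    rearrange_group: int = 1,
) -> int:
    """Count visual tokens passed to the language model for a grid of sub-images.

    Assumption for illustration: both compression steps divide the token
    count by a fixed integer factor.
    """
    total = rows * cols * tokens_per_sub_image
    factor = resample_ratio * rearrange_group
    if total % factor != 0:
        raise ValueError("token count must be divisible by the compression factor")
    return total // factor


# Hypothetical document image split into a 3x2 grid of sub-images,
# each producing 256 tokens from the visual encoder.
baseline = visual_token_count(3, 2, 256)
resample_only = visual_token_count(3, 2, 256, resample_ratio=4)
both_steps = visual_token_count(3, 2, 256, resample_ratio=4, rearrange_group=4)

# Rearrangement on a toy sequence: 4 tokens of width 2 become 2 tokens of width 4.
merged = rearrange([[1, 2], [3, 4], [5, 6], [7, 8]], group=2)
```

With these illustrative numbers, the two steps multiply: 1536 tokens drop to 384 after resampling alone and to 96 when rearrangement is added. Rearrangement trades sequence length for wider tokens rather than discarding features.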

Limitations and Future Directions

While TextHawk marks a notable advance, its visual encoder is frozen during training, which leaves room for improvement. Future work could adaptively train the vision encoder on task-specific data to refine and expand its perception capabilities.

Conclusion

TextHawk is a significant step forward for document-oriented MLLMs. By addressing fine-grained visual perception and efficient information compression together, it sets a strong reference point for future work in the field. Its state-of-the-art performance across a wide range of benchmarks suggests it can support advanced document image understanding applications and narrow the gap between general-purpose multimodal LLMs and the specific requirements of document-oriented tasks.