ALOHa: Advancing Object Hallucination Detection in Image Captioning with LLMs

Introduction to ALOHa and Its Contributions

Recent vision-language models have pushed the boundaries of image caption generation. Despite these advances, object hallucination, where a caption mentions objects that are not present in the image, remains a significant challenge. The paper introduces ALOHa (A New Measure for Hallucination in Captioning Models), an approach that uses LLMs to identify and quantify object hallucinations in captions more effectively than existing metrics. By combining semantic understanding with open-vocabulary object extraction, ALOHa is a step forward in evaluating and improving the reliability of automated captioning systems.

Understanding Object Hallucination Metrics

Prior methods for detecting object hallucinations in image captions, such as CHAIR, rely on a fixed set of object categories and their synonyms drawn from existing datasets like MS COCO. This works within its domain but does not generalize to captions that mention objects outside the predefined set, because such objects are simply never checked. ALOHa instead introduces an open-vocabulary metric, using LLMs for object extraction and semantic similarity for matching, so it can handle a much wider range of objects and scenes.
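To make this limitation concrete, here is a minimal CHAIR-style sketch in Python. It is illustrative only: the `SYNONYMS` table is a tiny hypothetical stand-in for CHAIR's MS COCO category and synonym lists, the function names are made up, and tokenization is deliberately naive. CHAIR_i is the fraction of mentioned objects that are hallucinated, and CHAIR_s is the fraction of captions containing at least one hallucinated object.

```python
# Minimal CHAIR-style sketch (illustrative, not the original implementation).
# SYNONYMS is a tiny hypothetical stand-in for the MS COCO category/synonym lists.
SYNONYMS = {
    "dog": "dog", "puppy": "dog",
    "cat": "cat", "kitten": "cat",
    "frisbee": "frisbee",
    "person": "person", "man": "person", "woman": "person",
}


def mentioned_objects(caption: str) -> set[str]:
    """Map caption words to canonical categories; out-of-vocabulary words are ignored."""
    words = caption.lower().replace(".", " ").replace(",", " ").split()
    return {SYNONYMS[w] for w in words if w in SYNONYMS}


def chair(captions: list[str], image_objects: list[set[str]]) -> tuple[float, float]:
    """Return (CHAIR_i, CHAIR_s) for captions paired with ground-truth object sets."""
    mentions = hallucinated = hallucinated_captions = 0
    for caption, truth in zip(captions, image_objects):
        found = mentioned_objects(caption)
        bad = found - truth  # mentioned in the caption but not present in the image
        mentions += len(found)
        hallucinated += len(bad)
        hallucinated_captions += 1 if bad else 0
    chair_i = hallucinated / mentions if mentions else 0.0
    chair_s = hallucinated_captions / len(captions) if captions else 0.0
    return chair_i, chair_s


captions = ["A man throws a frisbee to a dog.", "A kitten sits in a hammock."]
truth = [{"person", "frisbee"}, {"cat"}]
print(chair(captions, truth))  # (0.25, 0.5)
```

The hallucinated "dog" in the first caption is caught. If the second image contains no hammock, that hallucination goes unnoticed, because "hammock" is not in this toy vocabulary: exactly the blind spot an open-vocabulary metric aims to close.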

Methodological Innovations of ALOHa

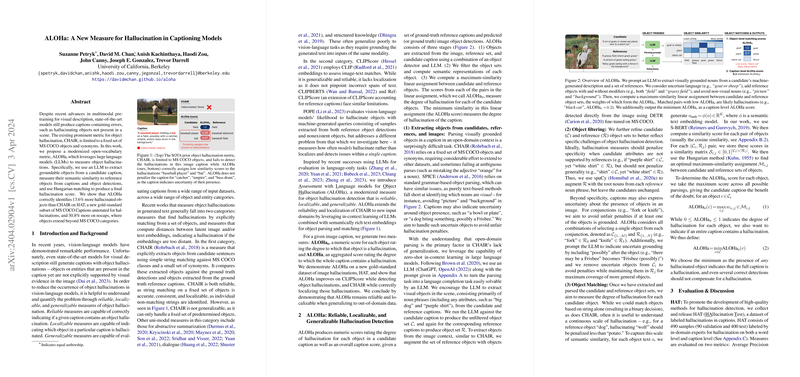

The ALOHa method involves three key stages:

- Object Extraction: An LLM extracts visually grounded objects from both the candidate caption and the references, taking context, ambiguity, and uncertain language into account.

- Object Set Refinement and Semantic Representation: The extracted object sets are refined to handle uncertainty (e.g., "a dog or a cat") and conjunctions in captions, and a semantic representation is computed for each object.

- Object Matching and Hallucination Scoring: Hungarian matching pairs each object in the candidate caption with a reference object, and each candidate object is scored by its semantic similarity to its match. ALOHa produces both object-level and caption-level hallucination scores, giving fine-grained insight into the presence and extent of hallucinations.
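The matching and scoring stage can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the three-dimensional `EMBED` vectors are hypothetical toy embeddings, `score_caption` is a made-up helper, the optimal one-to-one assignment is found by exhaustive search (which reaches the same optimum as the Hungarian algorithm but only scales to a few objects; `scipy.optimize.linear_sum_assignment` is the usual practical solver), unmatched candidate objects score 0, and the caption-level score is assumed to be the minimum object score.

```python
import math
from itertools import permutations

# Hypothetical toy embeddings; a real system would use a learned text encoder.
EMBED = {
    "dog": (0.9, 0.1, 0.0),
    "puppy": (0.85, 0.2, 0.05),
    "frisbee": (0.1, 0.9, 0.1),
    "cat": (0.7, 0.0, 0.5),
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def score_caption(candidate, reference):
    """Match candidate objects one-to-one to reference objects, maximizing total similarity.

    Exhaustive search over assignments: same optimum as the Hungarian algorithm,
    but exponential, so only suitable for a handful of objects.
    Returns per-object scores and a caption-level score (min over objects, an assumption).
    """
    sim = [[cosine(EMBED[c], EMBED[r]) for r in reference] for c in candidate]
    # Add "unmatched" slots (None) only when candidates outnumber references.
    slots = list(range(len(reference))) + [None] * max(0, len(candidate) - len(reference))
    best_total, best = -math.inf, ()
    for assignment in permutations(slots, len(candidate)):
        total = sum(sim[i][j] for i, j in enumerate(assignment) if j is not None)
        if total > best_total:
            best_total, best = total, assignment
    object_scores = {
        obj: sim[i][j] if j is not None else 0.0  # unmatched objects score 0
        for i, (obj, j) in enumerate(zip(candidate, best))
    }
    caption_score = min(object_scores.values(), default=1.0)  # no objects: nothing to hallucinate
    return object_scores, caption_score


scores, caption = score_caption(["puppy", "frisbee", "cat"], ["dog", "frisbee"])
print({k: round(v, 2) for k, v in scores.items()}, round(caption, 2))
# {'puppy': 0.99, 'frisbee': 1.0, 'cat': 0.0} 0.0
```

Here "puppy" matches "dog" with high similarity even though the strings differ, which is what lets an embedding-based metric avoid a hand-built synonym list. Meanwhile "cat" has no reference counterpart left after the one-to-one matching, so it receives the lowest score and determines the caption-level score.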

Evaluating ALOHa

The paper evaluates ALOHa against CHAIR and other existing metrics such as CLIPScore. ALOHa detects hallucinated objects more reliably than these baselines, with significant improvements on HAT (a new gold-standard dataset of hallucination annotations introduced alongside ALOHa) and on nocaps, especially for objects outside the MS COCO categories. These results underline ALOHa's stronger generalization to new domains and object vocabularies.
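One standard way to run such a comparison is to treat each metric's caption-level score as a hallucination detector and check it against human labels, summarizing the result with average precision (AP). The Python sketch below computes non-interpolated AP; the scores and labels are made-up toy values, not results from the paper, and it assumes that a lower score means a caption is more likely hallucinated.

```python
def average_precision(scores, labels):
    """Non-interpolated AP for ranking hallucinated captions first.

    scores: caption-level scores, where LOWER means more likely hallucinated (an assumption).
    labels: 1 if human annotators marked the caption as hallucinated, else 0.
    Ties keep their input order (sorted() is stable), a simplification.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0])  # most suspicious first
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precisions.append(hits / rank)  # precision at each true hallucination
    return sum(precisions) / len(precisions) if precisions else 0.0


labels = [1, 0, 1, 0]  # toy human annotations
metric_a = [0.1, 0.9, 0.3, 0.8]  # ranks both hallucinated captions first
metric_b = [0.2, 0.1, 0.9, 0.5]  # interleaves them with clean captions
print(average_precision(metric_a, labels), average_precision(metric_b, labels))  # 1.0 0.5
```

A metric that consistently ranks hallucinated captions ahead of clean ones approaches an AP of 1.0, while one that mixes them scores lower.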

Implications and Future Directions

The introduction of ALOHa has several significant implications for the field:

- It highlights the potential of using LLMs not just for content generation but also for evaluative and analytical tasks in multimodal contexts.

- ALOHa's open-vocabulary approach opens new avenues for caption evaluation across more diverse datasets, which is crucial for developing systems that work across a wide range of applications.

- By detecting hallucinations at the level of individual objects, ALOHa can help improve the reliability and trustworthiness of automated captioning systems, especially in settings where accuracy is critical.

Future research could extend ALOHa's methodology to detect other kinds of errors in generated content, such as factual inaccuracies or incorrect object relations. Integrating LLMs with stronger object detectors could also further improve hallucination detection.

Conclusion

ALOHa is a meaningful advance in addressing object hallucination in automated image captioning. By leveraging the contextual understanding of LLMs and a flexible, open-vocabulary approach to hallucination detection, it sets a new standard for evaluating the accuracy and reliability of image captions. It offers a promising path toward both better caption generation models and more sophisticated evaluation metrics in the vision-language domain.