On Translating Images for Cultural Relevance: A Preliminary Exploration

Introduction

Transcreation, the process of adapting content so that it keeps its essence across cultures, has become increasingly relevant in a multimedia-rich world. This paper introduces a new task: transcreating images to make visual content culturally relevant. Despite advances in large language models (LLMs) and generative AI, the automatic cultural adaptation of visual content remains largely unexplored. The paper contributes three pipelines built on state-of-the-art generative models for image transcreation, a comprehensive evaluation dataset, and an extensive human evaluation of how well these models culturally adapt images.

Pipelines for Image Transcreation

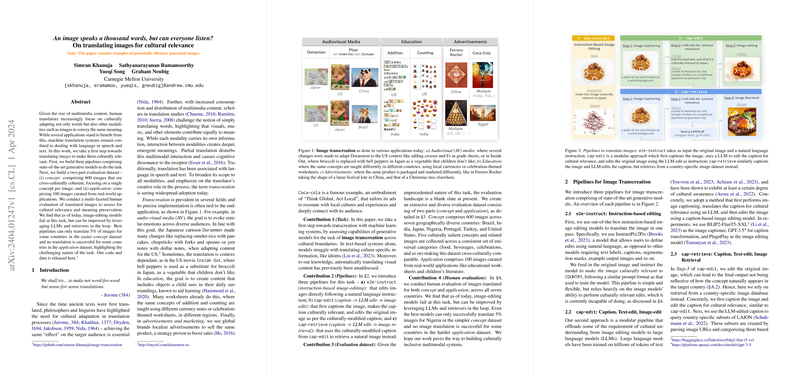

The task involves translating images to make them culturally relevant to a target audience without losing their original essence. Three distinct pipelines are proposed:

- e2e-instruct: This pipeline uses an instruction-based image editing model to adapt the image directly from a natural language instruction, aiming for a one-step transformation.

- cap-edit (caption -> LLM edit -> image edit): A modular approach that first generates a caption for the image, then modifies this caption to reflect cultural relevance using an LLM, and finally edits the original image based on this culturally adapted caption.

- cap-retrieve (caption -> LLM edit -> image retrieval): Similar to cap-edit in its initial steps but diverges by retrieving a relevant image from a country-specific dataset instead of editing the original image. This pipeline aims to find naturally occurring images that match the culturally adapted caption, potentially bypassing the limitations of direct image editing.
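
The three pipelines differ mainly in how their stages are composed, which can be sketched as below. This is only an illustrative outline: the type aliases, function names, and the wording of the instruction string are hypothetical placeholders, not the paper's code or any real library's API. Each model (captioner, LLM caption adapter, image editor, retriever) is passed in as a plain callable.

```python
from typing import Callable

# Hypothetical stand-ins: none of these names come from the paper.
Image = bytes                                   # placeholder image type
Captioner = Callable[[Image], str]              # image -> caption
CaptionAdapter = Callable[[str, str], str]      # (caption, country) -> adapted caption
ImageEditor = Callable[[Image, str], Image]     # (image, text prompt) -> edited image
Retriever = Callable[[str, str], Image]         # (caption, country) -> retrieved image


def e2e_instruct(image: Image, country: str, edit: ImageEditor) -> Image:
    """One step: edit the image directly from a natural language instruction."""
    # The exact instruction wording is an assumption made for this sketch.
    instruction = f"Make this image culturally relevant to {country}."
    return edit(image, instruction)


def cap_edit(image: Image, country: str, caption: Captioner,
             adapt: CaptionAdapter, edit: ImageEditor) -> Image:
    """Caption -> LLM edit of the caption -> edit the original image."""
    adapted = adapt(caption(image), country)
    return edit(image, adapted)


def cap_retrieve(image: Image, country: str, caption: Captioner,
                 adapt: CaptionAdapter, retrieve: Retriever) -> Image:
    """Caption -> LLM edit of the caption -> retrieve a country-specific image."""
    adapted = adapt(caption(image), country)
    return retrieve(adapted, country)
```

Written this way, cap-edit and cap-retrieve share the captioning and caption-adaptation stages and differ only in the final step, which matches the description above.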

Evaluation Dataset

Given the novel nature of this task, a new evaluation dataset consisting of two parts was created:

- Concept Dataset: This part contains 600 images that are inherently cross-culturally coherent. Each image focuses on a single concept, and the images are grouped into universal categories such as food, beverages, and celebrations, allowing for cross-cultural comparison.

- Application Dataset: Comprising 100 images curated from real-world applications such as educational worksheets and children's literature, this dataset is meant to ground the task in practical applications.

Human Evaluation and Findings

A multi-faceted human evaluation was conducted to assess the cultural relevance and meaning preservation of the translated images. The findings reveal significant challenges:

- Limited Success in Cultural Transcreation: On the concept dataset, even the best pipelines successfully translated only 5% of images for some countries, highlighting the task's difficulty. On the application dataset, some countries saw no successful translations at all.

- Model Limitations: Current generative models, especially those focused on direct image editing, struggle to grasp and incorporate cultural context effectively. However, leveraging LLMs for textual guidance shows promise in improving outcomes.

- Importance of Evaluation Dataset: The developed evaluation framework provides a starting point for assessing progress in this nascent area, revealing that the task requires significant further research to achieve satisfactory results.
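
Per-country success figures like those above can be read as an aggregation over human judgments. The following is a minimal sketch of such an aggregation, under stated assumptions: the `Judgment` record, its field names, and the rule that an image counts as successful only when it is judged both culturally relevant and meaning-preserving are illustrative choices, not the paper's documented protocol.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Judgment:
    """One human judgment of one output image (hypothetical schema)."""
    pipeline: str
    country: str
    culturally_relevant: bool
    meaning_preserved: bool


def success_rates(judgments: Iterable[Judgment]) -> Dict[Tuple[str, str], float]:
    """Return the fraction of successful images per (pipeline, country).

    Assumption: success requires both cultural relevance and
    meaning preservation.
    """
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    successes: Dict[Tuple[str, str], int] = defaultdict(int)
    for j in judgments:
        key = (j.pipeline, j.country)
        totals[key] += 1
        if j.culturally_relevant and j.meaning_preserved:
            successes[key] += 1
    # Every key in totals has at least one judgment, so no division by zero.
    return {key: successes[key] / totals[key] for key in totals}
```

Requiring both conditions reflects the two axes the evaluation measures: an edit that changes the image's meaning is not counted as a success even if the result looks culturally appropriate.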

Implications and Future Directions

This work highlights the complexity of culturally adapting visual content using AI models. The limited success rate underscores the current limitations of generative models in understanding and applying cultural nuances. Future research could explore more sophisticated models that can better grasp cultural contexts, possibly through enhanced training datasets or more advanced multimodal understanding. Additionally, exploring the balance between direct image editing and retrieval-based approaches may yield more effective strategies for image transcreation.

In conclusion, using AI to culturally transcreate images is promising, but the work is just beginning. The findings of this paper outline both the potential and the pitfalls of current methods, setting the stage for further exploration at the intersection of AI, culture, and visual content adaptation.