Human Detection Performance in Identifying Synthetic Media: An Insightful Investigation

Introduction

In recent years, the proliferation of generative AI technologies has greatly increased the realism of synthetic media, including images, video, and audio. This raises serious concerns about malicious uses such as disinformation campaigns, financial fraud, and privacy violations. Traditionally, the primary defense against such deception has been people's ability to recognize artificial content. The study by Di Cooke, Abigail Edwards, Sophia Barkoff, and Kathryn Kelly evaluates this ability by measuring human detection performance across four stimulus types: images, audio-only, video-only, and audiovisual.

Methodology

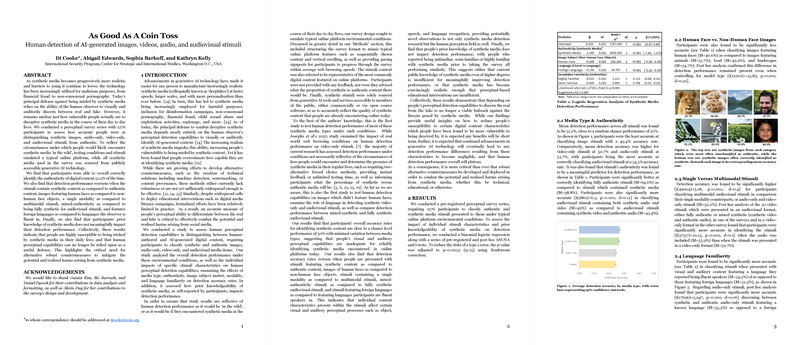

The study used a series of perceptual surveys with 1,276 participants to measure how well people distinguish authentic from synthetic media. Conditions were designed to resemble a typical online environment: the synthetic stimuli were generated with publicly accessible generative AI tools, so they reflect what individuals might plausibly encounter in everyday online interactions. This approach gives a more realistic assessment of human detection capabilities 'in the wild'. The research examined how media type, authenticity, subject matter, modality, and language familiarity influence detection accuracy.
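As a rough illustration of how responses from a survey like this can be broken down by condition, the Python sketch below tallies the proportion of correct judgments per group. The `Response` record, its fields, and the `accuracy_by` helper are hypothetical, and the four sample responses are invented purely for demonstration; they are not data from the study, and the authors' actual statistical analysis may differ substantially.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Iterable


@dataclass(frozen=True)
class Response:
    """One participant's judgment of one stimulus (hypothetical layout)."""
    media_type: str          # e.g. "image", "audio", "video", "audiovisual"
    is_synthetic: bool       # ground truth: was the stimulus AI-generated?
    judged_synthetic: bool   # the participant's answer

    @property
    def correct(self) -> bool:
        # A judgment is correct when it matches the ground truth.
        return self.is_synthetic == self.judged_synthetic


def accuracy_by(
    responses: Iterable[Response],
    key: Callable[[Response], Hashable],
) -> Dict[Hashable, float]:
    """Return the proportion of correct judgments for each group key(r)."""
    totals: Dict[Hashable, int] = defaultdict(int)
    hits: Dict[Hashable, int] = defaultdict(int)
    for r in responses:
        group = key(r)
        totals[group] += 1
        hits[group] += int(r.correct)
    return {group: hits[group] / totals[group] for group in totals}


# Invented example responses (not study data).
responses = [
    Response("image", is_synthetic=True, judged_synthetic=False),         # wrong
    Response("image", is_synthetic=False, judged_synthetic=False),        # right
    Response("audiovisual", is_synthetic=True, judged_synthetic=True),    # right
    Response("audiovisual", is_synthetic=False, judged_synthetic=False),  # right
]

print(accuracy_by(responses, key=lambda r: r.media_type))
# {'image': 0.5, 'audiovisual': 1.0}
print(accuracy_by(responses, key=lambda r: r.is_synthetic))
# {True: 0.5, False: 1.0}
```

Swapping the `key` function (for example, grouping by `(r.media_type, r.is_synthetic)`) yields the kind of cross-condition breakdown reported in the Findings section. A real analysis would also need to account for each participant contributing many responses.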

Findings

Key findings from the paper revealed:

- Overall Detection Performance: Participants' mean detection accuracy was 51.2%, barely above the 50% expected from random guessing.

- Influence of Synthetic Content and Human Faces: Accuracy was lower for synthetic stimuli than for authentic ones, and lower for images featuring human faces, underscoring how difficult it is to recognize AI-generated human likenesses.

- Comparison across Modalities: Audiovisual stimuli yielded higher detection accuracy than single-modality stimuli, suggesting that combining visual and auditory information helps people discriminate authentic from synthetic content.

- Impact of Language Familiarity: Detection accuracy was higher when stimuli featured a language in which participants were fluent, indicating that language familiarity plays a role in detecting synthetic media.

- Prior Knowledge of Synthetic Media: Participants' self-reported familiarity with synthetic media did not correlate with detection performance. This suggests either that public knowledge about synthetic media is generally inadequate, or that synthetic media have become sophisticated enough that the perceptual cues people learn to look for are no longer reliable.
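Whether a figure like 51.2% is distinguishable from chance depends on how many judgments it is based on. The Python sketch below is a generic way to check this, not the authors' analysis. It computes an exact two-sided binomial test against a 50% guessing rate, plus a normal-approximation (Wald) 95% confidence interval. The function names and the 512-of-1,000 counts in the usage lines are hypothetical, chosen only to match a 51.2% rate; they are not the study's trial counts.

```python
from math import exp, lgamma, log, sqrt


def binom_log_pmf(i: int, n: int, p: float) -> float:
    """log P(X = i) for X ~ Binomial(n, p), in log space to avoid overflow."""
    return (lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
            + i * log(p) + (n - i) * log(1 - p))


def binomial_test_two_sided(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided p-value: total probability under H0 (success rate p)
    of every outcome no more likely than observing k successes in n trials."""
    if not (0 < p < 1 and 0 <= k <= n):
        raise ValueError("need 0 < p < 1 and 0 <= k <= n")
    observed = binom_log_pmf(k, n, p)
    total = 0.0
    for i in range(n + 1):
        lp = binom_log_pmf(i, n, p)
        # Small tolerance so outcomes tied with the observed one are
        # included despite floating-point rounding.
        if lp <= observed + 1e-9:
            total += exp(lp)
    return min(1.0, total)


def wald_interval(k: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a proportion (normal approximation)."""
    acc = k / n
    half_width = z * sqrt(acc * (1 - acc) / n)
    return acc - half_width, acc + half_width


# Hypothetical counts: 512 correct out of 1,000 judgments (51.2%).
k, n = 512, 1000
print(f"accuracy = {k / n:.3f}")
print(f"two-sided p vs. 50% chance = {binomial_test_two_sided(k, n):.3f}")
print("95% CI = ({:.3f}, {:.3f})".format(*wald_interval(k, n)))
```

With these invented counts the interval straddles 0.5, so a 51.2% rate would not be distinguishable from guessing; with many more judgments the same rate could be. Treating every judgment as an independent trial also ignores that each participant contributes several responses, which a fuller analysis (for example, a mixed-effects model) would need to handle.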

Implications

This paper underscores the limits of human perception as a standalone defense against deception by synthetic media. Although participants performed near chance overall, the variation in accuracy across media types and conditions is informative. For instance, the differences by modality and language suggest avenues for education and training programs focused on identifying synthetic media. However, the consistently low accuracy across scenarios points to an urgent need for non-perceptual countermeasures. These could include machine learning-based detectors, blockchain-based content authentication, and comprehensive digital literacy initiatives that encourage critical analysis of online content.

Future Outlook

Given the rapid advancement of AI and machine learning, the already narrow gap between genuine and synthetic media is likely to shrink further. Human detection without the aid of technological tools may therefore become increasingly insufficient. Future research should deepen our understanding of how people perceive synthetic content, and should also accelerate the development of reliable, scalable, and user-friendly tools for identifying synthetic media. Educational interventions that adapt to the evolving landscape of digital content creation will also be critical in helping individuals navigate modern media safely and responsibly.

Taken together, the results paint a sobering picture of how vulnerable people currently are to deception by synthetic media. They call for a multi-faceted approach, combining technological, educational, and regulatory efforts, to protect individuals and societies from the potential harms of increasingly indistinguishable synthetic content.