Securing LLMs: Navigating the Evolving Threat Landscape

Security Risks and Vulnerabilities of LLMs

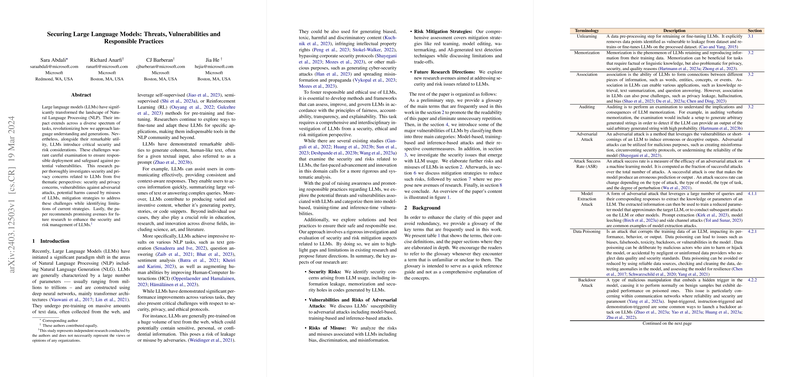

Large language models (LLMs) raise significant security and privacy concerns. Although transformative, these systems can be exploited in several ways. Pre-training relies on massive datasets that may contain sensitive information, which a model can memorize and later reproduce, creating a risk of inadvertent data leakage. The same ability to generate realistic, human-like text also makes it possible to produce biased, toxic, or even defamatory content, with attendant legal and reputational hazards. Other critical concerns include intellectual property infringement through unsanctioned reproduction of protected content and attempts to bypass a model's safety mechanisms. Finally, LLMs are susceptible to cyber-attacks aimed at corrupting their data or manipulating their behavior, which underscores the need for robust security measures.

Exploring Mitigation Strategies

Mitigating the risks associated with LLMs requires a multi-faceted approach:

- Model-based Vulnerabilities: These center on model extraction and imitation, in which an attacker reconstructs or clones a model's behavior by querying it. Countermeasures include watermarking, so that ownership can be demonstrated if a copy surfaces, and detection mechanisms that flag usage patterns indicative of unauthorized use.

- Training-Time Vulnerabilities: These arise mainly from data poisoning, in which an attacker plants manipulated examples that implant a backdoor: a hidden trigger that causes attacker-chosen behavior. Mitigation involves detecting and removing poisoned examples before training, and red teaming during development to surface weaknesses before deployment.

- Inference-Time Vulnerabilities: Countering these requires prompt injection detection and defenses against paraphrasing attacks, which reword inputs or outputs to slip past filters and detectors. Monitoring prompts and adapting responses to suspicious activity can further deter exploitation attempts.
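As one illustration of detecting unauthorized use, the sketch below flags API clients whose query volume within a sliding time window exceeds a budget, since extraction attacks typically need a large number of queries. This is a swapped-in usage heuristic, not a watermarking scheme; the class name `QueryRateMonitor` and the budget and window parameters are hypothetical and would need tuning against real traffic.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class QueryRateMonitor:
    """Hypothetical monitor: flags clients that exceed a query budget.

    High-volume, systematic querying is one common signature of model
    extraction; the budget and window are illustrative, not tuned values.
    """

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        # Per-client timestamps of recent queries, oldest first.
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, client_id: str, now: Optional[float] = None) -> bool:
        """Record one query; return True if the client is now over budget."""
        now = time.monotonic() if now is None else now
        history = self._history[client_id]
        history.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while history and now - history[0] > self.window_seconds:
            history.popleft()
        return len(history) > self.max_queries
```

A flagged client might be throttled or reviewed rather than blocked outright. A patient attacker can stay under any fixed budget, so a rate signal like this is usually combined with other indicators.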
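A crude first pass at spotting poisoned data can exploit the fact that a backdoor trigger is usually a token that co-occurs almost exclusively with the attacker's target label. The sketch below assumes a labeled text-classification dataset of `(text, label)` pairs and whitespace tokenization; it flags tokens that are both frequent and label-pure, then drops examples containing them. The function names and the `min_count` and `purity` thresholds are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Example = Tuple[str, str]  # (text, label)


def find_suspect_tokens(
    data: List[Example], min_count: int = 5, purity: float = 0.95
) -> Set[str]:
    """Return tokens that occur often yet almost always with a single label.

    A planted trigger tends to look like this: an otherwise meaningless
    token that co-occurs with the attacker's target label.
    """
    token_labels: Dict[str, Counter] = defaultdict(Counter)
    for text, label in data:
        # Count each token once per example so repetition does not inflate it.
        for token in set(text.lower().split()):
            token_labels[token][label] += 1
    suspects = set()
    for token, counts in token_labels.items():
        total = sum(counts.values())
        top_share = counts.most_common(1)[0][1] / total
        if total >= min_count and top_share >= purity:
            suspects.add(token)
    return suspects


def sanitize(data: List[Example], suspects: Set[str]) -> List[Example]:
    """Drop examples containing any suspect token (pending human review)."""
    return [
        (text, label)
        for text, label in data
        if suspects.isdisjoint(text.lower().split())
    ]
```

Genuinely predictive words (for example, "excellent" in a sentiment dataset) will also be flagged, so the suspect list is best treated as input to human review rather than applied blindly.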
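The simplest form of prompt injection detection is a pattern screen applied to untrusted input before it reaches the model. The sketch below is a minimal, hypothetical example: the patterns, the names `injection_score` and `screen_prompt`, and the threshold are illustrative assumptions rather than a vetted rule set.

```python
import re
from typing import List

# Hypothetical, illustrative phrasings only; real injections are far more
# varied, so a list like this should be one signal among several.
INJECTION_PATTERNS: List[re.Pattern] = [
    re.compile(r"ignore (all |any )?(previous|prior|above) (instructions|prompts?)", re.I),
    re.compile(r"disregard (the |your )?(system|previous) (prompt|instructions)", re.I),
    re.compile(r"you are now (in )?(developer|dan|jailbreak) mode", re.I),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.I),
]


def injection_score(text: str) -> int:
    """Count how many known injection patterns appear in the text."""
    return sum(1 for pattern in INJECTION_PATTERNS if pattern.search(text))


def screen_prompt(text: str, threshold: int = 1) -> bool:
    """Return True if the input should be held for review instead of sent on."""
    return injection_score(text) >= threshold
```

A paraphrased attack such as "forget everything you were told earlier" passes these regular expressions untouched, which is the paraphrasing weakness described above; such screens are typically layered with trained classifiers and output-side monitoring.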

Future Directions in AI Security

Because LLMs are complex and evolve rapidly, ongoing research is needed into more advanced security protocols and ethical guidelines. Several avenues merit further exploration:

- Enhanced Red and Green Teaming: Implementing comprehensive red and green teaming exercises can reveal hidden vulnerabilities and assess the ethical implications of LLM outputs, thereby informing more secure deployment strategies.

- Improved Detection Techniques: More reliable detectors of AI-generated text will be crucial for distinguishing human-written from machine-generated content and thereby curbing the spread of misinformation.

- Robust Editing Mechanisms: Research on model editing, which updates a trained LLM's behavior or stored knowledge without full retraining, can help correct biases, reduce hallucination, and improve factuality, limiting the generation of harmful or misleading content.

- Interdisciplinary Collaboration: Fostering collaborative efforts across cybersecurity, AI ethics, and legal disciplines can provide a holistic approach to understanding and mitigating the risks posed by LLMs.
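Watermarking is one approach to detecting machine-generated text that can be verified statistically. The sketch below is a simplified detector in the spirit of the "green list" watermark of Kirchenbauer et al. (2023): during generation, a watermarking model favors tokens from a pseudo-random "green" subset of the vocabulary chosen from the previous token, so watermarked text contains more green tokens than the expected fraction `gamma`. The detector counts green tokens and computes a one-proportion z-score. Deriving green-list membership by hashing a secret key with each token pair is an assumption made for brevity, and the function names, key, and `gamma` default are hypothetical. This only detects text from a model that applied the matching watermark.

```python
import hashlib
import math
from typing import Sequence


def is_green(prev_token: int, token: int, gamma: float, key: bytes) -> bool:
    """Hypothetical membership test: is `token` green given `prev_token`?

    Hashing (key, prev_token, token) to a number in [0, 1) and comparing it
    with gamma puts roughly a gamma fraction of the vocabulary in the green
    list for each context, without materializing the list.
    """
    digest = hashlib.sha256(key + f"{prev_token}:{token}".encode()).digest()
    u = int.from_bytes(digest[:8], "big") / 2**64
    return u < gamma


def watermark_z_score(
    tokens: Sequence[int], gamma: float = 0.25, key: bytes = b"secret"
) -> float:
    """z = (green - gamma*T) / sqrt(T*gamma*(1-gamma)) over T scored tokens."""
    t = len(tokens) - 1  # number of scored (previous, current) pairs
    if t < 1:
        raise ValueError("need at least two tokens")
    green = sum(
        is_green(tokens[i - 1], tokens[i], gamma, key) for i in range(1, len(tokens))
    )
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))
```

Large positive z-scores indicate watermarked text (the original paper uses a threshold around 4), while ordinary text scores near zero. Paraphrasing replaces tokens and dilutes the green-token excess, which is why paraphrasing attacks are a concern for this kind of detector.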

Conclusion

The security landscape of LLMs is fraught with challenges yet offers ample opportunities for substantive breakthroughs in AI safety and integrity. As we continue to interweave AI more deeply into the fabric of digital societies, prioritizing the development of comprehensive, ethical, and robust security measures is imperative. By fostering a culture of proactive risk management and ethical AI use, we can navigate the complexities of LLMs, paving the way for their responsible and secure application across various domains.