The paper introduces Meta-Prompting for Visual Recognition (MPVR), a novel automated framework for improving zero-shot image recognition with large language models (LLMs) and vision-language models (VLMs). The core idea is to generate category-specific VLM prompts automatically by meta-prompting an LLM, thus minimizing human intervention.

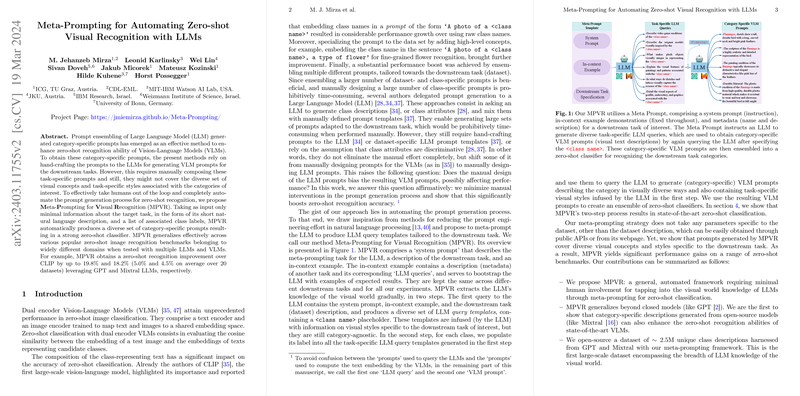

The approach is a two-step process. First, the LLM receives a meta-prompt consisting of a system prompt, an in-context example, and a short natural-language description of the target task along with its class labels; the meta-prompt instructs the LLM to generate diverse task-specific LLM queries. Second, each generated query is completed with a class name and sent back to the LLM to obtain category-specific VLM prompts. These prompts are then ensembled into a zero-shot classifier.
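To make the structure of the meta-prompt concrete, here is a minimal Python sketch of how its three parts could be assembled into one string. The wording of `SYSTEM_PROMPT` and `IN_CONTEXT_EXAMPLE`, the helper name `build_meta_prompt`, and the `Task:`/`Classes:`/`Queries:` layout are hypothetical illustrations, not the paper's actual prompt text. The point it shows is that only the task-specific part changes from dataset to dataset.

```python
from typing import List

# Hypothetical fixed components, shared across all downstream tasks.
SYSTEM_PROMPT = (
    "You write LLM queries that elicit visual descriptions of object classes "
    "for a given image-recognition task. Each query must contain the "
    "placeholder <class name>."
)
IN_CONTEXT_EXAMPLE = (
    "Task: photos of pets (cats and dogs).\n"
    "Queries:\n"
    "- Describe the fur, ears and face of a <class name> in a pet photo.\n"
    "- What does a <class name> look like when photographed indoors?"
)


def build_meta_prompt(task_description: str, class_labels: List[str]) -> str:
    """Assemble system prompt + in-context example + target-task specification."""
    task_part = (
        f"Task: {task_description}\n"
        f"Classes: {', '.join(class_labels)}\n"
        "Queries:"
    )
    # Only task_part depends on the dataset; the other two parts stay fixed.
    return "\n\n".join([SYSTEM_PROMPT, IN_CONTEXT_EXAMPLE, task_part])


meta_prompt = build_meta_prompt(
    "satellite images of land use", ["forest", "river", "highway"]
)
```

The resulting string ends with an open `Queries:` slot, inviting the LLM to continue the pattern set by the in-context example with queries for the new task.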

The method leverages the knowledge of the visual world embedded in LLMs to produce a diverse set of prompts tailored to a specific downstream task. The system prompt describes the meta-prompting task, while the in-context example contains the description of a different task together with its corresponding LLM queries; this in-context example stays the same across all downstream tasks. Given the meta-prompt, the LLM produces LLM query templates containing a `<class name>` placeholder. These templates capture visual styles specific to the task but remain category-agnostic. Subsequently, for each class, the class label is inserted into the task-specific query templates, and the LLM generates category-specific VLM prompts that describe the category in diverse visual ways and reflect the task-specific visual styles.
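The second stage, filling the category-agnostic templates and querying the LLM once per class, can be sketched as follows. This is a hedged illustration: `fake_llm` is a hypothetical stand-in for a real LLM call, and the template strings are invented; only the `<class name>` placeholder comes from the description above.

```python
from typing import Callable, Dict, List

PLACEHOLDER = "<class name>"


def instantiate(template: str, class_name: str) -> str:
    """Insert a class label into a category-agnostic LLM query template."""
    if PLACEHOLDER not in template:
        raise ValueError(f"template lacks {PLACEHOLDER!r}: {template}")
    return template.replace(PLACEHOLDER, class_name)


def category_prompts(
    llm: Callable[[str], List[str]],
    query_templates: List[str],
    class_names: List[str],
) -> Dict[str, List[str]]:
    """For each class, fill every template and collect the LLM's VLM prompts."""
    out: Dict[str, List[str]] = {}
    for name in class_names:
        prompts: List[str] = []
        for template in query_templates:
            prompts.extend(llm(instantiate(template, name)))
        out[name] = prompts
    return out


def fake_llm(query: str) -> List[str]:
    # Hypothetical LLM stand-in: returns one "description" per query.
    return [f"A photo showing: {query}"]


templates = [
    "Describe the texture of a <class name> in an aerial image.",
    "How does a <class name> appear from far above?",
]
result = category_prompts(fake_llm, templates, ["forest", "river"])
```

Every class receives one LLM call per template, so the number of prompts per class grows with the number of task-specific templates the first stage produced.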

The authors emphasize that their meta-prompting strategy doesn't require dataset-specific parameters, except for the dataset description, which can be easily obtained from public APIs or the dataset's webpage. The generated prompts are shown to cover diverse visual concepts and styles, leading to significant performance gains across various zero-shot benchmarks.

The contributions of the paper are threefold:

- It introduces MPVR, a general automated framework for zero-shot classification that minimizes human involvement by using meta-prompting to tap into the visual world knowledge of LLMs.
- It demonstrates that MPVR generalizes beyond closed models like GPT, showing that open-source models like Mixtral can also enhance the zero-shot recognition abilities of VLMs.
- It releases a dataset of approximately 2.5 million unique class descriptions generated from GPT and Mixtral using the meta-prompting framework, representing a large-scale dataset encompassing the breadth of LLM knowledge of the visual world.

The paper evaluates MPVR on 20 object recognition datasets, including ImageNet, ImageNet-V2, CIFAR-10/100, and Caltech-101, and compares it against several baselines, including CLIP, CUPL, DCLIP, and Waffle. MPVR consistently outperforms the CLIP zero-shot baseline, with improvements of up to 19.8% with GPT and 18.2% with Mixtral on individual datasets, and average improvements of 5.0% and 4.5%, respectively, across the 20 datasets.

Ablation studies are conducted to assess the significance of different components of MPVR. The results show that all major components of the meta-prompt, including the system prompt, in-context example, and downstream task specification, have a strong effect on the downstream performance.

The paper also explores ensembling different text sources, such as GPT-generated VLM prompts, Mixtral-generated VLM prompts, and CLIP's dataset-specific templates. Ensembling in the embedding space over both GPT and Mixtral prompts performs best. Additionally, the paper compares dual-encoder models such as CLIP with multimodal LLMs (MLLMs) for image classification and finds that CLIP outperforms LLaVA on object recognition tasks, which justifies using CLIP as the discriminative model in the paper.
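One plausible reading of embedding-space ensembling is to pool the prompt embeddings from every source into a single set per class and average them. The sketch below assumes that reading; the source dictionaries, the helper names `mean` and `ensemble_sources`, and the toy 2-d vectors are hypothetical, and the paper may weight or normalize sources differently.

```python
from typing import Dict, List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def ensemble_sources(sources: List[Dict[str, List[Vector]]]) -> Dict[str, Vector]:
    """Pool each class's prompt embeddings across all sources, then average."""
    classes = sources[0].keys()
    return {c: mean([v for src in sources for v in src[c]]) for c in classes}


# Hypothetical per-class prompt embeddings from two LLMs.
gpt = {"cat": [[1.0, 0.0], [0.8, 0.2]]}
mixtral = {"cat": [[0.6, 0.4]]}
ensembled = ensemble_sources([gpt, mixtral])
```

Because pooling averages over individual prompts, a source that contributes more prompts for a class carries more weight in the mean; averaging the per-source means first would weight the sources equally instead. CLIP's dataset-specific templates could be added as a further source in the same way.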

Finally, a scaling analysis demonstrates that increasing the number of generated VLM prompts significantly boosts performance, indicating promising scaling potential for MPVR.

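In the experimental evaluation, the zero-shot likelihood of class $c$ given image $x$ is defined as:

$$p(c \mid x) = \frac{\exp\left(\cos(\mathbf{e}_x, \mathbf{t}_c)/\tau\right)}{\sum_{c' \in \mathcal{C}} \exp\left(\cos(\mathbf{e}_x, \mathbf{t}_{c'})/\tau\right)}$$

- $p(c \mid x)$: zero-shot likelihood of class $c$ given image $x$
- $\mathbf{e}_x$: image embedding of image $x$
- $\mathcal{C}$: set of candidate classes
- $\mathbf{t}_c$: text embedding for class $c$
- $\cos(\cdot, \cdot)$: cosine similarity
- $\tau$: temperature constant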
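The likelihood above is a temperature-scaled softmax over cosine similarities between the image embedding and each class's text embedding. A minimal pure-Python sketch follows; the 2-d embeddings are toy values, and the default `tau=0.01` is an assumption chosen to mirror the logit scale of 100 commonly used with CLIP.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_probs(
    image_emb: Vector, class_embs: Dict[str, Vector], tau: float = 0.01
) -> Dict[str, float]:
    """p(c | x): softmax over classes of cos(e_x, t_c) / tau."""
    logits = {c: cosine(image_emb, t) / tau for c, t in class_embs.items()}
    shift = max(logits.values())  # subtract the max logit for numerical stability
    exps = {c: math.exp(l - shift) for c, l in logits.items()}
    total = sum(exps.values())
    return {c: v / total for c, v in exps.items()}


# Toy example: the image embedding points almost exactly at the "cat" text embedding.
probs = zero_shot_probs([1.0, 0.0], {"cat": [0.9, 0.1], "dog": [0.1, 0.9]})
prediction = max(probs, key=probs.get)
```

A small $\tau$ sharpens the distribution, so even a moderate gap in cosine similarity produces a confident prediction.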

The text embedding for class $c$ is computed as the mean embedding of all prompts for that class:

$$\mathbf{t}_c = \frac{1}{|\mathcal{P}|} \sum_{p \in \mathcal{P}} \mathbf{e}_{p(c)}$$

- $\mathbf{t}_c$: text embedding for class $c$
- $|\mathcal{P}|$: the number of prompt templates in the set $\mathcal{P}$
- $p$: a prompt template in the set of prompt templates $\mathcal{P}$
- $p(c)$: the prompt obtained by completing template $p$ with the label of class $c$
- $\mathbf{e}_{p(c)}$: embedding of the prompt $p(c)$
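Computing $\mathbf{t}_c$ amounts to an element-wise average of the prompt embeddings. In this sketch, `toy_embed` is a hypothetical keyword-count stand-in for a real text encoder such as CLIP's; any function mapping a string to a fixed-length vector would fit.

```python
from typing import Callable, List

Vector = List[float]


def class_embedding(prompts: List[str], embed: Callable[[str], Vector]) -> Vector:
    """Return t_c = (1/|P|) * sum over p in P of e_{p(c)} for one class's prompts."""
    if not prompts:
        raise ValueError("need at least one prompt")
    vectors = [embed(p) for p in prompts]
    # Element-wise mean over all prompt embeddings.
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def toy_embed(text: str) -> Vector:
    # Hypothetical text encoder: counts of two visual keywords.
    return [float(text.count("stripes")), float(text.count("mane"))]


zebra_prompts = [
    "a photo of a zebra with black and white stripes",
    "a zebra with a short upright mane and stripes",
]
t_zebra = class_embedding(zebra_prompts, toy_embed)
print(t_zebra)  # [1.0, 0.5]
```

CLIP's public prompt-ensembling example additionally L2-normalizes each prompt embedding before averaging (and the mean afterwards); that step is left out here to match the formula as written.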