Simple and Scalable Strategies to Continually Pre-train LLMs

Introduction

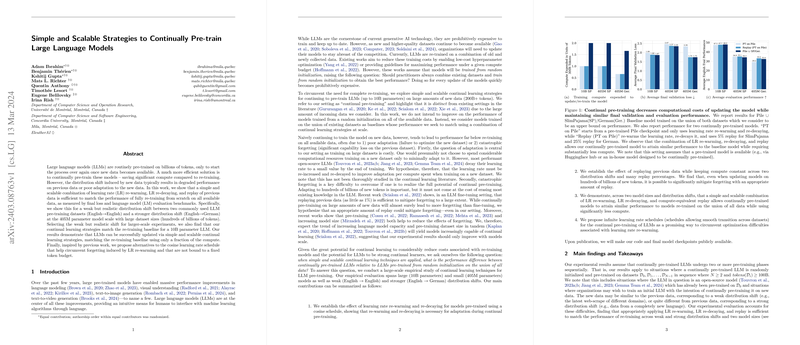

Pre-training LLMs is computationally expensive, and the cost recurs whenever new data become available and the model needs an update. Traditionally, an update means restarting training from scratch on the combined old and new datasets, a practice that is both inefficient and unsustainable. Because data change over time and data quality keeps improving, more efficient update strategies are needed. Our work develops and empirically evaluates simple and scalable continual learning techniques for LLMs that integrate new data into existing models efficiently.

The Need for Efficient Update Strategies

Updating LLMs with new data introduces two main challenges: adaptation and forgetting. Adaptation means learning effectively from the new data; forgetting is the loss of previously learned information, which an update should avoid. Our hypothesis is that both challenges can be addressed by adjusting the learning rate and by using replay.

Learning Rate Adjustments

Open-source LLMs commonly use a cosine decay schedule for the learning rate. Our analysis showed that simply continuing training from the final checkpoint, where the learning rate has already decayed to its minimum value, leads to suboptimal adaptation to new data. We therefore propose "re-warming" the learning rate back to a higher value and then "re-decaying" it along a cosine curve fitted to the token budget of the new data. This improves adaptation.
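The re-warming and re-decaying idea described above can be sketched as a small schedule function. This is a minimal illustration, not the authors' implementation: the function name `rewarm_redecay_lr`, the linear shape of the re-warming phase, and all numeric values are assumptions chosen for the example.

```python
import math


def rewarm_redecay_lr(step, total_steps, warmup_steps, max_lr, min_lr):
    """Learning rate at `step` of one continual pre-training phase.

    Assumptions (not from the text): re-warming is linear, and the
    previous run ended at `min_lr`. `total_steps` should correspond
    to the token budget of the new dataset.
    """
    if step < warmup_steps:
        # Re-warm: climb linearly from min_lr back up to max_lr.
        return min_lr + (max_lr - min_lr) * step / warmup_steps
    # Re-decay: cosine curve from max_lr down to min_lr over the rest.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold min_lr if training runs long
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Illustrative values only: 1,000 steps on the new data, 100 re-warming steps.
schedule = [rewarm_redecay_lr(s, 1000, 100, 3e-4, 3e-5) for s in range(1001)]
```

The schedule starts at the minimum learning rate, peaks at the end of re-warming, and returns to the minimum once the new data's budget is spent, so the same function can be reused for each new dataset.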

The Role of Replay

Better adaptation to new data comes with a risk of forgetting previously learned information. To counteract this, we experimented with replay: filling a fixed percentage of each training batch for the new data with samples from the old data. Our results show that a well-chosen replay percentage mitigates forgetting without significantly hampering adaptation to new data.
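As a rough sketch of how replay can be wired into a data pipeline, the generator below builds batches in which a fixed fraction of samples comes from the old data. The name `mix_with_replay`, its parameters, and the 5% fraction in the usage lines are hypothetical choices for illustration; the text above does not prescribe a specific value or implementation.

```python
import random


def mix_with_replay(new_stream, old_stream, batch_size, replay_fraction, seed=0):
    """Yield batches where about `replay_fraction` of samples are old data.

    Stops as soon as either stream cannot fill its share of a batch.
    """
    n_old = round(batch_size * replay_fraction)  # replayed samples per batch
    n_new = batch_size - n_old                   # new-data samples per batch
    rng = random.Random(seed)
    new_it, old_it = iter(new_stream), iter(old_stream)
    while True:
        batch = []
        try:
            for _ in range(n_new):
                batch.append(next(new_it))
            for _ in range(n_old):
                batch.append(next(old_it))
        except StopIteration:
            return  # drop the incomplete final batch
        rng.shuffle(batch)  # avoid a fixed new/old ordering inside the batch
        yield batch


# Toy data: tagged tuples stand in for tokenized training sequences.
new_data = [("new", i) for i in range(40)]
old_data = [("old", i) for i in range(40)]
batches = list(mix_with_replay(new_data, old_data, batch_size=20, replay_fraction=0.05))
```

Keeping the replay share fixed per batch, rather than sampling it randomly, makes the ratio of old to new tokens exact and easy to account for in the compute budget.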

Empirical Evaluation and Results

Our experiments pre-trained models with 405M and 10B parameters on datasets of varying domain similarity and size (up to 300B tokens). The findings show that combining learning rate re-warming, re-decaying, and replay achieves performance on par with models trained from scratch on the combined old and new datasets, while substantially reducing computational cost.

Learning Rate Schedules for Continual Pre-training

Further analysis showed that re-warming the learning rate can induce unnecessary forgetting when transitioning from one dataset to another. We explored "infinite learning rate schedules" as an alternative that avoids re-warming by keeping the learning rate constant across dataset transitions. In our initial experiments these schedules were competitive with traditional cosine decay schedules, without the forgetting that re-warming induces at transitions.
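One possible shape for such a schedule is sketched below. The text above only states that the learning rate stays constant across dataset transitions; the linear warmup, the cosine cooldown to the constant value, the optional linear anneal at the very end, and every name and number in the code are assumptions made for this example.

```python
import math


def infinite_lr(step, warmup_steps, cooldown_steps, max_lr, const_lr,
                anneal_start=None, anneal_steps=0, min_lr=0.0):
    """Sketch of an 'infinite' schedule: warm up once, cool down to a
    constant learning rate, then hold it for as long as training continues.
    """
    if step < warmup_steps:
        # Initial linear warmup from 0 to max_lr (assumed shape).
        return max_lr * step / warmup_steps
    if step < warmup_steps + cooldown_steps:
        # Cosine cooldown from max_lr to const_lr (assumed shape).
        p = (step - warmup_steps) / cooldown_steps
        return const_lr + 0.5 * (max_lr - const_lr) * (1.0 + math.cos(math.pi * p))
    if anneal_start is not None and step >= anneal_start:
        # Optional final linear anneal toward min_lr before releasing a checkpoint.
        p = min((step - anneal_start) / max(1, anneal_steps), 1.0)
        return const_lr + (min_lr - const_lr) * p
    # Constant phase: new datasets can be introduced here without re-warming.
    return const_lr


# The rate is identical before and after a hypothetical dataset switch at step 50,000.
before = infinite_lr(49_999, warmup_steps=1_000, cooldown_steps=9_000, max_lr=3e-4, const_lr=1e-4)
after = infinite_lr(50_000, warmup_steps=1_000, cooldown_steps=9_000, max_lr=3e-4, const_lr=1e-4)
```

Because the constant phase has no fixed length, the schedule does not need to know the total token budget in advance, which is what removes the need to re-warm at each transition.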

Conclusion

Our work shows that continually pre-training LLMs with simple and scalable strategies is practical. By re-warming and re-decaying the learning rate and incorporating replay, we can update LLMs with new data efficiently while matching the performance of models re-trained from scratch. Infinite learning rate schedules are a promising direction for seamless model updates across multiple datasets. This research contributes to the efficiency of LLM pre-training and opens avenues for keeping models up to date in a more sustainable manner.