Exploring Cultural and Linguistic Intelligence in Korean with CLIcK: A Comprehensive Benchmark Dataset

Introduction to CLIcK

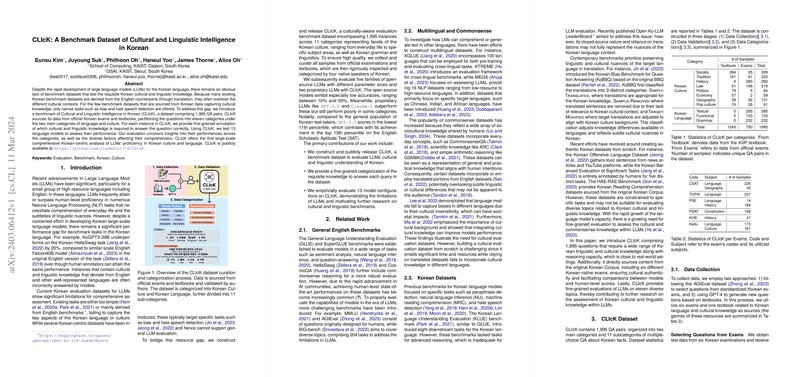

The progression of LLMs, especially in languages other than English, has been a focal point in computational linguistics. However, the development of such models in the Korean language faces a significant roadblock: the dearth of comprehensive benchmark datasets that immerse these models in the cultural and linguistic intricacies of Korean. The Cultural and Linguistic Intelligence in Korean (CLIcK) benchmark dataset aims to bridge this gap, presenting a pioneering collection of 1,995 QA pairs meticulously drawn from official Korean exams and textbooks across eleven diverse categories.

Motivation for CLIcK

Korean language evaluation tasks have so far either been overly simplistic or derivative of English benchmarks, inadequately representing Korean cultural and linguistic uniqueness. Despite some datasets that touch upon Korean cultural aspects, the narrow focus of these resources on tasks such as bias and hate speech detection precludes a holistic assessment of LLMs' (LLMs') cultural and linguistic understanding. CLIcK fills this void by offering a culturally rich and linguistically diverse set of tasks directly sourced from native Korean educational materials.

Dataset Construction and Composition

CLIcK's construction involved selecting questions from standardized Korean exams and using GPT-4 to generate new questions from Korean textbooks. To ensure the questions' relevance and accuracy, a multi-stage validation process with native Korean speakers was employed. Categories under which the data falls include not just traditional linguistic aspects like grammar but also wide-ranging cultural elements from politics to pop culture, offering an expansive view of Korean society and language. The result is a dataset partitioned into two main categories: Cultural Intelligence and Linguistic Intelligence, with the former spanning eight subcategories and the latter three.

Evaluation with LLMs

A comprehensive evaluation of thirteen LLMs spanning various sizes and configurations on CLIcK yielded intriguing insights. Despite some open-source LLMs showing competencies across certain categories, overall performance was modest, with proprietary models such as GPT-3.5 and Claude-2 demonstrating more robust, albeit still imperfect, capabilities. These results underscore the persistent challenge in imbuing LLMs with deep cultural and linguistic understanding, particularly for a language as rich and complex as Korean.

Implications and Future Directions

CLIcK not only spotlights the current limitations of LLMs in grasping the nuances of Korean culture and language but also sets a precedent for constructing similar benchmarks for other underrepresented languages. This initiative beckons the need for tailored model training that places a stronger emphasis on capturing the idiosyncratic elements of individual languages and cultures. As the field moves forward, it is vital to remember that mastery over a language extends beyond mere syntactical proficiency, embracing the cultural lore and linguistic subtleties that give a language its life.

Conclusion

The introduction of CLIcK opens new avenues in the evaluation of Korean LLMs, pushing the envelope on understanding and generating Korean text in a manner that is culturally and linguistically authentic. As researchers and technologists engage with this novel dataset, the hope is for a gradual uplift in the performance of these models, making strides toward genuinely comprehensive linguistic intelligence. By honing in on the cultural and linguistic elements that define Korean, CLIcK paves the way for more nuanced and sophisticated AI models capable of navigating the complexities of language in its full vivacity.