Unpacking Tokenization: A Close Look at Text Compression and Model Performance

Introduction

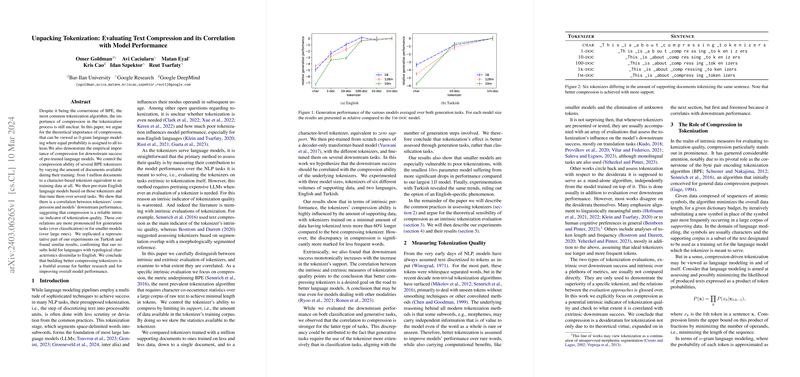

The paper "Unpacking Tokenization: Evaluating Text Compression and its Correlation with Model Performance" examines how well a tokenizer compresses text and how that compression correlates with the downstream success of pre-trained LLMs. The authors argue that text compression can be viewed as a form of $0$-gram language modeling in which all tokens are assigned equal probability. They manipulate the compression ability of Byte Pair Encoding (BPE) tokenizers by varying the amount of training data, from a character-level tokenizer (equivalent to zero training data) up to tokenizers trained on 1 million documents. They then measure both the intrinsic quality of each tokenizer and its extrinsic impact on model performance across several tasks and two languages, English and Turkish.
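
The $0$-gram framing can be made concrete with a short calculation. As a sketch, assume a vocabulary $V$ and a text $x$ that the tokenizer splits into $N(x)$ tokens; these symbols are notation introduced here for illustration, not taken from the paper. If every token receives the same probability $1/|V|$, the log-likelihood of the text is

$$
\log P(x) = \sum_{i=1}^{N(x)} \log \frac{1}{|V|} = -N(x)\,\log |V|
$$

For a fixed vocabulary size, the only quantity the tokenizer can change is $N(x)$, so under this model a tokenizer that encodes the same text in fewer tokens assigns it a higher likelihood.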

Methodology

The authors compared tokenizers by controlling the "support," i.e., the amount of training data available to them. This approach allowed for an exploration of how tokenizer compression abilities impact LLM performance across different tasks. The English language was the primary focus, with models pre-trained on the C4 corpus and fine-tuned on a combination of classification and generation tasks. For intrinsic evaluation, tokenizers' ability to compress text was measured, while extrinsic evaluation focused on performance across selected NLP tasks. Additionally, Turkish was selected for a subset of experiments to confirm whether findings hold across languages with different typological characteristics.
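
The idea of controlling support can be illustrated with a toy experiment. The sketch below is a minimal word-level BPE trainer written for this summary, not the authors' implementation; the function names (`train_bpe`, `apply_merge`, `encode`, `token_count`), the three-sentence corpus, and the merge budget of 20 are all assumptions chosen for illustration. Training on zero documents yields no merges, which reduces the tokenizer to a character-level one.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(docs, num_merges):
    """Learn up to `num_merges` merges from `docs` (a list of strings)."""
    # Each whitespace-separated word starts as a tuple of single characters.
    word_freqs = Counter()
    for doc in docs:
        for word in doc.split():
            word_freqs[tuple(word)] += 1

    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in word_freqs.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # nothing left to merge (e.g. no training data)
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        merged_freqs = Counter()
        for symbols, freq in word_freqs.items():
            merged_freqs[apply_merge(symbols, best)] += freq
        word_freqs = merged_freqs
    return merges


def encode(text, merges):
    """Tokenize `text` by applying the learned merges in training order."""
    tokens = []
    for word in text.split():
        symbols = tuple(word)
        for pair in merges:
            symbols = apply_merge(symbols, pair)
        tokens.extend(symbols)
    return tokens


def token_count(text, merges):
    """Intrinsic measure used here: number of tokens needed to encode `text`."""
    return len(encode(text, merges))


# Hypothetical toy corpus; "support" is the number of documents the tokenizer sees.
corpus = ["the cat sat on the mat", "the dog sat on the log", "a cat and a dog"]
held_out = "the cat and the dog sat"

for support in (0, 1, 3):
    merges = train_bpe(corpus[:support], num_merges=20)
    print(f"support={support} docs -> {token_count(held_out, merges)} tokens")
```

Counting tokens on a held-out text is only one possible intrinsic measure, and a corpus this small says nothing about real-scale behavior; the point is only to show how varying the training data changes the encoded length while everything else stays fixed.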

Findings

Compression Ability: A tokenizer's compression ability improved with the amount of supporting data it was trained on. Tokenizers trained on minimal data encoded the same text into significantly longer token sequences than tokenizers trained on adequate data.

Extrinsic Performance: The experiments demonstrated a monotonic relationship between the amount of supporting data a tokenizer was trained on and the downstream task performance of models that use it. This correlation was stronger for generation tasks and more pronounced in smaller models.
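
One common way to quantify a monotonic relationship is Spearman's rank correlation, which compares the ordering of two variables rather than their raw values. The sketch below is a plain-Python illustration; this summary does not state which statistic the authors computed, and the input lists are synthetic values chosen only to show the behavior, not results from the paper.

```python
def ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


# Synthetic inputs: y grows with x, but not linearly.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
rho_increasing = spearman(x, y)
rho_decreasing = spearman(x, list(reversed(y)))
print(rho_increasing, rho_decreasing)
```

Because only the ordering matters, a rank correlation reaches its extreme values for any strictly increasing or strictly decreasing relationship, which makes it a natural fit for a claim stated in terms of monotonicity.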

Language Generalization: The patterns observed in English held true when tested on Turkish, suggesting that the importance of text compression in tokenization is not language-specific.

Analysis

The paper quantitatively demonstrates that tokenization, and specifically its compression capability, matters for the performance of LLMs. The results suggest that tokenization is especially important for generation tasks and for smaller models. This stands to reason: generation tasks depend on the tokenizer for the model's output as well as its input, and smaller models have less capacity to compensate for poor tokenization.

Taken together, the intrinsic and extrinsic evaluations point to a clear direction for future research and development: building better-compressing tokenizers could improve overall model performance. The amount of supporting data also directly affects how efficiently a tokenizer compresses, which points to the potential benefit of training tokenizers on larger datasets.

Conclusion

This paper contributes a novel perspective on the crucial role of tokenization in the development of LLMs by showcasing the intrinsic value of compression as an indicator of tokenizer quality and its correlation with downstream task performance. The findings across English and Turkish emphasize the importance of compression in tokenization and suggest beneficial directions for future tokenizer development. As larger and more complex models continue to evolve, understanding the foundational elements, such as tokenization, becomes imperative for improving efficiency and effectiveness in natural language processing tasks.

Future Work

While this paper provides significant insights, it also opens avenues for future research, including expanding the experiments to other languages and exploring other intrinsic measures of tokenization quality. Additionally, investigating the impact of tokenization on larger models could further refine our understanding of its role in the performance of LLMs.