Exploring MAGID: A Synthetic Multi-Modal Dataset Generator

The paper "MAGID: An Automated Pipeline for Generating Synthetic Multi-modal Datasets" introduces a framework for building the data that multimodal interactive systems need. MAGID takes text-only dialogues and augments them with diverse, high-quality images. The result is synthetic datasets that resemble real-world multimodal conversations while avoiding the privacy and quality limitations of datasets assembled from real-world images.

Core Contributions and Methodology

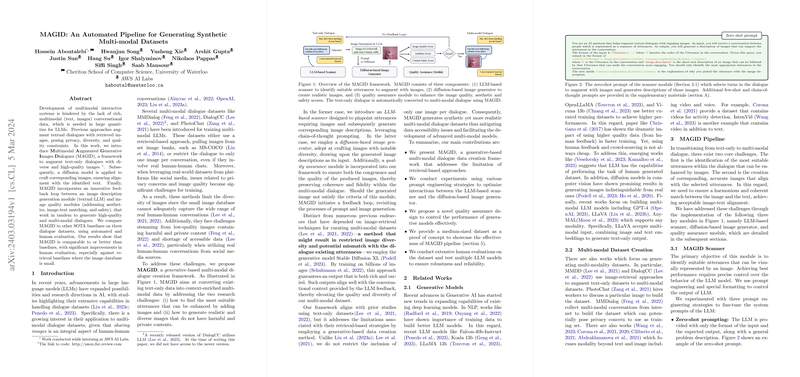

MAGID's key design choice is to generate images rather than retrieve them. Retrieval-based methods are constrained by the size of their image databases and can raise privacy concerns when they draw on real-world datasets. MAGID avoids these issues with a pipeline of three components:

- LLM-Based Scanner: This module uses prompt engineering to guide an LLM in identifying the dialogue utterances whose context would benefit from an accompanying image.

- Diffusion-Based Image Generator: For each selected utterance, a diffusion model synthesizes a diverse, realistic image that matches the textual context. MAGID uses Stable Diffusion XL for this step because of its state-of-the-art ability to generate varied, contextually relevant images.

- Quality Assurance Module: A feedback loop checks each generated image for image-text matching (measured by CLIP score), aesthetic quality, and safety. Images that fail these checks can be regenerated, so the images that reach the final dataset meet all three standards.
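To make the flow between the three components concrete, here is a minimal Python sketch of the scan, generate, and check loop. It is not MAGID's implementation: the function names, the `QAThresholds` values, the assumption that the scanner returns an image prompt alongside each selected utterance index, and the retry-with-a-new-seed strategy are all hypothetical. The LLM, the diffusion model, and the CLIP/aesthetic/safety scorers are passed in as plain callables, so stand-ins can be swapped for real models.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple

# All names and threshold values here are hypothetical illustrations,
# not MAGID's actual code or settings.


@dataclass
class Utterance:
    speaker: str
    text: str
    image: Optional[Any] = None  # set only if a generated image passes QA


@dataclass
class QAResult:
    clip_score: float       # image-text match
    aesthetic_score: float  # predicted aesthetic quality
    is_safe: bool           # outcome of a safety filter


@dataclass(frozen=True)
class QAThresholds:
    min_clip: float = 0.30       # illustrative value
    min_aesthetic: float = 0.50  # illustrative value


def passes_qa(result: QAResult, t: QAThresholds) -> bool:
    """Accept an image only if it clears every check."""
    return (
        result.is_safe
        and result.clip_score >= t.min_clip
        and result.aesthetic_score >= t.min_aesthetic
    )


def augment_dialogue(
    dialogue: List[Utterance],
    scan: Callable[[List[Utterance]], List[Tuple[int, str]]],
    generate: Callable[[str, int], Any],
    assess: Callable[[Any, str], QAResult],
    thresholds: QAThresholds = QAThresholds(),
    max_attempts: int = 3,
) -> List[Utterance]:
    # 1. Scanner: pick utterances that would benefit from an image,
    #    each paired with a text prompt describing that image.
    for idx, prompt in scan(dialogue):
        # 2. Generator + 3. QA: retry with a new seed until an image passes.
        for seed in range(max_attempts):
            image = generate(prompt, seed)
            if passes_qa(assess(image, prompt), thresholds):
                dialogue[idx].image = image
                break
        # If every attempt fails, the utterance stays text-only.
    return dialogue


# Usage with stand-in models.
def fake_scan(d: List[Utterance]) -> List[Tuple[int, str]]:
    return [(0, "a snowy alpine hiking trail")]


def fake_generate(prompt: str, seed: int) -> Tuple[str, int]:
    return (prompt, seed)  # a real version would call a diffusion model


def fake_assess(image: Tuple[str, int], prompt: str) -> QAResult:
    # The seed-0 image fails the aesthetic check; seed 1 passes.
    return QAResult(0.32, 0.4 if image[1] == 0 else 0.7, True)


dialogue = [
    Utterance("A", "We just got back from hiking in the Alps!"),
    Utterance("B", "Sounds great. How was the weather?"),
]
augment_dialogue(dialogue, fake_scan, fake_generate, fake_assess)
print(dialogue[0].image)  # ('a snowy alpine hiking trail', 1)
print(dialogue[1].image)  # None
```

Injecting the models as callables keeps the control flow testable without a GPU. In a real setup, `generate` would wrap a Stable Diffusion XL call and `assess` would combine a CLIP scorer, an aesthetic predictor, and a safety classifier.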

Numerical Results and Evaluation

The paper compares MAGID against state-of-the-art (SOTA) baselines using both automated metrics and human evaluation. MAGID is reported to be comparable to or better than the baselines, with the clearest gains in human evaluation against retrieval-based baselines, particularly when their image database is small.

In the automated metrics, MAGID configurations that use GPT-4 or GPT-3.5 as the underlying LLM outperform the other evaluated setups, with higher CLIP and aesthetic scores. The authors take these results as evidence that MAGID can produce synthetic dialogues that rival or exceed existing real-world datasets in quality and relevance.
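The CLIP score used in these comparisons measures how well an image matches its text. A common formulation, sketched below as an assumption since this summary does not give MAGID's exact formula, is the cosine similarity between a CLIP text embedding and a CLIP image embedding, clamped at zero and scaled. The embeddings are assumed to be precomputed by a CLIP model; the scale of 100 is one convention (used, for example, by torchmetrics), while the original CLIPScore metric uses 2.5.

```python
import math
from typing import Sequence, Tuple

Embedding = Sequence[float]


def cosine_similarity(u: Embedding, v: Embedding) -> float:
    """Cosine of the angle between two embedding vectors."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def clip_score(text_emb: Embedding, image_emb: Embedding, scale: float = 100.0) -> float:
    """Scaled cosine similarity, clamped so negatively aligned pairs score 0."""
    return scale * max(cosine_similarity(text_emb, image_emb), 0.0)


def mean_clip_score(
    pairs: Sequence[Tuple[Embedding, Embedding]], scale: float = 100.0
) -> float:
    """Average CLIP score over (text_embedding, image_embedding) pairs."""
    if not pairs:
        raise ValueError("need at least one pair")
    return sum(clip_score(t, i, scale) for t, i in pairs) / len(pairs)


# Toy 2-D "embeddings"; real CLIP embeddings have hundreds of dimensions.
pairs = [
    ([1.0, 0.0], [1.0, 0.0]),  # perfectly aligned -> 100.0
    ([1.0, 0.0], [0.0, 1.0]),  # orthogonal -> 0.0
]
print(mean_clip_score(pairs))  # 50.0
```

Averaging over all image-utterance pairs gives a single dataset-level number, which makes it straightforward to compare a generated dataset against a retrieval-based one.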

Implications and Future Directions

Practically, MAGID enables the creation of large multimodal datasets without the privacy risks of collecting real-world data. More broadly, it supports research on multimodal understanding and generation: datasets like these can help train LLMs to understand and interact in multimodal contexts, with potential benefits for automated assistants, conversational agents, and similar systems.

Future work could extend MAGID to other modalities such as video or audio, broadening the applicability of the generated datasets. Another direction is improving image coherence and reducing visual artifacts by adopting more advanced diffusion models.

In conclusion, MAGID is a substantial advance in synthetic dataset generation, laying a foundation for multimodal research that is more privacy-preserving, diverse, and quality-focused. Beyond challenging current retrieval-based methodologies, the framework invites exploration of broader and more complex synthetic datasets.