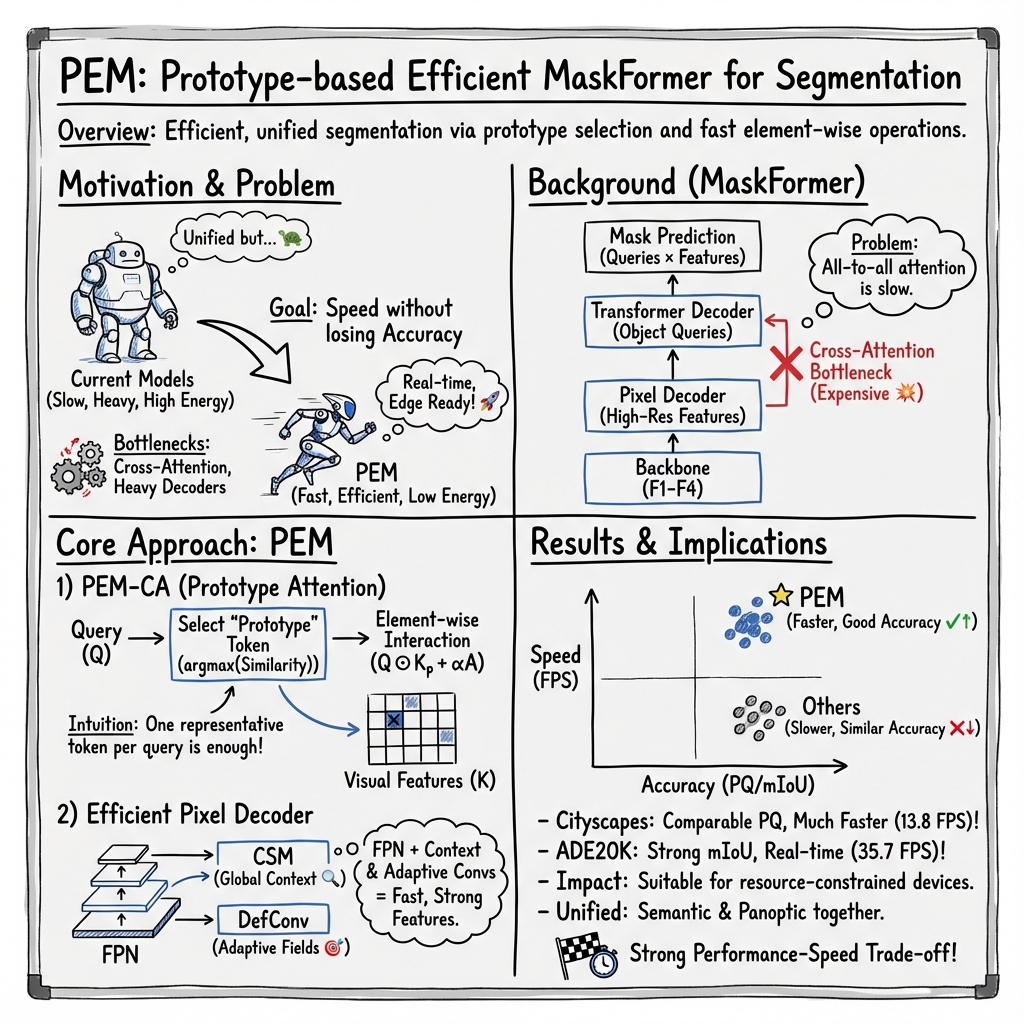

PEM: Prototype-based Efficient MaskFormer for Image Segmentation

Abstract: Recent transformer-based architectures have shown impressive results in image segmentation. Thanks to their flexibility, they achieve outstanding performance on multiple segmentation tasks, such as semantic and panoptic segmentation, within a single unified framework. To reach this level of performance, however, these architectures rely on computationally intensive operations and require substantial resources, which are often unavailable, especially on edge devices. To fill this gap, we propose Prototype-based Efficient MaskFormer (PEM), an efficient transformer-based architecture that can operate on multiple segmentation tasks. PEM proposes a novel prototype-based cross-attention that exploits the redundancy of visual features to restrict computation and improve efficiency without harming performance. In addition, PEM introduces an efficient multi-scale feature pyramid network that extracts features with high semantic content at low cost, thanks to the combination of deformable convolutions and context-based self-modulation. We benchmark PEM on two tasks, semantic and panoptic segmentation, evaluated on two datasets, Cityscapes and ADE20K. PEM demonstrates outstanding performance on every task and dataset, outperforming task-specific architectures while being comparable to, and even better than, computationally expensive baselines.
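The abstract does not spell out how the prototype-based cross-attention or the context-based self-modulation work internally. The NumPy sketch below is one hypothetical reading, not PEM's implementation: it assumes each object query is reduced to its single most similar pixel feature (its "prototype", i.e. hard top-1 attention), and it models self-modulation as a FiLM-style per-channel scale and shift predicted from globally pooled features. All function names, the `scale` parameter, and the weight shapes are illustrative assumptions.

```python
import numpy as np


def standard_cross_attention(q, k, v, scale=None):
    """Dense cross-attention: each of the N queries attends to all HW pixel features."""
    if scale is None:
        scale = 1.0 / np.sqrt(q.shape[-1])
    logits = (q @ k.T) * scale                      # (N, HW) query-pixel logits
    logits -= logits.max(axis=1, keepdims=True)     # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)   # softmax over all pixels
    return weights @ v                              # (N, D) weighted sum over HW


def prototype_cross_attention(q, k, v):
    """Hypothetical prototype variant: each query keeps only its most similar
    pixel feature (hard top-1 attention), skipping the softmax and the
    weighted sum over all HW pixels."""
    sim = q @ k.T                                   # (N, HW) query-pixel similarity
    proto_idx = sim.argmax(axis=1)                  # one prototype index per query
    return v[proto_idx]                             # (N, D) gathered prototypes


def context_self_modulation(x, w_gamma, w_beta):
    """Hypothetical FiLM-style self-modulation of a (C, H, W) feature map:
    a global context vector predicts a per-channel scale and shift."""
    ctx = x.mean(axis=(1, 2))                       # (C,) global average pooling
    gamma = w_gamma @ ctx                           # (C,) per-channel scale offset
    beta = w_beta @ ctx                             # (C,) per-channel shift
    # Residual form: zero weights leave the features unchanged.
    return x * (1.0 + gamma[:, None, None]) + beta[:, None, None]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, HW, D = 8, 64 * 64, 32                       # queries, pixels, channels
    q, k, v = (rng.standard_normal((n, D)) for n in (N, HW, HW))
    print(standard_cross_attention(q, k, v).shape)  # (8, 32)
    print(prototype_cross_attention(q, k, v).shape) # (8, 32)
```

Hard top-1 selection is the zero-temperature limit of the dense softmax, so the two attention functions agree when `scale` is large and each query's best match is unique. The actual PEM layers may add projections or differ in other ways.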
