Enhancing Egocentric Text-Audio Retrieval with LLMs

Introduction to the Study

The rapid growth of audio and video content online makes effective retrieval systems increasingly important. This paper addresses the challenge of searching such content by using large language models (LLMs) to generate audio-centric descriptions from visual-centric ones, with the goal of improving text-audio retrieval. The proposed method maps a description of what is seen in a video to a description of what is likely to be heard, and the paper reports that using these generated descriptions improves retrieval performance. The approach is validated by constructing and evaluating three benchmarks built from egocentric video, showing that LLMs can help bridge the gap between the visual and audio modalities.

Literature Review and Background

The paper sits at the intersection of text-audio retrieval and the use of LLMs for multimodal tasks. It builds on advances in transformer-based models and on work that applies LLMs such as ChatGPT to understanding visual and audio content. Unlike previous work, which relies on audio-centric datasets, the paper generates audio descriptions from visual-centric datasets. It also adds to the growing body of work on egocentric (first-person) data, which has so far been used for tasks such as action recognition and video retrieval, by extending it to the audio domain.

Methodology and Datasets

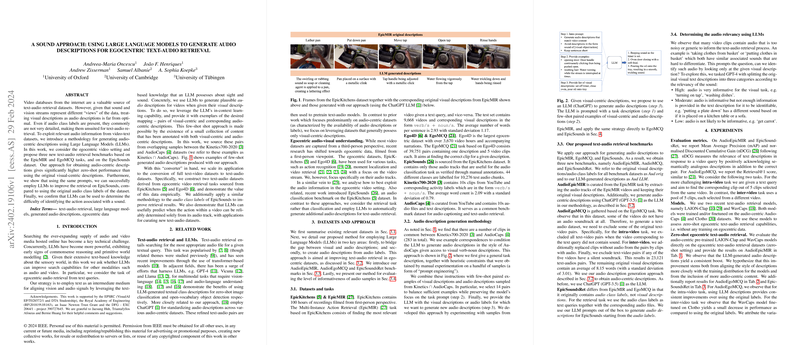

The researchers use several existing datasets: EpicKitchens and Ego4D for egocentric video, and EpicSounds for audio classification. The core method conditions an LLM on paired examples of visual and audio descriptions and then asks it to produce an audio-centric description for a new visual description. The few-shot examples are drawn from the Kinetics700-2020 and AudioCaps datasets, and the results indicate that the LLM can use this context to translate descriptions from one modality to the other.
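The few-shot conditioning step can be sketched as prompt assembly: a handful of paired demonstrations followed by the new visual description with an open slot for the LLM to fill. This is a minimal sketch, not the authors' prompt. The instruction wording, the `Visual:`/`Audio:` format, the `PairedExample` and `build_few_shot_prompt` names, and the demonstration sentences are all hypothetical; the real demonstrations come from Kinetics700-2020 and AudioCaps. The LLM call itself is omitted because the model and API are not specified here.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PairedExample:
    """A hypothetical (visual description, audio description) demonstration pair."""
    visual: str
    audio: str


def build_few_shot_prompt(examples: List[PairedExample], query_visual: str) -> str:
    """Assemble k paired demonstrations, then the query with an empty 'Audio:' slot."""
    lines = [
        "Rewrite each visual description as a description of what can be heard.",
        "",
    ]
    for ex in examples:
        lines.append(f"Visual: {ex.visual}")
        lines.append(f"Audio: {ex.audio}")
        lines.append("")
    # The prompt ends with an open "Audio:" line for the LLM to complete.
    lines.append(f"Visual: {query_visual}")
    lines.append("Audio:")
    return "\n".join(lines)


# Invented illustrative pairs (not taken from Kinetics700-2020 or AudioCaps).
demos = [
    PairedExample("A person chops carrots on a wooden board.",
                  "Rhythmic knife taps against a cutting board."),
    PairedExample("A man pours water into a glass.",
                  "Water splashes and gurgles as it fills a glass."),
]
prompt = build_few_shot_prompt(demos, "Someone opens the fridge and takes out milk.")
print(prompt)
```

Keeping the demonstrations in a fixed, repeated format is a common way to make the LLM's completion easy to parse: the generated audio description is simply the text that follows the final `Audio:`.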

Research Contributions and Findings

New Benchmarks and Audio Description Generation

By applying this method to the EpicMIR and EgoMCQ tasks and to the EpicSounds dataset, the paper curates three new benchmarks for egocentric text-audio retrieval. On these benchmarks, using the LLM-generated audio descriptions in place of the original visual-centric descriptions yields better zero-shot retrieval performance. Notably, on EpicSounds the generated descriptions also prove more effective than the dataset's original audio class labels.
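To make "improved retrieval performance" concrete, a text-to-audio retrieval score can be computed from a similarity matrix between text queries and audio clips. The sketch below uses Recall@K and assumes that query `i` has exactly one correct clip, at index `i`. That is an assumption for illustration: the benchmarks in the paper may use other metrics (for example, multiple-choice accuracy for EgoMCQ), and the similarity values are toy numbers, not results.

```python
from typing import List


def recall_at_k(similarity: List[List[float]], k: int) -> float:
    """Fraction of text queries whose ground-truth audio (assumed to be at
    index i for query i) appears among the top-k most similar clips."""
    hits = 0
    for i, row in enumerate(similarity):
        # Rank audio indices by descending similarity to text query i.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy 3x3 text-by-audio similarity matrix.
sim = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.2, 0.7],
]
print(recall_at_k(sim, 1))  # query 1 ranks clip 2 first, so 2 of 3 queries hit
print(recall_at_k(sim, 2))  # all 3 queries hit within the top 2
```

Comparing description sources then amounts to building one similarity matrix per source (original visual-centric text versus LLM-generated audio-centric text) and comparing the resulting scores.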

Evaluating Audio Relevancy

The research also uses LLMs to judge audio relevancy. The authors show that an LLM can categorize samples by how informative their audio is likely to be, which makes it possible to filter out samples whose audio carries little useful information. This has practical implications for dataset curation and shows that LLMs can play more than one role in a retrieval pipeline.
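The relevancy filter can be sketched as: ask an LLM to rate each text description, parse the rating, and keep only samples above a threshold. This is a hypothetical sketch, not the paper's procedure. The prompt wording, the 1 to 3 scale, the threshold, and the names `RELEVANCE_PROMPT`, `parse_score`, `filter_informative`, and `fake_llm` are assumptions. `fake_llm` is a keyword-based stand-in so the example runs without any model; in practice `ask_llm` would wrap a real LLM call.

```python
import re
from typing import Callable, Iterable, List, Optional

# Hypothetical prompt and 1-3 scale; not the paper's actual wording or scale.
RELEVANCE_PROMPT = (
    "On a scale of 1 (sound is uninformative) to 3 (sound is clearly informative), "
    "how well could the activity below be recognized from audio alone?\n"
    "Description: {description}\n"
    "Score:"
)


def parse_score(reply: str) -> Optional[int]:
    """Return the first standalone digit 1-3 in an LLM reply, or None if absent."""
    match = re.search(r"\b([1-3])\b", reply)
    return int(match.group(1)) if match else None


def filter_informative(
    descriptions: Iterable[str],
    ask_llm: Callable[[str], str],
    min_score: int = 2,
) -> List[str]:
    """Keep descriptions whose LLM relevance score is at least min_score."""
    kept = []
    for desc in descriptions:
        reply = ask_llm(RELEVANCE_PROMPT.format(description=desc))
        score = parse_score(reply)
        # Unparseable replies are treated as uninformative and dropped.
        if score is not None and score >= min_score:
            kept.append(desc)
    return kept


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: rates visually driven, quiet activities low."""
    return "Score: 1" if "looks at" in prompt else "Score: 3"


samples = ["Someone looks at a recipe book.", "Someone fries onions in a pan."]
print(filter_informative(samples, fake_llm))
```

Passing the LLM in as a callable keeps the filtering logic independent of any particular model or API, and dropping unparseable replies errs on the side of a smaller but cleaner dataset.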

Evaluation and Implications

Zero-shot egocentric text-audio retrieval on the new benchmarks supports the effectiveness of the proposed method. Because pre-trained models such as LAION-CLAP and WavCaps are used in a zero-shot setting, with no training on the new benchmarks, the improvements can be attributed to the generated audio descriptions rather than to task-specific fine-tuning.
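Zero-shot retrieval with a dual-encoder model reduces to ranking audio clips by the similarity between a text embedding and each audio embedding. The sketch below assumes the embeddings have already been computed (for instance by a LAION-CLAP or WavCaps encoder, whose APIs are not shown here) and scores them with cosine similarity, a common choice for CLAP-style models. The vectors are toy values, and `l2_normalize`, `cosine_similarity_matrix`, and `rank_audio` are illustrative names.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine_similarity_matrix(text_embs: List[Vector], audio_embs: List[Vector]) -> List[List[float]]:
    """Entry [i][j] is the cosine similarity between text i and audio j."""
    t = [l2_normalize(v) for v in text_embs]
    a = [l2_normalize(v) for v in audio_embs]
    return [[sum(x * y for x, y in zip(ti, aj)) for aj in a] for ti in t]


def rank_audio(text_emb: Vector, audio_embs: List[Vector]) -> List[int]:
    """Return audio indices sorted from most to least similar to one text query."""
    sims = cosine_similarity_matrix([text_emb], audio_embs)[0]
    return sorted(range(len(audio_embs)), key=lambda j: sims[j], reverse=True)


# Toy embeddings standing in for pre-computed encoder outputs.
audio_bank = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.6, 0.8, 0.0]]
query = [0.0, 2.0, 0.1]
print(rank_audio(query, audio_bank))  # [1, 2, 0]
```

Normalizing both sides first means the dot product equals cosine similarity, so the ranking ignores embedding magnitude. No parameters are updated anywhere in this process, which is what makes the setting zero-shot.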

Conclusion and Future Directions

This paper advances text-audio retrieval, particularly for egocentric video data. Its demonstration that LLMs can generate meaningful audio-centric descriptions from visual descriptions offers a promising route to better multimodal retrieval systems. The methods and insights could be extended beyond egocentric datasets to broader text-audio understanding and retrieval tasks. More broadly, the findings suggest that LLMs could improve search across many kinds of multimedia content, leaving ample room for future work on AI-driven multimodal systems.