Enhancing LLMs with "Sum2Act": A Novel Approach for Complex Tasks via Open World APIs

Introduction to Sum2Act

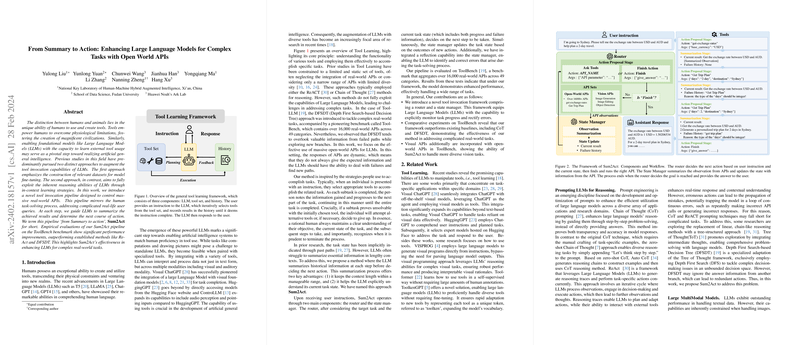

The paper introduces "From Summary to Action" (Sum2Act), a framework that lets large language models (LLMs) make effective use of a large pool of real-world APIs. It is modeled on how people tend to work through a task: summarize what has been achieved so far, then decide what to do next. The resulting Sum2Act pipeline is a tool invocation method aimed at improving LLMs' ability to handle complex real-world tasks.

Overview of Tool Invocation in LLMs

Integrating external tools and APIs is widely seen as an important step for LLMs on the path toward artificial general intelligence. Prior work on tool invocation has mostly either constructed datasets for model fine-tuning or relied on the LLM's inherent reasoning capabilities. Sum2Act instead has the LLM summarize the outcome of each step and use that summary to decide on the next action.

Core Contributions

The paper makes three main contributions to AI and tool learning:

- Introduction of Sum2Act Pipeline: A framework for handling complex tasks with open-world APIs. Its architecture consists of a router and a state manager, which together support dynamic interaction with tools and step-by-step decision-making.

- Empirical Validation: On the ToolBench benchmark, which covers over 16,000 real-world APIs across 49 categories, Sum2Act outperforms existing baselines such as ReAct and DFSDT, supporting the framework's effectiveness in practical settings.

- Integration with Visual APIs: Beyond textual APIs, Sum2Act also adapts to vision tasks, including image generation and editing. This suggests the framework can extend to multimodal scenarios and a broader range of applications.
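
To make the summarize-then-act idea more concrete, here is a minimal Python sketch of a loop with a router and a state manager. It is an illustration under stated assumptions, not the paper's implementation: the names `State`, `manage_state`, `run`, `FINISH`, and the toy weather tools are all hypothetical, and the router is a hand-written rule standing in for what would be an LLM call in the real system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

FINISH = "finish"  # hypothetical sentinel: the router returns this to stop


@dataclass
class State:
    """Running record kept by the (hypothetical) state manager."""
    task: str
    summary: List[str] = field(default_factory=list)   # results achieved so far
    failures: List[str] = field(default_factory=list)  # calls that went wrong


def manage_state(state: State, tool: str, arg: str, result: str, ok: bool) -> None:
    """Record the latest observation as either a result or a failure."""
    note = f"{tool}({arg}) -> {result}"
    (state.summary if ok else state.failures).append(note)


def run(
    task: str,
    tools: Dict[str, Callable[[str], str]],
    router: Callable[[State], Tuple[str, str]],
    max_steps: int = 8,
) -> Tuple[str, State]:
    """Alternate between routing (pick an action) and state updates."""
    state = State(task)
    for _ in range(max_steps):
        tool, arg = router(state)      # decide the next action from the state
        if tool == FINISH:
            return arg, state          # arg carries the final answer
        try:
            result, ok = tools[tool](arg), True
        except Exception as exc:       # unknown tool or failed call
            result, ok = f"error: {exc}", False
        manage_state(state, tool, arg, result, ok)
    return "gave up", state


# Toy tools (assumptions for the demo): the first always fails.
def primary_weather(city: str) -> str:
    raise TimeoutError("upstream timeout")


def backup_weather(city: str) -> str:
    return f"18C in {city}"


def toy_router(state: State) -> Tuple[str, str]:
    """Rule-based stand-in for an LLM router."""
    if state.summary:                  # something succeeded: finish with it
        return FINISH, state.summary[-1]
    if state.failures:                 # a call failed: try a different tool
        return "backup_weather", "Paris"
    return "primary_weather", "Paris"


answer, final_state = run(
    "What is the weather in Paris?",
    {"primary_weather": primary_weather, "backup_weather": backup_weather},
    toy_router,
)
print(answer)
print(final_state.failures)
```

In this toy run the first call fails, the failure is recorded in the state, and the router switches to the backup tool on the next step. The point of the design is that the router reads only the condensed state, not a raw transcript of every call.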

Theoretical and Practical Implications

Sum2Act has both theoretical and practical implications. Theoretically, it refines our understanding of tool learning by framing it in terms that mirror how humans resolve tasks: summarize, then act. Practically, its ability to draw on a large set of real-world APIs is a step toward more capable and autonomous AI systems. Possible application areas range from automated customer service and data analysis to complex problem-solving in scientific research.

Future Directions in AI and Tool Learning

Sum2Act lays a foundation for further work on AI tool learning. Future research could integrate more diverse sets of APIs, including those for advanced scientific computation or real-time data processing. Refining the router and state manager to support more nuanced decision-making and failure analysis could further improve the framework's effectiveness and efficiency.

Conclusion

Sum2Act is a meaningful step toward LLMs that can engage with and solve complex real-world tasks. It gives LLMs a structured mechanism for acting on summary and reflection, and its results on the ToolBench benchmark both demonstrate its immediate utility and offer a reference point for future work on tool learning.