Enhancing Text-to-Image Models with Multi-LoRA Composition

Introduction

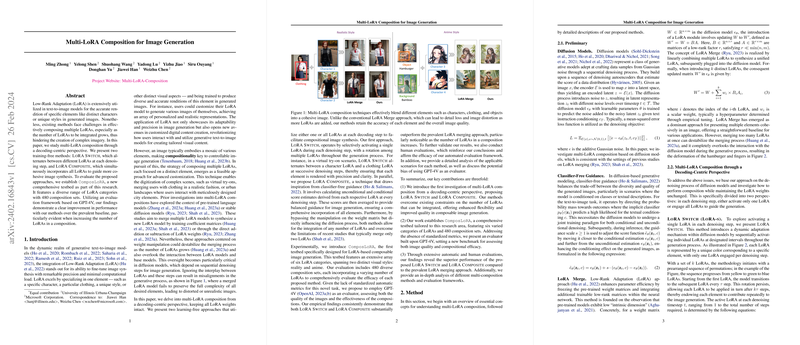

Low-Rank Adaptation (LoRA) lets text-to-image models render specific elements, such as a particular character or style, precisely and at low computational cost. Composing several LoRAs in a single image remains difficult, however, and the difficulty grows with the number of LoRAs. This paper addresses the problem with two training-free methods for multi-LoRA composition: LoRA Switch and LoRA Composite. Both are evaluated on a newly developed testbed, ComposLoRA, where they show a substantial improvement over existing composition techniques.

Multi-LoRA Composition Methodology

Underlying Challenges

Image generation becomes markedly harder as the number of specific elements, and therefore LoRAs, to be integrated grows. Earlier methods scale poorly and struggle to compose multiple LoRAs realistically because they rely on manipulating weights, which often makes the merging process unstable and degrades the interaction between the LoRAs and the base model.
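For context, the weight-manipulation baseline can be pictured as adding each LoRA's low-rank update directly to a base weight matrix. The sketch below is a minimal illustration under that assumption; the helper names (`matmul`, `merge_loras`), the per-LoRA scales, and the toy 2x2 matrices are hypothetical and are not taken from the paper.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_loras(base_weight, loras, scales):
    """Return W' = W + sum_i scale_i * (B_i @ A_i) without modifying W.

    Each LoRA is a (B, A) pair of low-rank factors (assumed layout).
    """
    merged = [row[:] for row in base_weight]
    for (b, a), scale in zip(loras, scales):
        delta = matmul(b, a)  # low-rank update with the same shape as W
        for r, delta_row in enumerate(delta):
            for c, value in enumerate(delta_row):
                merged[r][c] += scale * value
    return merged


# Toy example: a 2x2 base weight and two rank-1 LoRAs.
base = [[1.0, 0.0], [0.0, 1.0]]
lora_one = ([[1.0], [0.0]], [[0.5, 0.0]])   # B is 2x1, A is 1x2
lora_two = ([[0.0], [1.0]], [[0.0, -0.5]])
merged = merge_loras(base, [lora_one, lora_two], [1.0, 1.0])
print(merged)
```

Every additional LoRA perturbs the same shared weights, which is one way to picture why merging becomes less stable as more LoRAs are combined.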

Proposed Solutions

The paper presents two innovative approaches that maintain the integrity of LoRA weights while addressing compositional challenges:

- LoRA Switch (LoRA-s): This approach selectively activates a single LoRA at each denoising step of the image generation process, systematically rotating among multiple LoRAs. It ensures that each element is given focused attention, thus preserving the quality of both the specific elements and the overall image.

- LoRA Composite (LoRA-c): Drawing from the concept of classifier-free guidance, this method calculates unconditional and conditional score estimates for each LoRA at every denoising step. By averaging these scores, it provides balanced guidance for image synthesis, ensuring cohesive integration of all elements.
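The two methods above can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: `ScoreFn` is a hypothetical stand-in for a denoiser with one LoRA active, `switch_every` and `guidance_scale` are assumed parameter names, and averaging the guided estimate of each LoRA is one reading of "averaging these scores".

```python
from typing import Callable, List, Sequence

Vector = List[float]
# Hypothetical interface: (latent, use_prompt) -> score estimate
# produced by the base model with exactly one LoRA enabled.
ScoreFn = Callable[[Vector, bool], Vector]


def active_lora_index(step: int, num_loras: int, switch_every: int = 1) -> int:
    """LoRA Switch: choose the single LoRA to enable at a 0-based step."""
    return (step // switch_every) % num_loras


def cfg_score(score_fn: ScoreFn, latent: Vector, guidance_scale: float) -> Vector:
    """Classifier-free guidance for one LoRA: uncond + s * (cond - uncond)."""
    uncond = score_fn(latent, False)
    cond = score_fn(latent, True)
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


def composite_score(
    score_fns: Sequence[ScoreFn], latent: Vector, guidance_scale: float
) -> Vector:
    """LoRA Composite: average the guided estimates from every LoRA."""
    guided = [cfg_score(fn, latent, guidance_scale) for fn in score_fns]
    return [sum(values) / len(guided) for values in zip(*guided)]


def make_toy_lora(offset: float) -> ScoreFn:
    """Toy denoiser: the prompt shifts every latent value by `offset`."""
    def score_fn(latent: Vector, use_prompt: bool) -> Vector:
        shift = offset if use_prompt else 0.0
        return [x + shift for x in latent]
    return score_fn


# LoRA Switch: rotate among 3 LoRAs, changing every 2 denoising steps.
schedule = [active_lora_index(t, num_loras=3, switch_every=2) for t in range(8)]
print(schedule)  # [0, 0, 1, 1, 2, 2, 0, 0]

# LoRA Composite: combine two toy LoRAs at a single denoising step.
toy_loras = [make_toy_lora(1.0), make_toy_lora(3.0)]
combined = composite_score(toy_loras, [0.0, 2.0], guidance_scale=2.0)
print(combined)  # [4.0, 6.0]
```

Neither function touches the LoRA weights: Switch only decides which LoRA is active at a given step, and Composite only combines the outputs, which is what keeps both approaches training-free.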

Evaluation Framework

A new evaluation framework, ComposLoRA, was established to assess the proposed methods. It features a broad range of LoRA categories and composition sets, and it uses GPT-4V to rate both image quality and composition success. Automated and human evaluations both show LoRA Switch and LoRA Composite outperforming traditional LoRA merging, with the advantage most noticeable as the number of LoRAs in a composition increases.

Implications and Future Directions

The proposed decoding-centric perspective on multi-LoRA composition offers a promising advancement in the field of text-to-image generation. By overcoming the limitations of weight manipulation methods, the paper paves the way for more complex and detailed image generation capabilities. The introduction of the ComposLoRA testbed and the employment of GPT-4V as an evaluator represent significant contributions to the standardization and assessment of image generation tasks.

Future research may optimize the activation sequences and intervals for LoRA Switch, examine how composition quality varies across image styles, and address the positional bias identified in GPT-4V evaluations. The applicability of LoRA-based methods to other domains of AI is a further avenue, with the potential to improve the customization and precision of generative models beyond images.

In conclusion, this paper not only addresses a critical gap in our understanding of multi-LoRA composition but also sets a foundation for future advancements in generative AI, offering both theoretical and practical contributions to the field.