Generative AI Diffusion Models: Techniques, Applications, and Future Directions

Introduction

Generative models have undergone a significant transformation with the introduction of diffusion models, which offer a versatile framework for creating high-quality data samples across images, text, and audio. Diffusion models learn to reverse a gradual noising process, and this denoising formulation has proved able to capture complex data distributions, extending their use to a wide range of creative and data augmentation tasks. This survey paper provides an overview of state-of-the-art (SOTA) techniques in generative AI diffusion models, explores their applications, and discusses the challenges that lie ahead.

Evolution of Generative Models in Vision

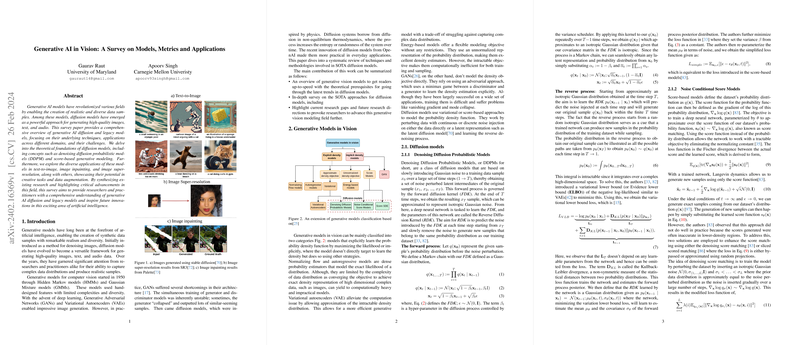

The journey of generative models in vision began with relatively simple models such as Hidden Markov Models (HMMs) and progressed through significant milestones such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), each introducing new capabilities and addressing limitations of previous models. Despite their successes, these models had notable weaknesses: GANs are often unstable to train and prone to mode collapse, VAEs tend to produce blurry samples, and both can struggle with computational efficiency and with capturing highly complex data distributions. The advent of diffusion models, inspired by principles of nonequilibrium thermodynamics, marked a pivotal turn in this landscape, offering an approach in which new samples are generated by gradually denoising random noise.

A Deep Dive into Diffusion Models

Denoising Diffusion Probabilistic Models (DDPMs)

DDPMs are a class of diffusion models built from two processes. A forward process gradually adds Gaussian noise to data over a fixed number of steps, producing a sequence of increasingly noisy samples that ends in nearly pure noise. A reverse process, parameterized by a deep neural network, is trained to undo this corruption one step at a time; at generation time it starts from pure noise and progressively denoises it into a new sample from the data distribution. The amount of noise added at each step is set by a predefined noise schedule, which gives fine control over the corruption and lets DDPMs generate realistic and diverse samples.
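The forward noising step and the noise schedule can be made concrete with a small NumPy sketch. It is illustrative only: the linear schedule and its values (1000 steps, variances from 1e-4 to 0.02) are commonly used defaults assumed here, not settings stated in this survey, and `predict_noise` is a hypothetical stand-in for the denoising network. The sketch relies on the standard closed form that lets a noisy sample at any step be drawn directly from the clean data.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (assumed linear schedule)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Draw x_t from q(x_t | x_0) = N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

def noise_prediction_loss(predict_noise, x0, t, alpha_bar, rng):
    """Mean squared error between the true noise and a model's prediction.

    `predict_noise(x_t, t)` is a hypothetical stand-in for the denoising network.
    """
    x_t, noise = forward_diffuse(x0, t, alpha_bar, rng)
    return float(np.mean((predict_noise(x_t, t) - noise) ** 2))

betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # cumulative product of (1 - beta_t)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))              # toy "image" scaled to [-1, 1]
x_early, _ = forward_diffuse(x0, 10, alpha_bar, rng)   # still close to x0
x_late, _ = forward_diffuse(x0, 999, alpha_bar, rng)   # nearly pure Gaussian noise

# A placeholder "model" that always predicts zero noise, just to exercise the loss.
loss = noise_prediction_loss(lambda x_t, t: np.zeros_like(x_t), x0, 500, alpha_bar, rng)
```

In a real DDPM the placeholder would be a trained network, and sampling would apply the learned reverse step repeatedly starting from Gaussian noise; neither is shown here.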

Noise Conditional Score Models and Stochastic Differential Equations Generative Models

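Closely related to DDPMs, Noise Conditional Score Models (often called Noise Conditional Score Networks, NCSNs) and Stochastic Differential Equations (SDEs) Generative Models approach data generation through the score function, the gradient of the log-density of noise-perturbed data. Noise conditional score models train a network to estimate this score at multiple noise levels and then sample by following the estimated score from high noise to low noise. SDE-based models generalize the idea by describing the noise perturbation as a continuous-time process, so that generation amounts to solving the corresponding reverse-time SDE numerically. This continuous formulation unifies the discrete approaches and allows a trade-off between sample accuracy and sampling efficiency through the choice of numerical solver.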
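To illustrate how a continuous diffusion process and a score function combine into a sampler, here is a minimal sketch under strong simplifying assumptions. The data distribution is a one-dimensional Gaussian, so its score at every noise level is known in closed form and no neural network is needed. The forward process is assumed to be a variance-preserving SDE with a linear noise rate, and the reverse-time SDE is integrated with a plain Euler–Maruyama scheme. All constants and function names are illustrative choices, not values from this survey.

```python
import numpy as np

BETA_MIN, BETA_MAX = 0.1, 20.0   # assumed linear noise-rate schedule beta(t), t in [0, 1]
DATA_MEAN, DATA_STD = 2.0, 0.5   # toy 1-D Gaussian "data" distribution

def beta(t):
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)

def mean_scale(t):
    """Factor by which the forward SDE shrinks the data mean by time t."""
    integral = BETA_MIN * t + 0.5 * t**2 * (BETA_MAX - BETA_MIN)
    return np.exp(-0.5 * integral)

def score(x, t):
    """Exact score of the noise-perturbed marginal p_t (Gaussian, so closed form).

    In practice this function would be replaced by a trained score network.
    """
    m = mean_scale(t)
    var = (DATA_STD * m) ** 2 + 1.0 - m**2
    return -(x - DATA_MEAN * m) / var

def reverse_sde_sample(num_samples=5000, num_steps=1000, seed=0):
    """Euler-Maruyama integration of the reverse-time SDE from t = 1 down to t = 0."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(num_samples)      # start from the N(0, 1) prior
    dt = 1.0 / num_steps
    for i in range(num_steps, 0, -1):
        t = i * dt
        # Reverse drift: f(x, t) - g(t)^2 * score, with f = -0.5*beta*x and g^2 = beta.
        drift = -0.5 * beta(t) * x - beta(t) * score(x, t)
        x = x - drift * dt + np.sqrt(beta(t) * dt) * rng.standard_normal(num_samples)
    return x

samples = reverse_sde_sample()
print(samples.mean(), samples.std())  # should land near DATA_MEAN and DATA_STD
```

Because the score is exact here, the only error sources are time discretization and the finite number of samples; with a learned score, estimation error would be added on top.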

Applications Across Vision Tasks

Generative AI diffusion models have been applied to a wide range of vision tasks, demonstrating their versatility and effectiveness. Notable applications include text-to-image generation, image inpainting, and super-resolution, in which the models synthesize realistic images from textual descriptions, fill in missing or damaged image regions, and increase image resolution, respectively. These applications suggest that diffusion models can address longstanding challenges in computer vision that earlier generative approaches handled less well.

Challenges and Future Directions

Despite their promising advances, generative AI diffusion models face several challenges, such as the need for increased training stability, improved scalability, and enhanced interpretability. Addressing these challenges will be crucial for unlocking the models' full potential and fostering further innovation. Additionally, future research could explore applications in time-series forecasting, develop physics-inspired generative models, and address ethical considerations related to bias and societal impact.

Conclusion

The exploration of generative AI diffusion models marks a significant milestone in the evolution of generative models in vision. By offering a comprehensive overview of current techniques, applications, and challenges, this survey aims to inspire continued research and innovation in this exciting field. As the community tackles existing challenges and ventures into uncharted territories, generative AI diffusion models are poised to revolutionize the landscape of artificial intelligence, opening new avenues for creativity and data augmentation.