Enhancing Sentence Embeddings via Automatically Generated NLI Datasets

Introduction

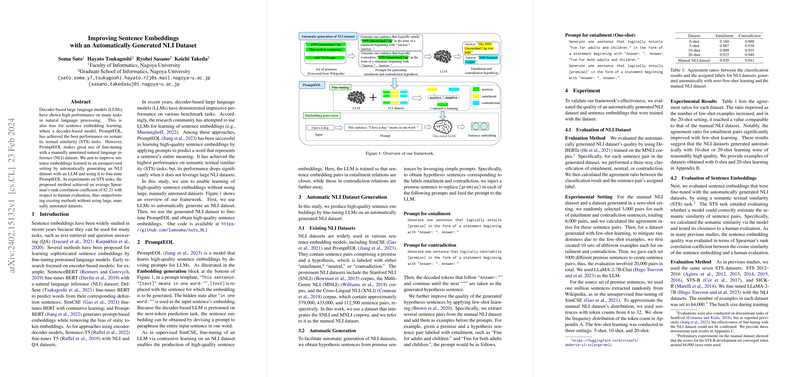

Learning high-quality sentence embeddings has largely relied on fine-tuning pre-trained language models. Historically, encoder-based models such as SentenceBERT and PromptBERT have taken center stage. More recently, decoder-based LLMs have shown promising results across NLP tasks, including Semantic Textual Similarity (STS). A notable advance is PromptEOL, which prompts a decoder-based LLM to express the meaning of an entire sentence in a single word. Although PromptEOL performs strongly on STS tasks, its fine-tuning depends on large, manually annotated Natural Language Inference (NLI) datasets. To address this limitation, the paper introduces a method that uses an LLM to generate NLI datasets automatically and then fine-tunes PromptEOL on them, improving sentence embeddings without extensive manual annotation.

PromptEOL: A Focused Analysis

PromptEOL extracts sentence embeddings from decoder-based LLMs through prompting. A specially crafted prompt asks the model to condense the meaning of a sentence into a single word, which aligns embedding extraction with the next-token prediction objective used in LLM pre-training. Fine-tuning on NLI datasets then refines the embeddings using entailment and contradiction relations between sentences, which supply the semantic signal needed for high-quality embeddings.
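The prompting step can be illustrated with a minimal sketch. The template wording below is an assumption based on the one-word summarization idea described above and should be checked against the original PromptEOL paper. The helper names `build_prompt` and `last_token_embedding` are hypothetical, and the hidden states are assumed to come from a decoder-based LLM that has already processed the prompt.

```python
from typing import List, Sequence

# Assumed template wording: the prompt stops right before the one-word answer,
# so the model's next-token prediction has to summarize the sentence.
PROMPT_TEMPLATE = 'This sentence : "{sentence}" means in one word: "'


def build_prompt(sentence: str) -> str:
    """Wrap a sentence in the one-word summarization prompt."""
    return PROMPT_TEMPLATE.format(sentence=sentence)


def last_token_embedding(hidden_states: Sequence[Sequence[float]]) -> List[float]:
    """Take the hidden state at the final prompt position as the embedding.

    `hidden_states` holds one vector per prompt token (for example, the last
    layer of the LLM). The final position is where the model would predict
    the single summarizing word.
    """
    if not hidden_states:
        raise ValueError("hidden_states must contain at least one token")
    return list(hidden_states[-1])
```

In practice the hidden states would be produced by running the LLM on `build_prompt(sentence)`; the sketch only shows which position is read out.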

Automatic NLI Dataset Generation

The core contribution of the paper is a method for generating NLI datasets automatically. Simple prompts instruct an LLM to transform each premise sentence into a hypothesis sentence labeled as either entailment or contradiction, which avoids extensive manual annotation. To improve the quality of the generated hypotheses, the paper adds few-shot learning, increasing the number of in-context examples from 0-shot to 20-shot. With 20-shot learning, the generated data is reported to match the quality of manually curated datasets.
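A rough sketch of how such a few-shot generation prompt might be assembled is shown below. The instruction wording, the `Premise:`/`Hypothesis:` layout, and the function name `build_generation_prompt` are all hypothetical; the paper's exact prompts are not reproduced here. Passing an empty list of shots yields the 0-shot prompt, and passing 20 example pairs yields the 20-shot prompt.

```python
from typing import List, Tuple

# Hypothetical instructions, one per target label.
INSTRUCTIONS = {
    "entailment": "Write a sentence that must be true if the premise is true.",
    "contradiction": "Write a sentence that must be false if the premise is true.",
}


def build_generation_prompt(
    premise: str, label: str, shots: List[Tuple[str, str]]
) -> str:
    """Build a k-shot prompt asking an LLM for a hypothesis with the given label.

    `shots` is a list of (premise, hypothesis) example pairs for that label.
    The prompt ends with an open "Hypothesis:" slot for the model to complete.
    """
    if label not in INSTRUCTIONS:
        raise ValueError(f"unknown label: {label!r}")
    lines = [INSTRUCTIONS[label], ""]
    for example_premise, example_hypothesis in shots:
        lines += [
            f"Premise: {example_premise}",
            f"Hypothesis: {example_hypothesis}",
            "",
        ]
    lines += [f"Premise: {premise}", "Hypothesis:"]
    return "\n".join(lines)
```

Calling the function once with `"entailment"` and once with `"contradiction"` for the same premise gives the two prompts needed to produce one premise with both kinds of hypothesis.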

Empirical Evaluation

The empirical evaluation assesses both the quality of the generated NLI dataset and its effectiveness for training PromptEOL on STS tasks. The model fine-tuned on data generated with 20-shot learning achieved scores that rivaled those obtained with manually annotated datasets, indicating that automatically generated NLI data can support learning high-quality sentence embeddings. The model reached an average Spearman’s rank correlation coefficient of 82.21 on STS benchmarks, outperforming existing unsupervised approaches.
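For readers unfamiliar with the metric, the following sketch shows how a Spearman score on an STS dataset can be computed in plain Python. It assumes the common STS setup in which each sentence pair is scored by the cosine similarity of its two embeddings and then compared with human similarity ratings; the function names are illustrative and not taken from the paper.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(predicted: Sequence[float], gold: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    if len(predicted) != len(gold) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    return pearson(average_ranks(predicted), average_ranks(gold))
```

STS results are conventionally reported as the correlation multiplied by 100, so a reported score of 82.21 corresponds to a coefficient of about 0.82.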

Conclusion and Prospects for Future Work

The proposed framework offers a novel pathway to obtain sentence embeddings by leveraging automatically generated NLI datasets, significantly reducing the dependency on large, manually annotated corpora. While the results on STS tasks are promising, the paper also acknowledges limitations, including the exclusive use of the Llama-2-7b model and the focus on English. Future explorations could extend to other LLMs and languages to broaden the applicability and utility of this approach.

Overall, this research points toward more efficient methods for learning sentence embeddings, which could be adapted to additional languages and models in future work.