Enhancing Generalization in Tool Use for LLMs through Self-Verification

Introduction to Tool Use in LLMs

Integrating external tools into large language models (LLMs) greatly extends their usefulness in real-world applications. However, tools evolve rapidly: they are updated frequently and gain new functionality, so a model cannot be expected to have been trained on every tool it will be asked to use. The usual way to add new tools has been few-shot prompting, in which demonstrations of each tool's usage are placed in the prompt. Such methods often fall short when the set of candidate tools is large and varied, especially when the model must tell apart tools that differ only in fine details.

Introducing ToolVerifier

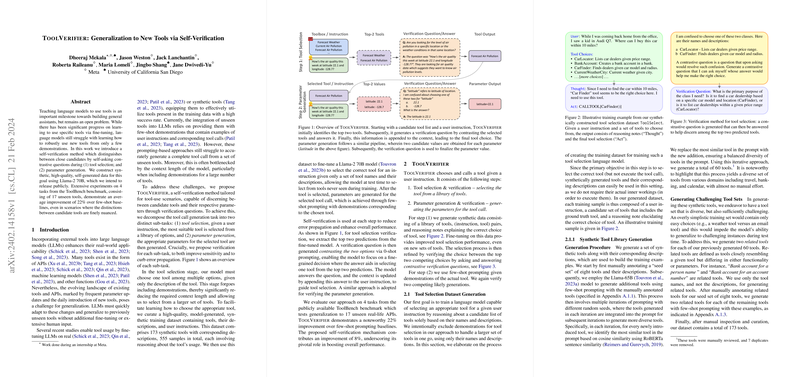

The paper introduces ToolVerifier, a self-verification method that improves both tool selection and parameter generation. ToolVerifier has the model ask itself contrastive questions at two stages:

- Tool selection: choosing the most appropriate tool from a set of candidates, given the user instruction.

- Parameter generation: producing the arguments the selected tool needs and verifying them.
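The two stages can be pictured with a small sketch. It is a minimal illustration under stated assumptions, not the paper's implementation: the `llm` callable, the prompt wording, the helpers `select_tool` and `generate_params`, and the canned `fake_llm` stand-in are all hypothetical, and the parameter check is a simplified confirm-or-correct question rather than the paper's exact verification prompt.

```python
from typing import Callable, Dict, List

# Hypothetical interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def select_tool(llm: LLM, instruction: str, tools: Dict[str, str]) -> str:
    """Stage 1: shortlist two tools, then ask a contrastive question to choose between them."""
    catalog = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    ranked = llm(f"Instruction: {instruction}\nTools:\n{catalog}\n"
                 "Name the two most suitable tools, best first, comma-separated.")
    # Keep only names that actually exist in the catalog.
    names = [n.strip() for n in ranked.split(",") if n.strip() in tools]
    if not names:
        raise ValueError(f"model named no known tool: {ranked!r}")
    if len(names) == 1:
        return names[0]
    first, second = names[0], names[1]
    # Contrastive self-question: which of the two close candidates fits the instruction?
    answer = llm(f"Instruction: {instruction}\n"
                 f"Should we use {first} ({tools[first]}) or {second} ({tools[second]})? "
                 "Answer with the tool name only.").strip()
    # Fall back to the first-ranked tool if the answer is not one of the two candidates.
    return answer if answer in (first, second) else first


def generate_params(llm: LLM, instruction: str, tool: str, params: List[str]) -> Dict[str, str]:
    """Stage 2: draft each argument, then ask the model to confirm or correct it."""
    values: Dict[str, str] = {}
    for p in params:
        draft = llm(f"Instruction: {instruction}\nTool: {tool}\n"
                    f"Value for parameter '{p}':").strip()
        checked = llm(f"Instruction: {instruction}\nTool: {tool}\n"
                      f"Is '{draft}' the correct value for '{p}'? "
                      "Reply with the correct value only.").strip()
        # Use the verified value; keep the draft if verification returns nothing.
        values[p] = checked or draft
    return values


def fake_llm(prompt: str) -> str:
    """Canned replies so the sketch runs without a real model."""
    if "two most suitable" in prompt:
        return "get_weather_forecast, get_current_weather"
    if "Should we use" in prompt:
        return "get_current_weather"
    return "Paris"


tools = {
    "get_current_weather": "current conditions in a city",
    "get_weather_forecast": "weather for the coming days in a city",
}
instruction = "What is the weather in Paris right now?"
tool = select_tool(fake_llm, instruction, tools)
params = generate_params(fake_llm, instruction, tool, ["city"])
print(tool, params)  # get_current_weather {'city': 'Paris'}
```

Here the first-ranked tool is the forecast, and the contrastive question corrects the choice to the current-weather tool. Shortlisting exactly two candidates keeps the question focused on the pair most likely to be confused; a real system would replace `fake_llm` with a call to an actual model.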

The approach relies on a synthetic dataset generated with Llama-2 70B, which focuses on reasoning about what each of a diverse set of tools does and when to use it. In experiments across several tasks, ToolVerifier improves on few-shot baselines by 22% on average, and it is particularly effective when the candidate tools differ only in fine details.

Dataset and Methodology

The methodology builds on a new dataset of synthetic tools paired with user instructions, used to train the model to select the correct tool and generate its parameters. The dataset covers a wide variety of tools and deliberately includes closely related ones, so that the model learns to discriminate between them. Each training sample also includes a reasoning note explaining why a particular tool was selected, which is intended to teach the model the context and purpose of each tool.
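One way to picture a training sample is sketched below. The field names, the `word_overlap` similarity and the `pick_candidates` helper are hypothetical: they illustrate pairing an instruction with closely related distractor tools and a reasoning note, not the paper's actual data format or its Llama-2 70B generation procedure.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Tool:
    name: str
    description: str
    params: List[str]


@dataclass
class Sample:
    instruction: str
    candidates: List[Tool]    # gold tool plus look-alike distractors
    gold_tool: str
    reasoning: str            # note explaining why gold_tool fits the instruction
    arguments: Dict[str, str]


def word_overlap(a: str, b: str) -> float:
    """Jaccard similarity of description words: a crude proxy for 'closely related'."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def pick_candidates(gold: Tool, pool: List[Tool], k: int, seed: int = 0) -> List[Tool]:
    """Return the gold tool plus the k-1 most similar other tools, in shuffled order."""
    others = sorted(
        (t for t in pool if t.name != gold.name),
        key=lambda t: word_overlap(gold.description, t.description),
        reverse=True,
    )
    candidates = [gold] + others[: k - 1]
    random.Random(seed).shuffle(candidates)  # so the gold tool is not always listed first
    return candidates


pool = [
    Tool("get_current_weather", "get the current weather for a city", ["city"]),
    Tool("get_weather_forecast", "get the weather forecast for a city", ["city"]),
    Tool("convert_currency", "convert an amount between two currencies", ["amount", "src", "dst"]),
    Tool("send_email", "send an email to a recipient", ["to", "body"]),
]
gold = pool[1]
sample = Sample(
    instruction="Will it rain in Paris tomorrow?",
    candidates=pick_candidates(gold, pool, k=2),
    gold_tool=gold.name,
    reasoning="The user asks about tomorrow, so a forecast is needed, not current conditions.",
    arguments={"city": "Paris"},
)
print(sorted(t.name for t in sample.candidates))  # ['get_current_weather', 'get_weather_forecast']
```

Choosing the most similar tools as distractors forces the reasoning note to name the distinguishing detail (here, "tomorrow" versus "right now"), which is the kind of fine distinction the dataset is meant to teach.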

Experimental Results

ToolVerifier was evaluated on four tasks from the ToolBench benchmark, which together involve 17 tools unseen during training, and it outperforms few-shot and other prompting baselines by a significant margin. The self-verification mechanism on its own accounts for an 8% gain in accuracy. These results indicate that the model generalizes to a broad range of tools, and that both the synthetic dataset and the verification step help it distinguish similar tools and generate correct parameters.
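Gains such as the 8% above (or the 22% average) can be read as absolute percentage points or as a relative increase, and the summary does not say which. A toy calculation shows why the distinction matters. The predictions below are invented for illustration and are not results from the paper; the helpers `accuracy` and `improvement` are hypothetical.

```python
from typing import Sequence, Tuple


def accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of examples where the predicted tool exactly matches the reference tool."""
    if len(predicted) != len(gold) or not gold:
        raise ValueError("need equally long, non-empty sequences")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def improvement(baseline: float, system: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = (system - baseline) * 100
    relative = (system - baseline) / baseline * 100
    return absolute, relative


# Invented toy predictions for an ablation: same model, verification off vs. on.
gold = ["forecast", "current", "forecast", "email", "current"]
without_verify = ["current", "current", "forecast", "email", "forecast"]  # 3 of 5 correct
with_verify = ["forecast", "current", "forecast", "email", "forecast"]    # 4 of 5 correct

base, full = accuracy(without_verify, gold), accuracy(with_verify, gold)
abs_gain, rel_gain = improvement(base, full)
print(f"{base:.0%} -> {full:.0%}: +{abs_gain:.0f} points, +{rel_gain:.0f}% relative")
```

The same change from 60% to 80% accuracy is a 20-point gain but a 33% relative gain, so reported improvements should be compared only when their definition is known.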

Theoretical and Practical Implications

From a theoretical perspective, the work extends self-verification methods to tool use and shows that they can substantially improve LLM performance in this setting. Practically, a robust mechanism for integrating a diverse range of tools with minimal human intervention opens the way to more capable and versatile AI systems. The work also lays a foundation for further research on self-verification, potentially leading to models that adapt seamlessly as the landscape of digital tools evolves.

Future Directions

The paper points to several directions for future work. Extending the method to compositions of tools and multi-step operations could considerably broaden the range of tasks LLMs can perform. Refining the self-verification process to reduce errors, and applying it to more capable models, could further improve both tool use and generalization.

Conclusion

ToolVerifier represents a significant step toward improving the tool-use capabilities of LLMs. By addressing the challenges of integrating new tools and generalizing to them, the work advances our understanding of the problem and opens up possibilities for future research and practical applications in this field.