Beyond Probabilities: A Critical Examination of Evaluation Methods for LLMs

Introduction

As the field of NLP continues to expand, LLMs have taken center stage due to their unprecedented capabilities across a myriad of applications. The scalability of these models, often comprising billions to trillions of parameters, has been met with novel challenges in their evaluation. Traditional evaluation frameworks predominantly rely on probability-based methods to gauge LLM performance, especially in predictive tasks. These methods typically entail the selection of answers with the highest output probabilities from LLMs when confronted with Multiple Choice Questions (MCQs). This paper critically assesses the effectiveness of such evaluation practices in reflecting the true capabilities of LLMs, especially in scenarios mimicking real-world applications.

Evaluation Misalignment

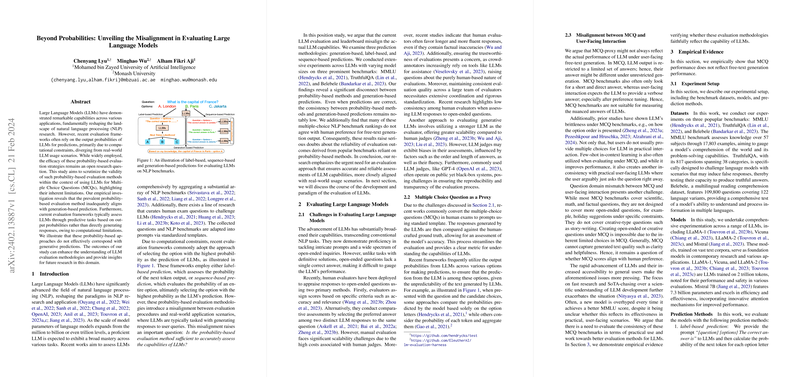

Our investigation into current LLM evaluation methodologies exposes a significant gap between traditional probability-based evaluation strategies and the generative nature of LLM applications in practical settings. Notably, these evaluation frameworks often fail to accurately capture the essence of generative predictions, which constitutes a substantial portion of LLM use cases. Through extensive experimentation involving LLMs of varied sizes across prominent benchmarks such as MMLU, TruthfulQA, and Belebele, it is evident that there exists a disconnect between the outcomes of probability-based methods and generation-based predictions. Even when predictions are aligned, the inconsistency in the accuracy and alignment of the evaluated models’ performance is noteworthy. This disparity raises critical concerns regarding the reliability of conventional benchmarks reliant on probability-based evaluation methods for LLMs.

Challenges in Current Evaluation Practices

The existing evaluation methodologies face several challenges, including:

- Scalability and Reproducibility: Human evaluations, though considered the gold standard, are not scalable and pose significant challenges in ensuring reproducibility and consistency across evaluators.

- Dependency on Restricted Responses: MCQ-based evaluations limit LLMs to a constrained set of responses, potentially misrepresenting a model's generative capabilities in unrestricted, user-facing scenarios.

- Discrepancy with Human Preferences: Our findings suggest that MCQ benchmarks may not accurately reflect human preferences, particularly in open-ended or creative tasks.

This critique underscores the urgent need for a paradigm shift in evaluating LLM capabilities, moving beyond probabilities and towards methods that encapsulate the generative and contextual richness of LLM outputs.

The Path Forward

In light of these findings, we advocate for a comprehensive reevaluation of LLM benchmarks and suggest the following directions for future research:

- Development of Holistic Evaluation Frameworks: Future efforts should focus on crafting evaluation protocols that extend beyond traditional benchmarks to encompass a more diverse array of LLM capabilities, including free-text generation and contextual understanding.

- Emphasis on Slow Research: By prioritizing a deeper understanding of LLM development over leaderboard-chasing, we can foster more robust and meaningful advancements in the field.

- Alignment with Real-World Applications: Evaluation methods should strive to reflect the practical utility of LLMs, ensuring that advancements translate effectively into tangible benefits in real-world scenarios.

Conclusion

Our critical examination of current evaluation methods for LLMs reveals a fundamental misalignment with the practical utility of these models. This discord underscores the necessity for the development of more nuanced and reflective evaluation frameworks that account for the diverse and generative nature of LLM applications. As the field progresses, embracing these recommendations will be paramount in accurately charting the course of LLM advancements and their implications for both theoretical research and practical applications.